Icon generation model based on GANs

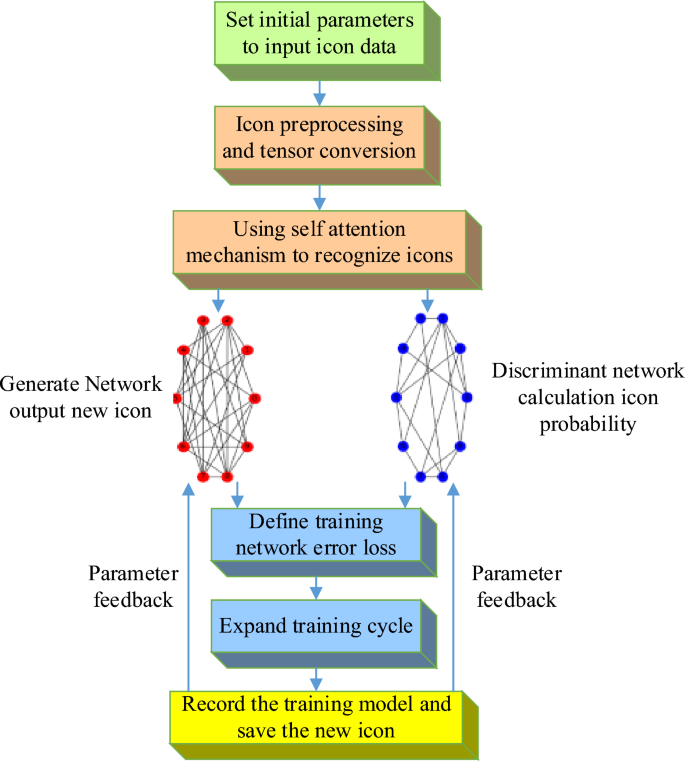

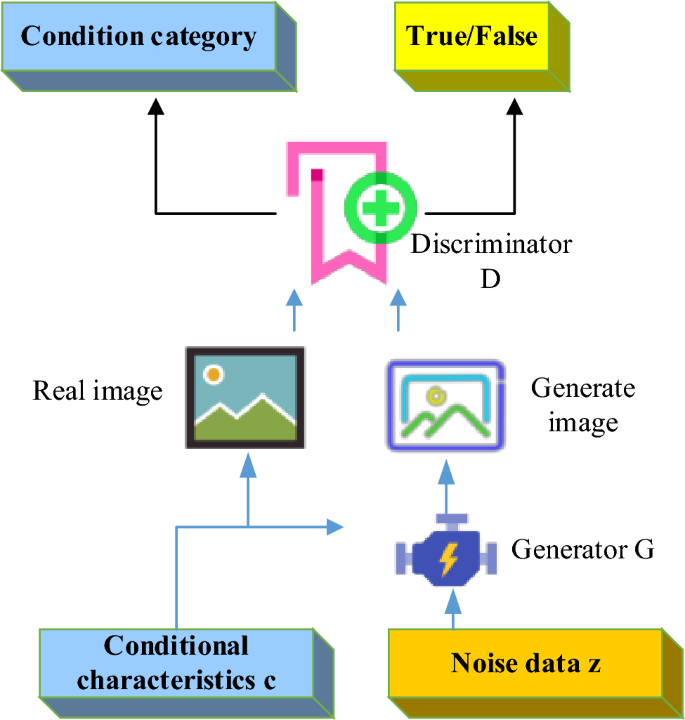

A GAN is a network structure composed of a generator and a discriminator. The generator aims to produce samples that resemble real data, while the discriminator aims to distinguish generated samples from real data. Through continuous adversarial learning, the generator gradually improves the fidelity of its samples until they can be mistaken for real ones. The core idea of the GAN is therefore to train a generative model through adversarial training of the generator and the discriminator18,19,20. The structure of the GAN is shown in Fig. 1. The left side of Fig. 1 shows the generator network G, which takes random noise z as input and outputs new data G(z), with the aim of making the distribution of G(z) as close as possible to the real data distribution. The architecture of the generator network can be seen as the reverse of the discriminator network: it creates new data, with or without a target condition, through multiple deconvolution layers. The quality of the generator depends on how its structure and parameters are optimized. Training a generative network and sampling from it also tests the ability to represent and compute with high-dimensional probability distributions.

Structural schematic diagram of GAN.

The generator is a key component of the icon GAN (IconGAN). Its task is to take a random noise vector (usually denoted z) and transform it into a new icon that is as similar as possible to a real icon. The generator adopts a multi-layer deconvolution network structure that progressively adds detail and features until a complete icon is produced. The optimization goal of the generator is to make the generated icons realistic enough to mislead the discriminator.

The discriminator is the other important component of IconGAN. Its task is to evaluate how closely a generated icon resembles a real icon. The input of the discriminator can be either a real icon or an icon produced by the generator, and its goal is to tell the two apart. By repeatedly judging generated and real icons, the discriminator gradually learns to distinguish between them21,22. The optimization goal of the discriminator is to improve this judgment so that it identifies generated icons more reliably.

The training process of IconGAN is based on adversarial learning between the generator and the discriminator. In each training iteration, the generator produces a batch of icons, and the discriminator judges these generated icons against real ones. The generator tries to deceive the discriminator by producing icons that are hard to tell apart from real icons, while the discriminator tries to determine accurately which icons are generated and which are real. Through continuous adversarial learning, the generator gradually improves the quality of its icons, making them more realistic.

This basic framework allows IconGAN to be applied widely in icon design23,24,25. By optimizing the structure and parameters of the generator and discriminator, IconGAN can generate high-quality icons similar to real icons. The generated icons can be customized according to specific design requirements to meet the needs of different application scenarios, such as mobile applications, website design, and brand identification. Figure 2 shows the basic framework of icon GANs.

Basic framework for icon GANs.
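The opposing objectives of the generator and discriminator described above can be illustrated with a minimal sketch. It assumes the standard binary cross-entropy formulation of the GAN losses, which the text does not spell out; the function names and the example probabilities are hypothetical and chosen only for illustration.

```python
import math

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(real_probs, fake_probs):
    """Mean BCE where real icons are labelled 1 and generated icons 0."""
    losses = [bce(p, 1) for p in real_probs] + [bce(p, 0) for p in fake_probs]
    return sum(losses) / len(losses)

def generator_loss(fake_probs):
    """Generator wants its icons to be scored as real (target 1)."""
    return sum(bce(p, 1) for p in fake_probs) / len(fake_probs)

# Hypothetical discriminator outputs D(x) and D(G(z)) for a batch of three icons.
real_probs = [0.9, 0.8, 0.95]
fake_probs = [0.1, 0.3, 0.2]

d_loss = discriminator_loss(real_probs, fake_probs)
g_loss = generator_loss(fake_probs)
print(f"d_loss={d_loss:.3f}, g_loss={g_loss:.3f}")
```

In this toy batch the discriminator is confident and mostly correct, so its loss is small while the generator's loss is large; as the generator improves, the two values move in opposite directions.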

Identification module incorporating multiple features

In a traditional GAN, the discriminator usually relies only on global features to judge the authenticity of generated samples. As a result, the generated samples are often not realistic enough in their details, and instabilities such as mode collapse can occur during training26,27. The multi-scale discriminator is an improved approach that captures image information more comprehensively by introducing discriminators at different scales. In an image generation task, discriminators can be trained simultaneously on the original image and on reduced-size versions of it28,29,30. Such a multi-scale discriminator captures features at different levels, from fine detail to global structure, improving the authenticity and diversity of generated samples.
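The multi-scale idea can be sketched as follows: the same icon is presented at full size and at reduced sizes, with one discriminator per scale. This is a simplified illustration, not the configuration used here. The 2x2 average pooling, the averaging of per-scale scores, and the stand-in `mean_intensity` "discriminator" are all assumptions made for the example.

```python
def downsample_2x(image):
    """Halve height and width by averaging each non-overlapping 2x2 block."""
    h, w = len(image), len(image[0])
    return [
        [
            (image[r][c] + image[r][c + 1]
             + image[r + 1][c] + image[r + 1][c + 1]) / 4.0
            for c in range(0, w - 1, 2)
        ]
        for r in range(0, h - 1, 2)
    ]

def image_pyramid(image, num_scales):
    """Return [original, half size, quarter size, ...] with num_scales entries."""
    scales = [image]
    for _ in range(num_scales - 1):
        scales.append(downsample_2x(scales[-1]))
    return scales

def multi_scale_score(image, discriminators):
    """Apply one discriminator per scale and average the scores (assumed rule)."""
    pyramid = image_pyramid(image, len(discriminators))
    scores = [d(x) for d, x in zip(discriminators, pyramid)]
    return sum(scores) / len(scores)

def mean_intensity(img):
    """Stand-in for a trained discriminator: returns the mean pixel value."""
    return sum(sum(row) for row in img) / (len(img) * len(img[0]))

# Toy 4x4 grayscale icon.
icon = [[0.0, 1.0, 0.0, 1.0],
        [1.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 1.0, 1.0],
        [0.0, 0.0, 1.0, 1.0]]

pyramid = image_pyramid(icon, 2)
score = multi_scale_score(icon, [mean_intensity, mean_intensity])
```

The checkerboard texture in the top half of the icon is visible only at full resolution; after downsampling it becomes a flat 0.5, which is why discriminators at different scales respond to different kinds of features.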

The multi-level feature discriminator proposed here is structured as multiple sub-discriminators. Each sub-discriminator is responsible for a different level of features, such as low-level textures or high-level semantics. This design enables the discriminator to analyze images at multiple levels of abstraction, thereby improving its judgment ability and the diversity of generated samples. A multimodal discriminator, in turn, is a model component that integrates multiple features or data modalities and considers different aspects of an image, such as appearance and semantic information. In deep learning, this may involve multiple neural network branches, each specialized in processing a specific data type (such as images, text, or sound). The outputs of these branches may be integrated into a common representation or used for different tasks, such as classification and generation. The multimodal discriminator helps the model understand and exploit the relationships among different data types, thereby improving its performance. By introducing a multimodal discriminator, the model can attend to several aspects of the image at once and evaluate the authenticity of generated samples more accurately, which helps produce more creative and diverse samples.

The improved Auxiliary Classifier GAN (ACGAN) differs significantly from the auxiliary GAN. In ACGAN, the generator still receives the conditional features, but the design of the discriminator differs from traditional methods. The conditional features are no longer fed directly into the discriminator; instead, they appear at its output through a conditional feature recognition module. This makes the task of the discriminator clearer: it checks whether the input image exhibits the conditional features given to the generator. In ACGAN, therefore, the generator is responsible for producing images that satisfy the conditional features, while the discriminator is responsible for verifying that the images satisfy them. The model structure is given in Fig. 3. This method captures important conditional features of the image, such as color and shape, more effectively, making the generated image closer to reality.

Icon generation model based on ACGAN.

With the Wasserstein GAN (WGAN) model, the stability and generation efficiency of ACGAN can be further improved31,32. The distance between distributions is measured using the Wasserstein distance. The model also introduces the color condition feature c and the shape condition feature s. In this way, the Auxiliary Classifier Wasserstein GAN (ACWGAN) model is formed, and the loss functions of the discriminator and generator are:

$$L_{D} = E_{z\sim p_{z}\left( z \right)} \left[ f\left( G\left( z \mid c,s \right) \mid c,s \right) \right] - E_{x\sim p_{r}\left( x \mid c,s \right)} \left[ f\left( x \mid c,s \right) \right]$$

(1)

$$L_{G} = - E_{z\sim p_{z}\left( z \right)} \left[ f\left( G\left( z \mid c,s \right) \mid c,s \right) \right]$$

(2)

The function \(f\) must satisfy a Lipschitz constraint, and its parameters are obtained through neural network training. To enforce the Lipschitz constraint, the parameters \(\omega\) of \(f\) are clipped to the range set by a preset cutoff parameter \(h\) after each parameter update. In addition, the conditional features of the model consist of the color condition feature \(c\) and the shape condition feature \(s\), which jointly influence the generation of icons. If necessary, further features can be added to make the generated icons more targeted.
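Equations (1) and (2) and the clipping step can be sketched on a batch of critic outputs as follows. This is a minimal illustration under stated assumptions: expectations are replaced by batch means, clipping is interpreted as clamping each parameter to the interval [-h, h], and the score values and h = 0.01 are hypothetical.

```python
def critic_loss(fake_scores, real_scores):
    """Eq. (1): mean score on generated icons minus mean score on real icons."""
    return sum(fake_scores) / len(fake_scores) - sum(real_scores) / len(real_scores)

def generator_loss(fake_scores):
    """Eq. (2): negative mean score on generated icons."""
    return -sum(fake_scores) / len(fake_scores)

def clip_weights(weights, h):
    """Clamp every parameter of f to [-h, h] after an update (assumed reading)."""
    return [max(-h, min(h, w)) for w in weights]

# Hypothetical outputs f(G(z|c,s)|c,s) and f(x|c,s) for one batch.
fake_scores = [-0.5, 0.25, -0.25]
real_scores = [1.0, 0.5, 1.5]

l_d = critic_loss(fake_scores, real_scores)
l_g = generator_loss(fake_scores)

# Hypothetical parameter vector and cutoff h.
clipped = clip_weights([0.5, -0.002, 0.01, -0.3], h=0.01)
```

Minimizing `l_d` pushes the scores of real icons up and those of generated icons down, while minimizing `l_g` pushes the scores of generated icons up.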

After the icon generation model has been selected and constructed, the training method and evaluation strategy of the model must be specified. Here, the initial ACGAN is improved, and a multi-class GAN model is proposed. Training is iterative and batch-based: data are drawn from the dataset to form batches, and each batch is used for one training step. The specific training process is shown in Table 1.

Construction of an icon generation network for conditionally assisted improvement

Conditional inputs, usually specified features or parameters, are introduced into the icon generation network to customize the generated icons. These conditions can be color, shape, size, and style. Conditional input guides the generator to generate icons with specific styles or features, achieving customized generation. The appropriate generator and discriminator network architecture is selected to adapt to conditional inputs. The generator needs to combine conditional input with random noise to generate icons that match the conditions. The discriminator needs to determine whether the generated icon meets the conditions33,34.

In the generator network, the previously obtained label must first be converted into a label tensor (label_tensor) suitable for matrix computation. The label tensor is passed through an embedding layer, which is configured with the number of labels (num_classes), and then through a flatten layer, which converts the two-dimensional output matrix into a one-dimensional vector. This vector is then combined with the noise through the superposition function, so that noise and label information together form the new input of the generator network. The conditional variable C thus acts as the constraining design concept in icon design, restricting the design direction and the output of the trained network.
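The label-conditioning path of the generator can be sketched in plain Python. This is an illustration under assumptions, not the implementation used here: the embedding table is filled with random values instead of trained weights, the "superposition function" is taken to be element-wise multiplication, and the helper names (`make_embedding_table`, `embed`, `flatten`, `generator_input`) and the sizes are hypothetical.

```python
import random

def make_embedding_table(num_classes, latent_dim, seed=0):
    """Stand-in for a trainable embedding layer: one vector per label."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
            for _ in range(num_classes)]

def embed(label_tensor, table):
    """Embedding lookup: k labels become a k x latent_dim matrix."""
    return [table[label] for label in label_tensor]

def flatten(matrix):
    """Convert a two-dimensional matrix into a one-dimensional vector."""
    return [value for row in matrix for value in row]

def generator_input(noise, label, table):
    """Combine noise and label by element-wise multiplication (assumed)."""
    label_tensor = [label]                              # shape (1,)
    label_vector = flatten(embed(label_tensor, table))  # shape (latent_dim,)
    return [n * e for n, e in zip(noise, label_vector)]

num_classes, latent_dim = 4, 8  # hypothetical sizes
table = make_embedding_table(num_classes, latent_dim)

rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]

input_a = generator_input(noise, 0, table)
input_b = generator_input(noise, 3, table)
```

The same noise vector yields different generator inputs for different labels, which is how the condition steers the generated icon.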

The processing in the discriminator network is comparatively simple. Inputs and conditions are handled by a fully connected layer whose output dimension matches the number of labels. After a single-layer normalization operation, the results are passed to the training process. In the formal training phase, labels are first randomly sampled to obtain the sampled labels (sampled_labels), which are used to generate new icons (gen_imgs). d_loss_real is the loss computed on the input images (imgs) with their original labels (img_labels), using the valid values (valids) as the authenticity target. d_loss_new is the loss computed on the newly generated icons (gen_imgs) with the sampled labels (sampled_labels), using the false value (fake) as the authenticity target.
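The two discriminator losses named above can be sketched as follows. The sketch assumes that each loss is the sum of a binary cross-entropy on the authenticity output and a cross-entropy on the label output, and that the two losses are averaged into a single value; neither choice is stated in the text. All probabilities are hypothetical, and `batch_loss` is an illustrative helper.

```python
import math

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy on the authenticity output."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def label_ce(class_probs, label, eps=1e-12):
    """Cross-entropy on the label output for one icon."""
    return -math.log(max(class_probs[label], eps))

def batch_loss(validity_probs, class_probs, validity_targets, labels):
    """Mean over the batch of authenticity loss plus label loss."""
    per_icon = [
        bce(p, t) + label_ce(cp, y)
        for p, cp, t, y in zip(validity_probs, class_probs, validity_targets, labels)
    ]
    return sum(per_icon) / len(per_icon)

# Hypothetical batch of two icons with three label classes.
valids, fake = [1, 1], [0, 0]
img_labels, sampled_labels = [0, 1], [2, 0]

# Discriminator outputs for real icons (imgs) and generated icons (gen_imgs).
d_loss_real = batch_loss([0.9, 0.8], [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
                         valids, img_labels)
d_loss_new = batch_loss([0.2, 0.1], [[0.3, 0.3, 0.4], [0.6, 0.3, 0.1]],
                        fake, sampled_labels)

d_loss = 0.5 * (d_loss_real + d_loss_new)  # averaging is an assumption
```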
