Simulated annealing algorithm (SA)

The Simulated Annealing Algorithm (SA) serves as a global optimization methodology, drawing inspiration from the annealing process observed in metallurgy. As a metaphorical mimicry of cooling material to attain a state of minimum energy, this algorithm endeavors to secure the best possible solution to a given problem through a progressive refinement of a candidate solution from a pool of potential solutions. Figure 2 illustrates the flow chart of the SA algorithm.

The underlying principle of this algorithm lies in its iterative nature, where it initiates from an initial solution and progressively improves it by generating a sequence of neighboring solutions. During each iteration, the algorithm judiciously assesses the objective function of both the current solution and its neighboring counterparts. Subsequently, the algorithm deliberates on accepting or rejecting these neighboring solutions by a probability distribution, influenced by the prevailing temperature. Over the course of its execution, the temperature gradually diminishes, affording the algorithm the capacity to overcome local minima and efficiently explore the expansive search space.

Consonant with the principle of simulated annealing, wherein cooling facilitates minimizing material energy, the objective function in the optimization context equates to the role of energy. By repeatedly traversing through a sequence of candidate solutions, the algorithm endeavors to discern the state of minimum energy, effectively resolving the optimization problem at hand.

The algorithm’s calculation process unfolds as follows:

Step 1—Initialization: Commence by selecting an initial solution, alongside setting parameters, including the initial temperature T, cooling rate α, and a specified stopping criterion. The initial solution may be derived through random generation or heuristic methods. Temperature T and cooling rate α serve as pivotal controls governing the extent of search space exploration.

Step 2—Iteration: Embark on the iterative process, encompassing the following steps (a-e), until the stipulated stopping criterion is satisfied:

-

a.

Employ the perturbation mechanism to generate a neighboring solution. These neighboring solutions emerge by minutely altering the current solution, with the perturbation mechanism tailored to the specifics of the problem at hand.

-

b.

Calculate the variation in the objective function’s value, denoted as ΔE, representing the disparity between the objective function value of the new solution and that of the current solution.

-

c.

If ΔE is negative (i.e., the new solution exhibits improvement), unequivocally accept the new solution as the incumbent one for the subsequent iteration.

-

d.

Conversely, if ΔE is positive (i.e., the new solution proves to be suboptimal), acceptance of the new solution transpires probabilistically based on the function P(ΔE, T) = exp(-ΔE/T). This implies that, contingent upon the existing temperature and the degree of change in the objective function’s value, a new solution may be embraced, even if it falls short of the current solution.

-

e.

Progressively update the temperature through the cooling schedule: T = α * T. This serves to lower the temperature, facilitating the forthcoming iteration while orchestrating the exploration of the search space.

Step 3—Stopping Criterion: The algorithm terminates upon meeting the designated stopping criterion, which may be contingent upon factors such as the number of iterations, the temperature reaching a predefined threshold, or other specified criteria.

The simulated annealing algorithm demonstrates prowess in resolving intricate combinatorial optimization problems, distinguished by their vast search space and the existence of numerous local minima. The algorithm’s ability to transcend local minima by entertaining suboptimal solutions, as governed by the current temperature, combined with the gradual cooling of temperature, fosters a more efficient and expansive exploration of the search space.

Genetic algorithm (GA)

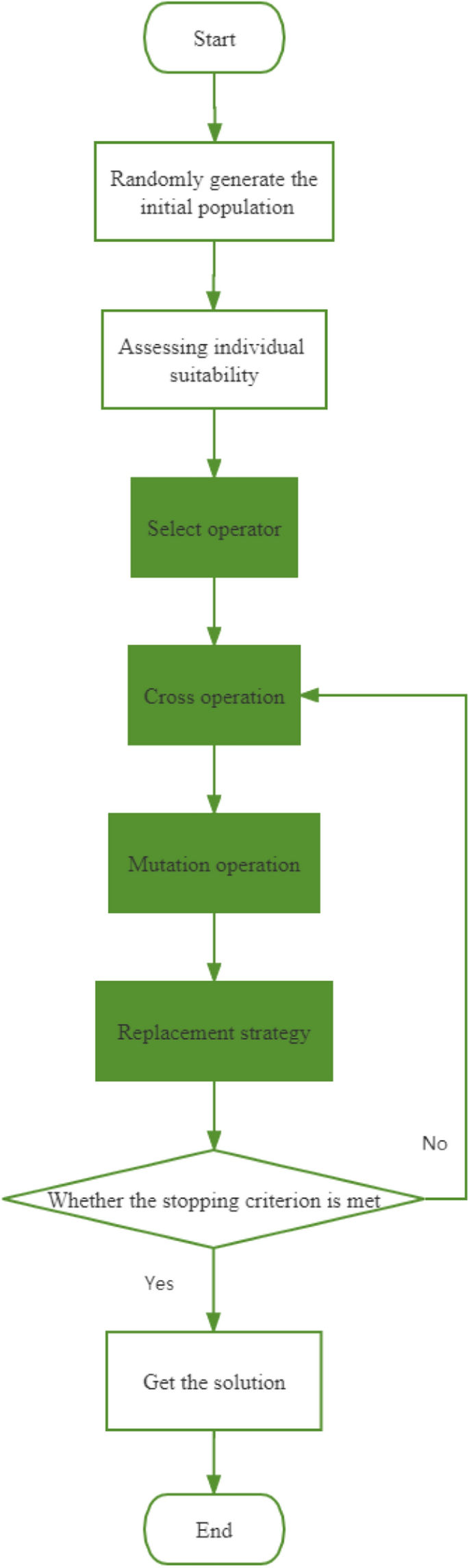

The Genetic Algorithm (GA) belongs to the class of optimization algorithms, drawing inspiration from natural selection and genetic processes. The algorithm operates upon a population of candidate solutions, symbolized as chromosomes, and progressively evolves by employing selection, crossover, and mutation operators. The flowchart illustrating the GA algorithm is presented in Fig. 3.

Step 1: At the outset, the GA algorithm generates an initial population of candidate solutions, which may be derived either randomly or through heuristic techniques. Each candidate solution finds representation as a chromosome, typically manifesting as a string of binary numbers. The population size is purposefully set to be sufficiently ample, affording ample diversity within the search space.

Step 2: The suitableness of each chromosome within the population is assessed by computing the value of the objective function for the corresponding solution. The fitness function, inherently contingent upon the specific problem, serves to gauge how effectively the solution addresses the optimization challenge.

Step 3: The selection operator comes into play, acting to elect parent chromosomes for reproduction, predicated on their respective fitness levels. Common selection methods encompass roulette selection and tournament selection. In roulette selection, each chromosome is assigned a probability of selection proportionate to its fitness. Alternatively, in tournament selection, a subset of chromosomes is randomly selected from the population, and the most suitable chromosome emerges as the designated parent.

Step 4: Crossover, signifying the process of amalgamating genetic material from two parental chromosomes to engender offspring, is executed. The crossover operation entails selecting a junction point on a chromosome and orchestrating the exchange of genetic material between the two parents. As a result, two new offspring chromosomes materialize, inheriting genetic information from both parents.

Step 5: The mutation operator is introduced to introduce random changes to the offspring’s chromosomes. To prevent excessive perturbation to the genetic material, mutations are typically applied with low probability. Mutation involves random alterations to a few genes within a chromosome.

Step 6: The replacement operator takes charge of substituting the current population with the newly generated offspring. The replacement strategy may adopt a generational approach, wherein the entire population is supplanted by offspring, or a steady-state approach, wherein only a segment of the population undergoes replacement.

The algorithm perpetuates its iterative cycle, repeatedly traversing through steps 2 to 6, until a stipulated stopping criterion is met, such as reaching the maximum number of generations or attaining the targeted fitness level. The algorithm continues its iterative cycle, cycling through steps 2 to 6, until it meets a predetermined stopping criterion, such as reaching the maximum number of generations or achieving the desired fitness level. Ultimately, the final solution materializes as the chromosome manifests the highest fitness within the conclusive population.

GA algorithms effectively operate on a population of candidate solutions and leverage the selection, crossover, and mutation operators to engender progressively enhanced solutions. Through the iterative process encompassing population generation, fitness evaluation, parent selection, offspring generation, random alterations, and population replacement, the algorithm seeks to identify the optimal solution until the specified stopping criterion is fulfilled.

Hybrid simulated annealing and genetic algorithm (SA–GA)

The Hybrid Simulated Annealing and Genetic Algorithm (SA–GA) entails the strategic amalgamation of the Genetic Algorithm (GA) and the Simulated Annealing Algorithm (SA). Within this innovative framework, GA is harnessed to optimize the SA, effectively capitalizing on the strengths of each method. The GA serves as a global exploration tool, adept at navigating the search space on a broader scale, while SA assumes the role of a local search method, skillfully annealing the offspring generated by GA. In essence, GA is entrusted with the task of generating offspring through crossover and mutation, while SA takes the reins in further optimizing these offspring before they are considered for selection and eventual inclusion in the succeeding generation.

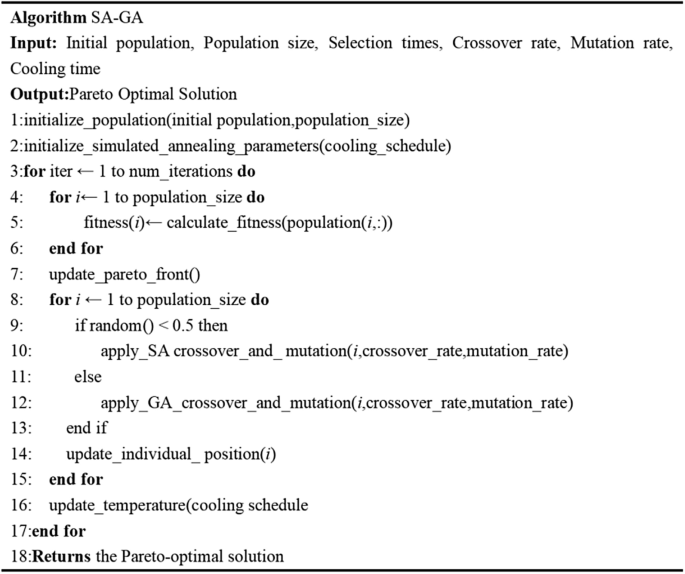

The overarching objective of SA–GA centers on elevating the algorithm’s overall performance through the fusion of both methodologies. GA adroitly embarks on exploring the expansive search space, upholding a diverse cohort of candidate solutions, whereas SA excels in fine-tuning solutions and adroitly circumventing local optima. By harmonizing these two approaches, SA–GA endeavors to converge more effectively toward the true Pareto-optimal front, simultaneously preserving a diverse ensemble of solutions. The pseudocode illustrating the SA–GA methodology is aptly depicted in Fig. 4, and the specific steps of the algorithm are elucidated as follows:

SA–GA algorithm pseudo code.

Step 1: Initialization.

Generate an initial population of candidate solutions using either random or heuristic methods. Each solution comprises a combination of decision variables, encompassing the replenishment quantity from the supplier to the PI-hub (x_ij), the replenishment quantity from the PI-hub to the retailer (y_jk), and the source selection decisions for replenishment (z_i and w_jk).

Set the initial temperature and cooling schedule parameters, critical components within the Simulated Annealing (SA) phase.

Define the Genetic Algorithm (GA) parameters, including the population size, crossover and mutation rates, and termination criteria.

Step 2: Simulated Annealing (SA) Phase.

Commence with an initial solution for the Virtual Workbench (VW).

Proceed with a predefined number of SA iterations.

Execute a local search within the vicinity of the current solution by perturbing the decision variables. This entails adjustments to the replenishment quantities from the supplier to the PI-center (x_ij) or from the PI-center to the retailer (y_jk), and/or modifications to the source selection decisions (z_i and w_jk).

Evaluate the objective function for the new solution, incorporating the total cost to be minimized. The objective function accounts for inventory holding cost, ordering cost, transportation cost, and penalty cost, and is formulated as follows:

$$\begin{aligned} & {\text{Minimize }}\Sigma \left( {\text{i},\text{j}} \right) \, [\text{C}\_{\text{x}} \, *{\text{ distance }}* \, \text{x}\_{\text{ij}}\, + \,\text{C}\_{\text{o}} \, * \, \text{z}\_{\text{i}} \\ & \quad + \text{C}\_{\text{t}} \, * \, \left( \text{x}\_{\text{ij}}\, + \,\Sigma \left( \text{k} \right) \, \text{y}\_{\text{jk}} \right)\, + \,\text{C}\_{\text{s}} \, * \, \left( \text{w}\_{\text{jk}} \, *{\text{ Demand}}\_{\text{k}} \right)]. \\ \end{aligned}$$

In this context, C_x represents the unit transportation cost from the supplier to the PI-hub or between PI-hubs, distance denotes the distance between each node in the PI network, C_o signifies the ordering cost for replenishment from the supplier or PI-hub, C_t reflects the transportation cost per unit distance, C_s denotes the unit inventory holding cost of the retailer, and Demand_k signifies the demand of retailer k.

Accept or reject new solutions based on a probability function contingent upon the target value and the current temperature. The probability of acceptance is governed by the Metropolis criterion, conventionally employed within the SA algorithm.

Update the temperature by the cooling schedule, progressively diminishing the likelihood of accepting suboptimal solutions.

Reiterate the SA iterations until the temperature reaches the specified stopping criterion.

Step 3: Genetic Algorithm (GA) Phase.

Evaluate the fitness of each solution within the current population by computing the objective function value.

Employ selection operators (e.g., tournament selection or roulette selection) to elect parents for replication. The selection probability of each solution is contingent upon its fitness value.

Conduct crossover and mutation operations to engender offspring solutions. Crossover engenders new solutions by exchanging genetic information between the selected parent solutions, while mutation introduces small random changes to the solutions.

Assess the fitness of the offspring solution utilizing the objective function.

Implement elitism, integrating the best-performing solutions from the current population into the next generation to preserve their excellence.

Replace the current population with the descendant population.

Continue the genetic algorithm operation until a termination condition is met, such as reaching the maximum number of generations or achieving convergence.

Step 4: Termination.

Examine the termination conditions of the SA–GA algorithm, considering factors such as attaining the maximum number of iterations or achieving convergence of the solution. This determination can be predicated on the rate of improvement or stability of the objective function value.

If the termination condition is met, proceed to the next step. Otherwise, return to Step 2.

Step 5: Solution Extraction.

Extract the best solution from the final population, epitomizing the optimization of the replenishment process within a PI environment, to minimize the total cost while satisfying the retailer’s inventory requisites.

Throughout the SA–GA algorithm, the decision variables x_ij, y_jk, z_i, and w_jk undergo manipulation and updating at each iteration to navigate the solution space. The objective function is rigorously evaluated to direct the search for an enhanced solution, pursuing the minimization of the total cost while simultaneously fulfilling the retailer’s inventory requirements.

Tyler Fields is your internet guru, delving into the latest trends, developments, and issues shaping the online world. With a focus on internet culture, cybersecurity, and emerging technologies, Tyler keeps readers informed about the dynamic landscape of the internet and its impact on our digital lives.