Design philosophy

Whether used as small stand-alone applications or as edge nodes within larger systems, edge devices call for hardware with unprecedentedly low latency and low power. In principle, neuromorphic intelligence is well suited to edge computing because it perceives and processes information sparsely. In this work, we propose a neuromorphic system that exploits these distinctive strengths of neuromorphic intelligence through top-level co-design of hardware, algorithms, software, and applications. Our top-level design derives from two well-known principles in computational neuroscience. First, the human brain integrates visual information from the eyes, which perceive scenes sparsely, and only a fraction of neurons in the brain respond to visual stimuli. Second, even when the eyes perceive a great deal of information, global high-level processing mechanisms in the brain, such as attention, allow it to ignore part of that information and thereby directly reduce the processing burden.

Eye-brain integrated hardware design

Speck is a sensing-computing neuromorphic SoC built on the spike-based sparse computing paradigm. The DVS mimics biological visual pathways: it asynchronously and sparsely generates events (spikes with address information) when scene brightness changes, which drops redundant data at the source. The neuromorphic chip activates only a portion of its spiking neurons to perform computations when an input event occurs (i.e., it is event-driven). As low-level abstractions of the human eye and brain, the sparse sensing of a single DVS pixel and the sparse computing of a single spiking neuron in the neuromorphic chip are the basic building blocks of Speck's structure and function. Beyond physically combining the DVS and the neuromorphic chip, three key designs enable Speck's eye-brain integrated processing: (a) the DVS-chip interface system, the basis of high-speed and efficient data transmission; (b) the SNN convolution core, the basis of low-latency and low-power machine intelligence; and (c) the fully asynchronous logic design, the basis of Speck's high-speed processing and low resting power.

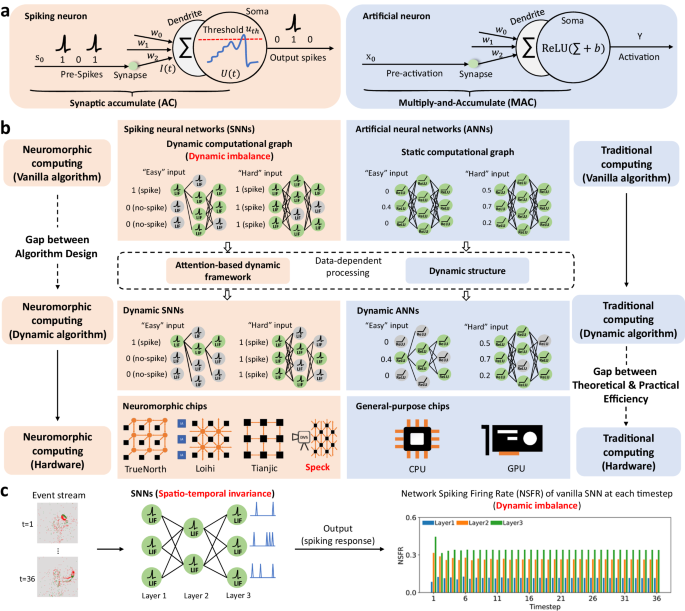

High-level brain mechanism mapping

The dynamic response mechanism is a high-level abstraction of human brain function that requires global regulation of neuron spike firing in each brain region according to the stimulus; for example, the human brain allocates attention according to the importance of the input. Because sensing and computing in Speck are asynchronous, spike-based, and event-driven, at least one simple dynamic response can be realized: masking the stream of unimportant events for a period of time so that the neuromorphic chip performs no computation during this temporal window.
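The simplest dynamic response above, masking unimportant events for a temporal window, can be sketched as a software event filter. This is an illustrative model only, not Speck's implementation; the `Event` record, the `is_unimportant` callback (standing in for whatever decides importance), and the `mask_window_us` parameter are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass(frozen=True)
class Event:
    t_us: int      # timestamp in microseconds
    x: int
    y: int
    polarity: int  # 1 = ON, 0 = OFF


def masked_stream(
    events: Iterable[Event],
    is_unimportant: Callable[[Event], bool],
    mask_window_us: int,
) -> Iterator[Event]:
    """Yield only events that fall outside an active masking window.

    Hypothetical policy: when an event is judged unimportant, it and all
    following events are dropped until mask_window_us has elapsed, so the
    downstream processor performs no computation in that window.
    """
    mask_until = -1  # no masking window is active initially
    for ev in events:
        if ev.t_us < mask_until:
            continue  # inside the masking window: drop without processing
        if is_unimportant(ev):
            mask_until = ev.t_us + mask_window_us  # open a new window
            continue
        yield ev
```

Because dropped events never reach the consumer of the generator, no downstream work is spent on them, which mirrors the intent of the hardware mechanism.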

Taken together, the low-level abstraction of the human eye and brain is the basis of Speck's high-speed, low-latency, and low-power computing. The high-level attention abstraction is compatible with, and builds on, the low-level abstraction, further improving computational efficiency. This integration of multi-level brain mechanisms follows from the design philosophy of Speck: brain-like, spike-based sparse sensing and computing.

Chip design

We define Speck as efficient, medium-scale neuromorphic sensing-computing edge hardware. It has four main components (Fig. 2g): a DVS with a spatial resolution of 128 × 128, a sensor pre-processing core, nine SNN cores that implement combined convolution and pooling layers or fully connected SNN layers, and a readout core. All components are connected through a universal event router. Combining such a low-latency, high-dynamic-range, sparse sensor with an event-driven spiking Convolutional Neural Network (sCNN) processor that excels at real-time, low-latency processing on a single SoC is a natural technological step. To complement the architectural advantages of always-on sparse sensing and computation, the SoC is built in a fully asynchronous fashion. The asynchronous data flow architecture provides low-latency, high-throughput processing when sensory input demands it, while inherently shifting to a low-power idle state when sensory input is absent. After thoroughly investigating and verifying many edge computing vision application scenarios, we designed Speck to comprise 328K neuron units spread over nine high-speed, low-latency, and low-power SNN cores.

Sensing-computing coupling

Current technologies use USB connections or other interfaces to connect the vision sensor to neuromorphic chips or processors. Moving data across chip boundaries and over long distances increases latency and significantly increases the power dissipated for robust signal transmission. Advanced CIS-CMOS processes can couple dedicated high-quality vision sensors (vision-optimized fabrication process) and neural network compute chips (logic-optimized fabrication process) in a single package35. By instead combining sensor and processing on a single die as a smart sensor, we significantly lower unit production costs and save the energy otherwise spent on high-speed, low-latency data communication, because the raw sensory data never has to leave the chip.

The sensor

The sensor of Speck consists of 128 × 128 independently operating event-based vision pixels, also called dynamic vision pixels20. In contrast to frame-based cameras, these pixels encode the incident light intensity temporally on a logarithmic intensity scale, also known as Temporal Coding (TC). In other words, the sensor transmits only novel information in the field of view, significantly sparsifying the data stream and ceasing transmission when nothing changes visually. Each pixel is attached to a single handshake buffer so that it can operate fully independently of the arbitration readout system. The arbitration is built from one arbiter tree for columns and one for rows36,37. The event address is encoded from the acknowledge signals of the arbitration trees and handed off as an Address Event Representation (AER) word to the event pre-processing block. Each event is encoded as a 1-bit polarity (ON: increasing illumination; OFF: decreasing illumination), a 7-bit x-address, and a 7-bit y-address, for a total of 15 bits per pixel event. A complete arbitration process with ID encoding takes approximately 2.5–7.5 ns per readout. The arbitration endpoint and buffer in the pixel are optimized to limit the transistor count, resulting in a fill factor of 45% (front illumination) for each pixel. The refractory period of each pixel is around 500 μs. In the worst case, where nearly 100% of the pixels are active, the data to be transmitted during one refractory period is around 250 Kb (128 × 128 × 15 bit), i.e., a data rate of about 500 Mb/s. Under typical conditions, 10% to 20% of the pixel area is estimated to be active, giving a data rate of 50 to 100 Mb/s. Compared with power-hungry high-speed, low-latency off-chip communication, transmitting this sparse data stream on-chip significantly reduces power consumption, in proportion to the data rate.
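The 15-bit event format and the bandwidth estimate above can be checked with a short sketch. The bit layout used here (polarity in the most significant bit, then x, then y) is an assumption for illustration; the text specifies only the field widths. The data-rate helper assumes every active pixel fires once per 500 μs refractory period, as in the worst-case estimate.

```python
POL_BITS, X_BITS, Y_BITS = 1, 7, 7
EVENT_BITS = POL_BITS + X_BITS + Y_BITS  # 15 bits per pixel event


def pack_event(polarity: int, x: int, y: int) -> int:
    """Pack an event into a 15-bit word (assumed layout: [pol | x | y])."""
    if polarity not in (0, 1) or not (0 <= x < 128 and 0 <= y < 128):
        raise ValueError("field out of range")
    return (polarity << (X_BITS + Y_BITS)) | (x << Y_BITS) | y


def unpack_event(word: int) -> tuple[int, int, int]:
    """Inverse of pack_event: return (polarity, x, y)."""
    y = word & ((1 << Y_BITS) - 1)
    x = (word >> Y_BITS) & ((1 << X_BITS) - 1)
    polarity = (word >> (X_BITS + Y_BITS)) & 1
    return polarity, x, y


def data_rate_bps(active_fraction: float, width: int = 128, height: int = 128,
                  refractory_s: float = 500e-6) -> float:
    """Bits/s if a fraction of pixels each emit one event per refractory period."""
    bits_per_period = active_fraction * width * height * EVENT_BITS
    return bits_per_period / refractory_s
```

With full activity this gives 491.52 Mb/s, consistent with the ~500 Mb/s worst case; 10% and 20% activity give about 49 and 98 Mb/s.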

DVS pre-processing core

To adapt the raw AER event stream from the sensor to the requirements of the sCNN, a pre-processing stage is required. The image may need to be flipped, rotated, or cropped if only a Region of Interest (ROI) is needed; a lower image resolution might be required; or the polarity may be ignored. To accomplish this, the sensor event pre-processing pipeline consists of multiple stages that enable the following adjustments to the sensor event stream: polarity selection (ON only, OFF only, or both), region-of-interest adjustment, image mirroring in x, y, or both axes, pooling in the x and y coordinates separately or together, shifting the image origin to another location by adding an offset, etc. In addition, noisy events from the DVS can optionally be filtered out using fully digital low-pass or high-pass filters. The output of the pre-processing core has at most two channels, each channel indicating whether an event belongs to the ON or OFF category. After pre-processing, the events are transmitted to the Network on Chip (NoC).
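A software model of several of the pre-processing stages listed above may help make their effect concrete. The stage order, the parameter names, and the `PreprocConfig` class are hypothetical; the hardware's configuration registers are not described here, and rotation and the noise filters are omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PreprocConfig:
    keep_polarity: str = "both"                          # "on", "off" or "both"
    roi: tuple[int, int, int, int] = (0, 0, 128, 128)    # x0, y0, x1, y1 (x1, y1 exclusive)
    mirror_x: bool = False                               # flip the x coordinate
    mirror_y: bool = False                               # flip the y coordinate
    pool_x: int = 1                                      # pooling factor in x
    pool_y: int = 1                                      # pooling factor in y
    offset: tuple[int, int] = (0, 0)                     # origin shift added at the end
    merge_polarity: bool = False                         # True: single output channel


def preprocess(polarity: int, x: int, y: int,
               cfg: PreprocConfig) -> Optional[tuple[int, int, int]]:
    """Return (channel, x, y) for the sCNN input, or None if the event is dropped."""
    if cfg.keep_polarity == "on" and polarity != 1:
        return None
    if cfg.keep_polarity == "off" and polarity != 0:
        return None
    x0, y0, x1, y1 = cfg.roi
    if not (x0 <= x < x1 and y0 <= y < y1):
        return None                          # outside the region of interest
    x, y = x - x0, y - y0                    # coordinates relative to the ROI
    if cfg.mirror_x:
        x = (x1 - x0 - 1) - x
    if cfg.mirror_y:
        y = (y1 - y0 - 1) - y
    x, y = x // cfg.pool_x, y // cfg.pool_y  # pooling by coordinate division
    x, y = x + cfg.offset[0], y + cfg.offset[1]
    channel = 0 if cfg.merge_polarity else polarity  # at most two channels
    return channel, x, y
```

Dividing coordinates is enough to model pooling here because an event carries no value beyond its address; downstream neurons simply receive events from a coarser grid.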

Network on chip

The NoC router follows a star topology. The routing system is non-blocking for any feed-forward network model and routes events via AER connections. The mapping system allows one convolution core to send data to up to two other cores, and one core to receive events from multiple sources without address superposition, with up to 1024 incoming feature channels. On every incoming channel, the routing header of each AER packet is read and the payload is directed to its destination. This is done by establishing parallel physical routing channels that do not intersect for any network topology without recurrence, which prevents skew caused by other connections and deadlocks caused by loops inside the pipeline structure. The routing header is stripped from the word during transport, and the payload is delivered to its intended destination.
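The routing constraints above (at most two destinations per core, no recurrence, header stripped on delivery) can be captured in a behavioral sketch. The names `RoutedEvent`, `validate_routes`, and `route` and the dictionary-based route table are hypothetical; this models the rules, not the router circuitry.

```python
from dataclasses import dataclass

MAX_DESTINATIONS = 2


@dataclass(frozen=True)
class RoutedEvent:
    dest_core: int                 # routing header
    payload: tuple[int, int, int]  # (channel, x, y)


def validate_routes(routes: dict[int, list[int]]) -> None:
    """Reject configurations with too many destinations or any recurrence."""
    for src, dests in routes.items():
        if len(dests) > MAX_DESTINATIONS:
            raise ValueError(f"core {src} has more than {MAX_DESTINATIONS} destinations")
    state: dict[int, int] = {}  # 1 = on the current DFS path, 2 = finished

    def visit(node: int) -> None:
        state[node] = 1
        for nxt in routes.get(node, []):
            if state.get(nxt) == 1:
                raise ValueError("recurrent connection: only feed-forward graphs allowed")
            if nxt not in state:
                visit(nxt)
        state[node] = 2

    for node in routes:
        if node not in state:
            visit(node)


def route(src_core: int, payload: tuple[int, int, int],
          routes: dict[int, list[int]]) -> dict[int, tuple[int, int, int]]:
    """Emit one packet per destination, then strip the header on delivery."""
    packets = [RoutedEvent(d, payload) for d in routes.get(src_core, [])]
    return {p.dest_core: p.payload for p in packets}
```

The depth-first search flags any cycle in the core graph, which corresponds to the recurrent topologies for which non-intersecting routing channels are not guaranteed.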

SNN core

A conventional CNN performs frame-based convolution, so the camera's full frame must be available before the convolution can start. In contrast, an event-driven sCNN does not operate on full frames: for every arriving pixel event, the convolution is computed only at that pixel position. For a given input pixel, all output neurons associated with its convolution are traversed, instead of a kernel being swept pixel by pixel over a complete image. An incoming event includes the x and y coordinates of the active pixel as well as the input channel c it belongs to, as depicted in Fig. 2i and Fig. S4. The event-driven convolution is implemented step by step in Speck with the following components (Fig. S5):

a. Zero padding: if needed, the image field (i.e., the address space of the events) is expanded by adding pixels at the borders so that the layer size is retained after the convolution.

b. Kernel anchor and address sweep: in the kernel mapper, the event is first mapped to an anchor point in both the output neuron space and the kernel space (Fig. S4). Using this anchor, the kernel, represented by an address, is linked to an address point in the output space, and the referenced kernel is swept over the incoming pixel coordinate, with the kernel address and the neuron address swept inversely to each other. For every channel in the output neuron space, the kernel anchor address is incremented so that the kernel for the new output channel is used, and the sweep over the kernel is repeated. If a stride is configured in the horizontal or vertical direction, the horizontal and vertical sweeps are adjusted to skip kernel positions accordingly.

c. Address space compression: to use the limited memory efficiently, the verbose kernel and neuron addresses are compressed to avoid unused memory locations. Depending on the configuration, the address space is packed so that it contains no avoidable gaps that the configuration does not use.

d. Synaptic kernel memories: the kernel addresses are then distributed over the parallel kernel memory blocks according to the compressed addresses, and the corresponding signed 8-bit kernel weight is read. The weight and the compressed neuron address are then directed to the parallel neuron compute-in-memory-controller blocks according to the address location. Kernel positions with zero weight are skipped during reading and are not forwarded to the neuron.

e. Neuron compute units: each compute-in-memory-controller block models an Integrate and Fire (IF) neuron with a linear leak for every signed 16-bit memory word. Besides classic read and write, the memory controller supports a read-add-check_spike-write operation. Whenever the accumulated value reaches a configured threshold, an event is sent out (Fig. 2h), and the neuron state variable is written back either with the threshold subtracted or reset.

f. Bias and leak address sweep and memory: the leak (or bias) is modeled via an additional memory controller. The leak/bias controller stores an individual signed 16-bit value for every output channel map. On each time reference tick, an update event carrying this bias is sent to all active neurons of the channel map. The reference tick is supplied from off-chip and is fully user-configurable.

g. Pooling: finally, the output events pass through a pooling stage that operates on the sum pooling principle, i.e., it merges the events from 1, 2, or 4 neurons in the x and y coordinates independently.

h. Channel shift and routing: before entering the routing NoC, the channels are shifted and a prefix with routing information is added. One copy of the event is sent per destination, for up to two destinations.
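Steps a to h can be summarized in a behavioral Python sketch of one event-driven convolutional layer: an input event (c, x, y) updates only the output neurons whose receptive field contains (x, y), zero weights are skipped, and a neuron that reaches threshold emits an event and has the threshold subtracted. The function name `process_event`, the nested-list layout, and the omission of address compression, bias/leak ticks, pooling, and routing are simplifications made for illustration.

```python
def process_event(c, x, y, weights, state, threshold, stride=1, padding=0):
    """Event-driven convolution for a single input event.

    weights[k][c][ky][kx]: integer kernel weights (k = output channel)
    state[k][oy][ox]: integer membrane state of the output neurons (IF, no leak)
    Returns the list of output events (k, ox, oy) fired by this input event.
    """
    out_events = []
    n_out, kh, kw = len(weights), len(weights[0][0]), len(weights[0][0][0])
    out_h, out_w = len(state[0]), len(state[0][0])
    px, py = x + padding, y + padding          # zero padding shifts the address
    for k in range(n_out):                     # one kernel per output channel
        for ky in range(kh):                   # kernel and neuron addresses are
            for kx in range(kw):               # swept inversely to each other
                oy_s, ox_s = py - ky, px - kx  # output position times stride
                if oy_s % stride or ox_s % stride:
                    continue                   # position skipped by the stride
                oy, ox = oy_s // stride, ox_s // stride
                if not (0 <= oy < out_h and 0 <= ox < out_w):
                    continue                   # outside the output map
                w = weights[k][c][ky][kx]
                if w == 0:
                    continue                   # zero weights are not forwarded
                state[k][oy][ox] += w          # read-add
                if state[k][oy][ox] >= threshold:   # check_spike
                    state[k][oy][ox] -= threshold   # threshold subtraction, write
                    out_events.append((k, ox, oy))
    return out_events
```

With a kernel of ones in a stride × stride square and a threshold of 1, each input event reaches exactly one output neuron and produces exactly one output event, the configuration used for the per-layer latency measurement.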

Speck has nine SNN cores (layers) with different computational capabilities and memory sizes. For example, SNN core0, core1, and core2 have larger neuron memories because the first layers, which connect to the dynamic vision sensor, require more neuron states but fewer input channels. As the network gets deeper, the synaptic operation (kernel memory) requirement increases: the intermediate cores usually need a larger kernel memory to generate more output channels with different kernel filters.

Synaptic memory utilization is a key point of the presented architecture. Especially for CNN-based architectures, computing synaptic connections on the fly minimizes memory requirements, which in turn saves area and energy (for SRAM, both running and static). Compared with standard SNN hardware implementations8,10,38, our dedicated sCNN approach supports many more synaptic connections with minimal additional computation, because it stores only the kernel weights in memory and computes all the synapses that share them. As shown in Table S1, on-the-fly synaptic kernel mapping allows bigger network models to be deployed on CMOS with larger feature sizes, i.e., significantly more cost-effective fabrication technologies, while matching state-of-the-art performance. Besides serving as a convolutional layer, any SNN core can also be used as a fully connected layer with up to 64k, 32k, or 16k synaptic connections. This is generally preferred in the last stages of the SNN.
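A back-of-the-envelope comparison shows why computing synapses on the fly from shared kernels saves memory. The layer dimensions in the example are hypothetical; only the 8-bit weight width is taken from the text, and the explicit-table estimate ignores border effects and any addressing overhead.

```python
def shared_kernel_bits(c_in: int, c_out: int, k: int, weight_bits: int = 8) -> int:
    """Kernel memory when synapses are generated on the fly from shared weights."""
    return c_in * c_out * k * k * weight_bits


def explicit_synapse_bits(c_in: int, c_out: int, k: int, out_h: int, out_w: int,
                          weight_bits: int = 8) -> int:
    """Memory if every synapse of the same layer were stored individually."""
    return c_out * out_h * out_w * c_in * k * k * weight_bits
```

For a hypothetical layer with 2 input channels, 16 output channels, a 3 × 3 kernel, and a 64 × 64 output map, `shared_kernel_bits(2, 16, 3)` is 2304 bits, whereas `explicit_synapse_bits(2, 16, 3, 64, 64)` is 9,437,184 bits. The ratio, 4096, equals the number of output positions, because weight sharing stores one copy of the kernels instead of one per position.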

Readout core

Speck's readout engine allows the classification output to be obtained directly from the chip. The output of the last SNN core can be connected to the readout engine; unlike the connections between the chip's other cores (layers), only one SNN core can be connected to the readout layer in a given network configuration. The readout layer can simultaneously evaluate 15 classes, connected to 15 different output channels of the last SNN core. Each channel has a parallel processing engine that averages spike counts over 1, 16, or 32 cycles of a slow clock operating between 1 kHz and 50 kHz. In addition, an optional asynchronous internal clock, generated from the DVS event activity, can be used as the timing tick for the spike-count averaging. After the average of each spiking channel is computed, it is compared with a global threshold shared by all 15 readout engines. Among the averages that exceed this threshold, the one with the maximum value is selected as the classification winner, and the index of the winning neuron (spiking channel) is sent directly to the readout pins. When a network requires more than 15 classes, the readout layer can be bypassed, and the spike information of up to 1024 output feature maps can be read from the output of the last SNN core. In that case, an external processor is required to average the spikes over time and select the class.
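The readout decision described above (per-channel averaging of spike counts, a shared threshold, and selection of the maximum among channels above threshold) can be modeled in software. The `Readout` class, the sliding window over per-tick counts, treating ticks before the window fills as zero, and returning `None` when no channel passes the threshold are all assumptions made for illustration.

```python
from collections import deque
from typing import Optional

N_CLASSES = 15


class Readout:
    """Hypothetical software model of the 15-channel readout engine."""

    def __init__(self, window: int = 16, threshold: float = 1.0):
        if window not in (1, 16, 32):
            raise ValueError("window must be 1, 16 or 32 ticks")
        self.threshold = threshold  # global threshold shared by all channels
        # Sliding window of per-tick spike counts for each channel.
        self.history = [deque(maxlen=window) for _ in range(N_CLASSES)]
        self.current = [0] * N_CLASSES

    def spike(self, channel: int) -> None:
        """Count one output spike from the last SNN core on `channel`."""
        self.current[channel] += 1

    def tick(self) -> Optional[int]:
        """Close one slow-clock period and return the winning channel, if any."""
        for ch in range(N_CLASSES):
            self.history[ch].append(self.current[ch])
            self.current[ch] = 0
        # Divide by the full window length: ticks not yet seen count as zero.
        averages = [sum(h) / h.maxlen for h in self.history]
        above = [(avg, ch) for ch, avg in enumerate(averages) if avg > self.threshold]
        if not above:
            return None
        return max(above)[1]  # channel with the largest average
```

For example, with `window=1` and `threshold=0.5`, two spikes on channel 3 and one on channel 7 followed by `tick()` select channel 3.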

Asynchronous logic design methodology

The DVS has μs-level temporal resolution owing to its asynchronous visual perception paradigm. To complement the architectural advantages of sparse sensing and computing, the neuromorphic chip in Speck is built in a fully asynchronous fashion. The asynchronous data flow architecture provides low latency and high processing throughput when sensory input demands it, and immediately switches to a low-power idle state when sensory input is absent. This is achieved by building on the well-established Pre-Charge Full Buffer (PCFB) low-latency pipeline designs39. Because each component is naturally gated in this design approach, no complex or slow wake-up procedures need to be implemented, which reduces running power consumption and provides an always-on feature with no additional latency. In asynchronous chips, data flow is driven by events rather than by rising or falling clock edges. Therefore, during idle periods there is no switching at the output of the DVS pixel array and no information is routed to the chip, so no running power is consumed in any processing unit other than the readout layer. However, static power is still consumed by the DVS pixel array, and leakage currents flow in logic and memory; these are reduced by the aforementioned architectural resource optimizations and by optional independent voltage scaling of logic and memory.

For our asynchronous design flow, we implemented an extensive library of asynchronous data flow templates. Each function in the library uses a 4-phase handshake and Quasi-Delay-Insensitive (QDI) Dual-Rail (DR) data encoding, which makes it robust over an extended range of supply voltages, operating conditions, and temperatures17,40. The main functions implemented in the library are Latch/Buffer, Compose, Splits, Non-conditional Splits, Conditional Pass, and Merge40. At the last stage of the asynchronous chain, the data to be monitored are serialized to reduce the pin count; before serialization, the dual-rail encoded information is converted to Bundled Data (BD) encoding. Performance is ensured by hierarchical, detailed automatic floor planning that uses extensive guides and fences for the individual components and pipeline stages.
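The dual-rail code and its conversion to bundled data can be illustrated behaviorally. In dual-rail encoding, each bit uses two wires (t, f): (1, 0) encodes 1, (0, 1) encodes 0, and (0, 0) is the spacer between tokens in a 4-phase handshake. A word is valid only when every bit has exactly one rail high, so the receiver can detect completion from the data itself; bundled data instead uses one wire per bit plus a separately timed request. The helper names below are hypothetical and the sketch ignores circuit-level details.

```python
from typing import Optional

SPACER = (0, 0)  # neutral (return-to-zero) state between data tokens


def dr_encode(value: int, width: int) -> list[tuple[int, int]]:
    """Encode an integer as a list of (t, f) rail pairs, LSB first."""
    return [((value >> i) & 1, 1 - ((value >> i) & 1)) for i in range(width)]


def dr_complete(word: list[tuple[int, int]]) -> bool:
    """Completion detection: every bit has exactly one rail asserted."""
    return all(t + f == 1 for t, f in word)


def dr_to_bundled(word: list[tuple[int, int]]) -> Optional[int]:
    """Convert a complete dual-rail word to a single-rail (bundled-data) value."""
    if not dr_complete(word):
        return None  # data not yet valid: no request would be issued
    return sum(t << i for i, (t, _) in enumerate(word))
```

For instance, `dr_encode(0b1011, 4)` yields `[(1, 0), (1, 0), (0, 1), (1, 0)]`, which converts back to 11, while a word with any bit still in the spacer state is reported as incomplete.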

Chip fabrication

Speck was fabricated in a 65 nm low-power 1P10M CMOS logic process. The die layout is shown in Fig. S1. The total die size is 6.1 mm × 4.9 mm. The whole pixel array, including the dummy rows/columns and the peripheral bias circuitry, occupies an area of 2.8 mm × 3 mm. The vision sensor consists of 128 × 128 DVS pixels using n-well photodiodes with a pixel pitch of 20 μm and a fill factor of close to 45%41. The chip's logic is implemented entirely with foundry standard cells, which also makes it easy to port the logic implementation to smaller or different technology nodes. The SNN cores and the DVS pre-processing circuitry use a total of 7.8 Mb of foundry Static Random-Access Memory (SRAM). Each SNN core has separate memory for its local computing unit, i.e., for convolutional kernels and neuron states; different cores do not share memory. The memory size distribution follows the characteristics of standard CNN structures, which typically require more neuron memory in shallow layers and more kernels in deeper layers. For the largest supported input size of 128 × 128, the largest neuron and synaptic memories of an SNN core supporting this input size are 1.05 Mb and 0.13 Mb, respectively. In addition, each SNN core has a 16 Kb bias memory for independent channel-wise bias configuration. Each SNN core supports up to 1024 fan-in/fan-out (input/output) channels, and the kernel size can be set flexibly up to 16 × 16. The bandwidth of a core is defined as the maximum number of Synaptic Operations (SynOps) per second that a layer can process without incurring additional latency, where a SynOp comprises all the steps in the life cycle of a spike arriving at a layer until it updates the neuron states and, if applicable, generates a spike.
SNN core0, the first SNN layer and typically the one receiving the most events, implements a fully symmetric parallel computation path that raises its throughput to 100 M SynOps/s; the bandwidth of the other SNN cores is 30 M SynOps/s. Owing to the properties of sCNNs and LIF neuron models, the activity of the sparse sensor event stream is further reduced by every processing layer, fully exploiting the always-on, compute-on-demand nature of this architecture. A summary of Speck's performance, compared with existing neural network platforms, is given in Table S1.
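To relate the SynOp bandwidths to input event rates, note that one input event to a convolutional core triggers at most one SynOp per kernel position per output channel. The example layer used below (3 × 3 kernel, 16 output channels, all weights non-zero, stride 1, event away from the borders) is hypothetical; only the two bandwidth figures come from the text.

```python
CORE0_SYNOPS_PER_S = 100e6  # SNN core0 bandwidth
OTHER_SYNOPS_PER_S = 30e6   # bandwidth of the other SNN cores


def synops_per_event(kernel: int, c_out: int) -> int:
    """Worst-case SynOps triggered by one input event (stride 1, no zero weights)."""
    return kernel * kernel * c_out


def max_event_rate(synops_per_s: float, kernel: int, c_out: int) -> float:
    """Input events/s a core can sustain before its SynOp budget is exhausted."""
    return synops_per_s / synops_per_event(kernel, c_out)
```

For this layer, one event costs 144 SynOps, so core0 saturates near 0.69 M events/s and a 30 M SynOps/s core near 0.21 M events/s; 100 M SynOps/s also corresponds to an average of 10 ns per SynOp. Zero weights, which are skipped, and the decreasing activity in deeper layers both lower the actual load.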

Chip performance evaluation

Here we evaluate Speck's performance in terms of power and latency. Speck is an event-driven asynchronous chip whose power consumption and output latency vary with the number of SynOps.

Power evaluation

The total power consumption of Speck comprises four power rails:

$$P_{\mathrm{total}}=\underbrace{P_{\mathrm{pixel,analog}}+P_{\mathrm{pixel,digital}}}_{P_{\mathrm{DVS}}}+\underbrace{\overbrace{P_{\mathrm{pre}}+P_{\mathrm{NoC}}+P_{\mathrm{SNN}}+P_{\mathrm{readout}}}^{P_{\mathrm{Logic}}+P_{\mathrm{RAM}}}}_{P_{\mathrm{processor}}},$$

(2)

where \(P_{\mathrm{DVS}}\) is the power of the DVS (see Fig. S7) and \(P_{\mathrm{processor}}\) is the power of the Speck processor. The power consumption of the DVS pixels contains an analog part (biases and power of the analog circuits, \(P_{\mathrm{pixel,analog}}\)) and a digital part (asynchronous circuits that generate and route out the events, \(P_{\mathrm{pixel,digital}}\)). The power consumption of the processor also has two parts, \(P_{\mathrm{Logic}}\) and \(P_{\mathrm{RAM}}\). Because of how the power tracks are split, we use the number of SynOps to characterize all the power consumption of the processor, covering both RAM and logic. A SynOp includes the following steps: logic → Kernel RAM → logic → Neuron RAM Read → Neuron RAM Write → logic. The processor's power consumption is accordingly divided into logic power (all computations for neuron dynamics, \(P_{\mathrm{Logic}}\)) and RAM power (reads and writes of the kernel and neuron RAMs, \(P_{\mathrm{RAM}}\)). In summary, Speck's power breakdown can be categorized into four power tracks corresponding to its four modules: {pixel analog, pixel digital, logic, RAM}. Power is not measured in isolation for individual functional modules but per power track, according to actual usage. Thus, \(P_{\mathrm{Logic}}+P_{\mathrm{RAM}}\) can be regarded as the sum of the power consumption of the DVS pre-processing core (\(P_{\mathrm{pre}}\)), the NoC (\(P_{\mathrm{NoC}}\)), the SNN cores (\(P_{\mathrm{SNN}}\)), and the readout core (\(P_{\mathrm{readout}}\)).

For each power rail, the power consumption can be divided into resting and running components, which can be measured separately as follows:

1. Design a set of stimuli that induce known event/SynOp rates \(r_1, r_2, \ldots\) in the circuit under test (e.g., flickering light with a fixed frequency for the DVS, or a pseudo-random input spike stream for the SNN processor).

2. Measure the average power consumption \(P_1, P_2, \ldots\) over time for each stimulus.

3. Fit a straight line \(P = P_{\mathrm{rest}} + r E_{\mathrm{run}}\). The intercept \(P_{\mathrm{rest}}\) is the estimated resting power, and the slope \(E_{\mathrm{run}}\) is the running energy per spike/operation.
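Step 3 is an ordinary least-squares line fit. A dependency-free sketch follows; the function name is hypothetical, and the values in the usage example are synthetic numbers chosen to lie exactly on a line, not Speck measurements.

```python
def fit_rest_and_run(rates, powers):
    """Least-squares fit of P = P_rest + r * E_run.

    rates: event or SynOp rates in 1/s; powers: average power in W.
    Returns (P_rest in W, E_run in J per event/SynOp).
    """
    n = len(rates)
    if n < 2 or n != len(powers):
        raise ValueError("need at least two (rate, power) pairs")
    mean_r = sum(rates) / n
    mean_p = sum(powers) / n
    s_rr = sum((r - mean_r) ** 2 for r in rates)
    if s_rr == 0:
        raise ValueError("rates must not all be equal")
    s_rp = sum((r - mean_r) * (p - mean_p) for r, p in zip(rates, powers))
    e_run = s_rp / s_rr              # slope: running energy per operation
    p_rest = mean_p - e_run * mean_r  # intercept: resting power
    return p_rest, e_run
```

For synthetic inputs `rates = [0, 1e6, 2e6, 4e6]` and `powers = [1e-3 + r * 2e-9 for r in rates]`, the fit returns a resting power of 1 mW and a running energy of 2 nJ per operation. Using at least three or four distinct rates makes it possible to check that power really scales linearly with activity.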

The power results for Speck are shown in Figs. S6 and S7 and Table S2. Because processing is event-driven, the spike firing rate strongly affects power consumption. At a supply voltage of 1.2 V, the DVS and the processor on Speck typically consume resting power of 0.15 mW and 0.42 mW, respectively. In Table 1, we use three public datasets to test the power of Speck. Since the DVS part of Speck is not used in these tests, the power in Table 1 is only Pprocessor, which includes the pre-processing power, the NoC power, the total computation and memory read/write power consumed by the SNN cores, and the readout power.

Latency evaluation

We measure the latency of Speck as the time difference between an input event and the corresponding output event. Speck can be flexibly configured to include different modules in the pipeline, including the DVS pixels, pre-processing, and different SNN layers.

1. DVS response latency, defined as the time difference between a change in light intensity and the generation of the corresponding event, is measured to range from 40 μs to 3 ms, depending on the bandwidth configuration.

2. DVS pre-processing layer latency, defined as the time to adapt the raw DVS events into SNN input spikes (including pooling, ROI selection, mirroring, transposition, and multicasting), is measured to be 40 ns.

3. SNN processor latency (per layer), defined as the time to perform the event-driven convolution. It is measured by configuring a kernel with ones in a stride × stride square and threshold = 1, which guarantees that exactly one output spike is generated for every input spike. The value depends on the kernel size and on the relative position of the neuron in the kernel, and ranges from 120 ns to 7 μs per layer.

4. IO delay: Speck uses a customized serial interface for event input and output. The transmission time is 32 and 26 IO interface clock cycles for spike input and output, respectively. Inside the chip, the data are converted to and from parallel lines for fast intra-chip transmission and processing; the conversion time, including both input and output, is 125 ns.

To evaluate the end-to-end latency of the SNN processor on Speck, we map the network in Table 1 onto the chip and record the input and output spikes. Since the chip is fully asynchronous and feed-forward, an output spike occurs only after the corresponding input spike has sequentially triggered at least one spike in each layer. The measured minimum delay between an input-output spike pair is 3.36 μs (averaged over all samples), which includes the input spike transmission time of 1.28 μs at a 25 MHz IO interface clock.
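The serial IO figures can be cross-checked with simple arithmetic: at a 25 MHz interface clock, 32 cycles give the 1.28 μs input transmission time quoted above, and 26 cycles give 1.04 μs for output. The helper name is hypothetical.

```python
IO_CLOCK_HZ = 25e6  # IO interface clock used in the end-to-end measurement


def io_time_s(cycles: int, clock_hz: float = IO_CLOCK_HZ) -> float:
    """Serial transfer time for a given number of interface clock cycles."""
    return cycles / clock_hz


t_in = io_time_s(32)   # spike input transmission
t_out = io_time_s(26)  # spike output transmission
```

Of the measured 3.36 μs, about 2.08 μs therefore remain after the input transfer for the on-chip stages and the output.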

Spiking neuron models

Spiking neurons are the fundamental computation units of SNNs. They communicate via spikes coded as binary activations and closely mimic the behavior of biological neurons. The key difference between traditional artificial neurons and spiking neurons is that the latter incorporate temporal dynamics. A spiking neuron accumulates membrane potential over time from either the environment (via input information to the network) or internal communication (typically spikes from other neurons in the network); when the membrane potential reaches a certain threshold, the neuron fires spikes and updates its membrane potential. In this work, to evaluate the proposed dynamic framework and to deploy SNNs on hardware for event-based tasks, we use three spiking neuron models, which differ in their membrane potential update and spike firing rules.

LIF spiking neuron

The leaky integrate-and-fire (LIF)42,43 model is one of the most commonly used spiking neuron models because it strikes a balance between the complex spatio-temporal dynamics of biological neurons and a simple mathematical form. LIF neurons are suitable for large-scale SNN simulations and can be described by a differential equation:

$$\tau \frac{dU\left(t\right)}{dt}=-U\left(t\right)+I\left(t\right),$$

(3)

where τ is the time constant, and \(U(t)\) and \(I(t)\) are the membrane potential of the postsynaptic neuron and the input collected from presynaptic neurons, respectively. Solving Eq. (3) yields a simple iterative representation of the LIF neuron44,45 that is convenient for inference and training. To describe our dynamic SNN, we give the expression for one layer of an LIF-SNN (Fig. 3b):

$$\left\{\begin{array}{l}\mathbf{U}^{t,n}=\mathbf{H}^{t-1,n}+\mathbf{X}^{t,n}\\ \mathbf{S}^{t,n}=\mathrm{Heaviside}\left(\mathbf{U}^{t,n}-u_{\mathrm{th}}\right)\\ \mathbf{H}^{t,n}=V_{\mathrm{reset}}\mathbf{S}^{t,n}+\left(\gamma\mathbf{U}^{t,n}\right)\odot\left(\mathbf{1}-\mathbf{S}^{t,n}\right),\end{array}\right.$$

(4)

where t and n denote the timestep and the layer, respectively; \(\mathbf{U}^{t,n}\) is the membrane potential, produced by coupling the spatial feature \(\mathbf{X}^{t,n}\) with the temporal input \(\mathbf{H}^{t-1,n}\) (the internal state of the spiking neurons at the previous timestep); \(u_{\mathrm{th}}\) is the threshold that determines whether an entry of the output spike tensor \(\mathbf{S}^{t,n}\) is 1 or stays 0; \(\mathrm{Heaviside}(\cdot)\) is the Heaviside step function, with \(\mathrm{Heaviside}(x)=1\) when \(x\geq 0\) and \(\mathrm{Heaviside}(x)=0\) otherwise; \(V_{\mathrm{reset}}\) is the reset potential set after an output spike is fired; and \(\gamma=e^{-\frac{dt}{\tau}}\) is the decay factor. The spatial feature \(\mathbf{X}^{t,n}\) can be extracted from the input spikes \(\mathbf{S}^{t,n-1}\) by fully connected (FC) or convolution (Conv) operations. When using the Conv operation,

$$\mathbf{X}^{t,n}=\mathrm{AvgPool}\left(\mathrm{BN}\left(\mathrm{Conv}\left(\mathbf{W}^{n},\mathbf{S}^{t,n-1}\right)\right)\right),$$

(5)

where AvgPool(⋅), BN(⋅), and Conv(⋅) denote average pooling, batch normalization46, and the convolution operation, respectively; \(\mathbf{W}^{n}\) is the weight matrix; \(\mathbf{S}^{t,n-1}\) (\(n\neq 1\)) is a spike tensor containing only 0s and 1s; and \(\mathbf{X}^{t,n}\in\mathbb{R}^{c_n\times h_n\times w_n}\), where \(c_n\) is the number of channels and \(h_n\) and \(w_n\) are the height and width of each channel.

The LIF layer integrates the spatial feature \(\mathbf{X}^{t,n}\) and the temporal input \(\mathbf{H}^{t-1,n}\) into the membrane potential \(\mathbf{U}^{t,n}\). The fire and leak mechanism then generates the spatial spike tensor for the next layer and the new neuron states for the next timestep. Specifically, where an entry of \(\mathbf{U}^{t,n}\) is greater than or equal to the threshold \(u_{\mathrm{th}}\), the corresponding entry of the spatial output \(\mathbf{S}^{t,n}\) is 1; because \(\mathbf{1}-\mathbf{S}^{t,n}\) is then 0, the temporal output \(\mathbf{H}^{t,n}\) is reset to \(V_{\mathrm{reset}}\). Otherwise, \(\mathbf{S}^{t,n}\) is 0, no spike is emitted, and the decayed potential \(\gamma\mathbf{U}^{t,n}\) is passed on as \(\mathbf{H}^{t,n}\). After the Conv operation, all tensors have the same dimensions, i.e., \(\mathbf{X}^{t,n},\mathbf{H}^{t-1,n},\mathbf{U}^{t,n},\mathbf{S}^{t,n},\mathbf{H}^{t,n}\in\mathbb{R}^{c_n\times h_n\times w_n}\).
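One timestep of Eq. (4) can be sketched elementwise for a flat list of neurons, with the spatial input \(\mathbf{X}^{t,n}\) assumed to be already computed (e.g., by Eq. (5)). The function names are hypothetical, and this is a plain-Python illustration rather than the training framework used in this work; setting `gamma = 1` gives the IF neuron.

```python
import math


def decay_factor(dt: float, tau: float) -> float:
    """gamma = exp(-dt / tau) from Eq. (4)."""
    return math.exp(-dt / tau)


def lif_step(h_prev, x, u_th, v_reset=0.0, gamma=1.0):
    """One timestep of Eq. (4) for a flat list of neurons.

    h_prev: temporal input H^{t-1,n}; x: spatial input X^{t,n}.
    Returns (spikes S^{t,n}, new state H^{t,n}).
    """
    spikes, h_new = [], []
    for h, xi in zip(h_prev, x):
        u = h + xi                         # U = H^{t-1} + X
        s = 1 if u - u_th >= 0 else 0      # Heaviside(U - u_th), with H(0) = 1
        spikes.append(s)
        h_new.append(v_reset * s + gamma * u * (1 - s))  # reset or decay
    return spikes, h_new
```

For example, `lif_step([0.0, 0.5], [1.0, 0.2], u_th=0.8, gamma=0.5)` fires the first neuron (U = 1.0) and resets it to 0, while the second neuron (U = 0.7) stays silent and carries 0.35 to the next timestep.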

IF spiking neuron

Another commonly used spiking neuron is the integrate-and-fire (IF) neuron, which is supported on Speck. The IF neuron does not leak its membrane potential; that is, the decay factor γ = 1 in Eq. (4).

M-IF spiking neuron

When SNNs are used for event-based tasks, accuracy drops when a model trained synchronously is deployed asynchronously (detailed later). To alleviate this problem, we designed a Multi-spike IF (M-IF) neuron model and integrated it into the programmable framework Sinabs. M-IF can be described as:

$$\left\{\begin{array}{l}\mathbf{U}^{t,n}=\mathbf{H}^{t-1,n}+\mathbf{X}^{t,n}\\ \mathbf{S}^{t,n}=\text{M-Heaviside}\left(\mathbf{U}^{t,n},u_{\mathrm{th}}\right)\\ \mathbf{H}^{t,n}=V_{\mathrm{reset}}\,\mathrm{Heaviside}\left(\mathbf{U}^{t,n}-u_{\mathrm{th}}\right)+\mathrm{MOD}\left(\mathbf{U}^{t,n},u_{\mathrm{th}}\right)\odot\left(\mathbf{1}-\mathrm{Heaviside}\left(\mathbf{U}^{t,n}-u_{\mathrm{th}}\right)\right),\end{array}\right.$$

(6)

where \(\mbox{M-Heaviside}(\mathbf{U}^{t,n},u_{\mathrm{th}})\) is a multi-spike Heaviside function that satisfies \(\mbox{M-Heaviside}(\mathbf{U}^{t,n},u_{\mathrm{th}})=\lfloor\mathbf{U}^{t,n}/u_{\mathrm{th}}\rfloor\) when \(\mathbf{U}^{t,n}-u_{\mathrm{th}}\ge 0\) and \(\mbox{M-Heaviside}(\mathbf{U}^{t,n},u_{\mathrm{th}})=0\) when \(\mathbf{U}^{t,n}-u_{\mathrm{th}}<0\), \(\mathrm{MOD}(\cdot)\) is the remainder function, and \(\lfloor\cdot\rfloor\) is the floor function. Comparing Eqs. (4) and (6), the main differences are that γ = 1 in M-IF and that M-IF can fire multiple spikes in one timestep when the membrane potential exceeds the threshold.

To make the difference in dynamics among these three spiking neurons concrete, consider a simple example. Assume that at a certain timestep the membrane potential of the neuron is 1 and the threshold is \(u_{\mathrm{th}}=0.3\). LIF and IF each fire one spike and set the internal state (i.e., \(\mathbf{H}^{t,n}\)) to \(V_{\mathrm{reset}}\), whereas M-IF fires three spikes (\(\lfloor 1/0.3\rfloor=3\)) and also sets \(\mathbf{H}^{t,n}=V_{\mathrm{reset}}\). Speck supports LIF and IF spiking neurons, and we use IF for algorithm testing.
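The worked example above can be reproduced with a minimal single-neuron sketch of one timestep of each model. It assumes the LIF update has the form U = H + X, fire when U ≥ u_th, then H = V_reset if a spike was fired and H = γU otherwise (our reading of Eq. (4), which is not reproduced here); M-IF follows Eq. (6). The default V_reset = 0 and γ = 0.5 are hypothetical values chosen only for illustration.

```python
import math


def lif_step(h_prev, x, u_th, v_reset=0.0, gamma=0.5):
    """One LIF timestep for a single neuron: U = H + X, fire if U >= u_th, then reset or leak.

    gamma = 0.5 and v_reset = 0.0 are illustrative defaults, not values from the text.
    """
    u = h_prev + x
    s = 1 if u >= u_th else 0
    # Fired: H = V_reset. Silent: the decayed potential gamma * U is carried to the next step.
    h = v_reset if s else gamma * u
    return s, h


def if_step(h_prev, x, u_th, v_reset=0.0):
    """IF is LIF without leak, i.e., gamma = 1."""
    return lif_step(h_prev, x, u_th, v_reset, gamma=1.0)


def mif_step(h_prev, x, u_th, v_reset=0.0):
    """M-IF following Eq. (6): floor(U / u_th) spikes when U >= u_th, otherwise none."""
    u = h_prev + x
    if u >= u_th:
        return math.floor(u / u_th), v_reset
    # Below threshold, H = MOD(U, u_th); for 0 <= U < u_th this is simply U.
    return 0, math.fmod(u, u_th)


# The example from the text: membrane potential 1, threshold 0.3.
for name, step in (("LIF", lif_step), ("IF", if_step), ("M-IF", mif_step)):
    spikes, h = step(0.0, 1.0, 0.3)
    print(f"{name}: {spikes} spike(s), H = {h}")
```

Running it prints one spike for LIF and IF and three spikes for M-IF, each with H reset to 0.0.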

Synchronous training and asynchronous deployment

Because event cameras follow a novel sensing paradigm, extracting information from event streams to unlock the camera's advantages is challenging. Event cameras output asynchronous, spatially sparse events with μs temporal resolution. One of the most common ways to meet this challenge is to convert the asynchronous event stream into a synchronous representation and then process it with a corresponding algorithm20. Specifically, when an SNN is used, the event stream is aggregated into a sequence of event-based frames that is fed to the network for training. The trained model is then deployed to an asynchronous neuromorphic chip, which processes the input event stream event by event and thereby achieves minimal output latency. We call this training and deployment process "synchronous training and asynchronous deployment" (Fig. S12).

Here, we show how to convert an event stream into a sequence of frames, i.e., a frame-based representation. An event-based camera outputs an event stream in the address event representation format, in which each event has four dimensions: the timestamp, the polarity, and two spatial coordinates (Fig. 5c). The polarity indicates an increase (ON) or decrease (OFF) in brightness, represented by +1/−1. All events with the same timestamp \(t'\) form a set

$$E_{t'}=\left\{e_i \mid e_i=\left[t',p_i,x_i,y_i\right]\right\}, \tag{7}$$

where \((x_i, y_i)\) are the coordinates and \(p_i\) is the polarity of the i-th event. The event set can be reassembled into an event (spike) pattern tensor \(\mathbf{X}_{t'}\in\mathbb{R}^{2\times h_0\times w_0}\), where \(h_0=w_0=128\) (the spatial resolution of the DVS128) and 2 is the number of channels (ON/OFF). All elements of the tensor are either 0 or 1 (the +1/−1 values above only indicate which channel an event belongs to).
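A minimal sketch of building the spike pattern tensor \(\mathbf{X}_{t'}\) from the event set \(E_{t'}\), using nested lists to stay dependency-free. The channel order (ON in channel 0, OFF in channel 1) and the [channel][y][x] layout are assumptions for illustration; the text only fixes the shape 2 × h0 × w0 and the binary values.

```python
def events_to_pattern(events, h0=128, w0=128):
    """Build the spike pattern tensor X_{t'} (2 x h0 x w0, nested lists) from the set E_{t'}.

    events: list of [t', p, x, y] sharing one timestamp t'; p is +1 (ON) or -1 (OFF).
    """
    pattern = [[[0] * w0 for _ in range(h0)] for _ in range(2)]
    for _, p, x, y in events:
        channel = 0 if p == 1 else 1  # assumed channel order: ON -> 0, OFF -> 1
        pattern[channel][y][x] = 1    # assumed layout: row index y, column index x
    return pattern


# Two events at the same timestamp on a toy 4 x 4 sensor.
X = events_to_pattern([[5, 1, 3, 2], [5, -1, 0, 0]], h0=4, w0=4)
print(sum(v for ch in X for row in ch for v in row))  # number of active entries
```

Because every entry is set to 1 rather than incremented, the tensor stays binary even if a pixel reported the same polarity twice at one timestamp.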

Let the interval between two adjacent timestamps be \(dt'\) (μs for the DVS128). We aggregate η consecutive spike pattern tensors into one frame with a new temporal window \(dt=dt'\times\eta\). Formally, the event-based frame of the input layer at timestep t, \(\mathbf{S}^{t,0}\in\mathbb{R}^{2\times h_0\times w_0}\), is obtained by

$$\mathbf{S}^{t,0}=\mathrm{q}\left(\mathbf{X}_{t'}\right), \tag{8}$$

where \(t'\) is an integer index ranging over \(\left[\eta\times t,\eta\times(t+1)-1\right]\), \(t\in\{1,2,\cdots,T\}\) is the timestep, and q(⋅) is an aggregation function that can be chosen from options27,47 such as non-polarity aggregation, accumulate aggregation, and AND-logic aggregation. By default, we use accumulation with polarity information as q(⋅).
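The default accumulate aggregation can be sketched as a sum of the η binary tensors that fall in each window, keeping the ON/OFF channels separate. This sketch assumes 0-based frame indices (the text counts t from 1) and drops trailing tensors that do not fill a whole window; both are our choices, not details given in the text.

```python
def aggregate_frames(patterns, eta):
    """Accumulate consecutive spike pattern tensors into frames S^{t,0} (polarity kept).

    patterns: list of X_{t'} tensors (c x h x w nested lists), indexed by t'.
    Frame t (0-based here) sums X_{t'} for t' in [eta * t, eta * (t + 1) - 1].
    """
    T = len(patterns) // eta  # trailing tensors that do not fill a window are dropped
    frames = []
    for t in range(T):
        window = patterns[eta * t : eta * (t + 1)]
        c, h, w = len(window[0]), len(window[0][0]), len(window[0][0][0])
        frame = [[[sum(p[ch][i][j] for p in window) for j in range(w)]
                  for i in range(h)]
                 for ch in range(c)]
        frames.append(frame)
    return frames


patterns = [[[[1]], [[0]]], [[[1]], [[1]]], [[[0]], [[0]]], [[[1]], [[0]]]]  # four 2x1x1 tensors
frames = aggregate_frames(patterns, eta=2)
print(frames)  # [[[[2]], [[1]]], [[[1]], [[0]]]]
```

The frames now hold non-negative integer counts rather than binary values, which is why the first SNN layer later acts as an encoding layer.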

However, there is an accuracy gap between synchronous training and asynchronous deployment because the inputs during training and actual deployment differ. During training on the GPU the inputs are frames, whereas the neuromorphic chip processes the event stream asynchronously, event by event, updating the system state upon the arrival of each single event (Fig. S12). One effective remedy is to set dt as small as possible during training (to reduce its difference from \(dt'\)) and to use a larger timestep T (to increase the total input data). The price, however, is a significant increase in training time.

Another effective way is to use the proposed spiking neuron model in equation (6). The basic idea is that the frame-based representation increases the input values, and these changes are eventually reflected in the membrane potentials of the spiking neurons. In this situation, it is unreasonable to emit only one spike per timestep regardless of the actual value of the membrane potential. We therefore introduce the M-IF neuron, which can fire multiple spikes at once depending on the membrane potential. When deploying tasks in real scenarios, we use M-IF to train the model and then map it to Speck, which supports the IF model. Thanks to a reasonable choice of dt and T and the use of the M-IF neuron model, a synchronously trained model loses almost no accuracy when deployed asynchronously on Speck.
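A toy illustration of the mismatch, under simplifying assumptions of our own (a single neuron, unit-weight input events, threshold 1, reset to 0): on the chip, three events arriving one by one each trigger an IF update, while during frame-based training the same events are summed into one input value. A single-spike IF neuron then under-counts, whereas M-IF recovers the event-by-event spike count.

```python
import math


def if_spikes(u, u_th, multi):
    """Spikes emitted by one IF (multi=False) or M-IF (multi=True) update at potential u."""
    if u < u_th:
        return 0
    return math.floor(u / u_th) if multi else 1


def event_by_event(weights, u_th=1.0):
    """Asynchronous view: update the IF neuron after every single event (reset to 0 on a spike)."""
    h, total = 0.0, 0
    for w in weights:
        u = h + w
        s = if_spikes(u, u_th, multi=False)
        total += s
        h = 0.0 if s else u
    return total


def frame_based(weights, u_th=1.0, multi=False):
    """Synchronous view: all events in the window are summed into one frame value first."""
    return if_spikes(sum(weights), u_th, multi)


events = [1.0, 1.0, 1.0]  # three unit-weight events inside one frame window (toy values)
print(event_by_event(events), frame_based(events), frame_based(events, multi=True))  # 3 1 3
```

This only shows the intuition for a single neuron; in a real network the discrepancy propagates through every layer, which is why the text also recommends a small dt and a larger T.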

Attention-based dynamic framework

Our goal is to optimize the membrane potential via an attention-based module that dynamically tunes spiking responses in a data-dependent manner. The building blocks of SNNs, convolutional and recurrent operations, each process only a local neighborhood at a time. From this viewpoint, introducing non-local operations48 that capture long-range dependencies is the main way for the attention mechanism to optimize the membrane potential. Our dynamic framework covers two types of policy functions, refinement and masking, which can be implemented in three steps.

Step 1: Capture global information

(Figure S13a). Average-pooling and max-pooling are two commonly used methods for acquiring global information49,50. The former can learn the extent of the object, while the latter can learn its discriminative features. Applying pooling along different dimensions gathers global information along those dimensions. In a given layer of an SNN, the intermediate feature maps at all timesteps can be expressed as \(\mathbf{X}^{n}=[\mathbf{X}^{1,n},\cdots,\mathbf{X}^{t,n},\cdots,\mathbf{X}^{T,n}]\in\mathbb{R}^{T\times c_n\times h_n\times w_n}\). For instance, pooling that compresses everything except the temporal dimension yields vectors \(\mathbf{F}_{\mathrm{avg}},\mathbf{F}_{\max}\in\mathbb{R}^{T\times 1\times 1\times 1}\), which represent global information along the time dimension. Similarly, we can compress \(\mathbf{X}^{t,n}\in\mathbb{R}^{c_n\times h_n\times w_n}\) to \(\mathbf{F}_{\mathrm{avg}},\mathbf{F}_{\max}\in\mathbb{R}^{c_n\times 1\times 1}\) to obtain channel-wise global information. In addition, techniques that combine \(\mathbf{F}_{\mathrm{avg}}\) and \(\mathbf{F}_{\max}\) can be developed to capture global information more effectively51.
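Both pooling directions reduce to the same operation: for each entry of the outermost dimension, average- and max-pool over everything inside it. The sketch below assumes nested-list tensors in T × c × h × w order (temporal) or c × h × w order (channel); a real implementation would use tensor library reductions instead.

```python
def flatten(x):
    """Yield all scalars of an arbitrarily nested list."""
    if isinstance(x, list):
        for item in x:
            yield from flatten(item)
    else:
        yield x


def pool_inner(x):
    """For each entry of the outermost dimension, average- and max-pool over all inner dims."""
    f_avg, f_max = [], []
    for sub in x:
        vals = list(flatten(sub))
        f_avg.append(sum(vals) / len(vals))
        f_max.append(max(vals))
    return f_avg, f_max


def temporal_pool(x_n):
    """X^n (T x c x h x w) -> F_avg, F_max of length T: global information per timestep."""
    return pool_inner(x_n)


def channel_pool(x_tn):
    """X^{t,n} (c x h x w) -> F_avg, F_max of length c: global information per channel."""
    return pool_inner(x_tn)


# Toy feature maps with T = 2, c = 2, h = 1, w = 2.
x_n = [[[[1, 3]], [[0, 0]]], [[[2, 2]], [[4, 0]]]]
print(temporal_pool(x_n))    # ([1.0, 2.0], [3, 4])
print(channel_pool(x_n[1]))  # ([2.0, 2.0], [2, 4])
```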

Step 2: Model long-range dependencies

(Figure S13a). Long-range dependencies can be modeled with the global information obtained in step 1. There are many specific modeling methods, and different ones can be designed according to the accuracy, computational efficiency, and parameter-count requirements of specific application scenarios52. The most classic method models long-range dependencies, namely attention scores, with a learnable two-layer FC network49,50. For simplicity, we only show this approach here. Formally, the typical two-layer attention function50 is:

$$\mathrm{f}(\cdot)=\sigma\left(\mathbf{W}_1\left(\mathrm{ReLU}\left(\mathbf{W}_0\left(\mathrm{AvgPool}(\cdot)\right)\right)\right)+\mathbf{W}_1\left(\mathrm{ReLU}\left(\mathbf{W}_0\left(\mathrm{MaxPool}(\cdot)\right)\right)\right)\right), \tag{9}$$

where AvgPool(⋅) and MaxPool(⋅) are the results of average-pooling and max-pooling, respectively, the output of f(⋅) is a vector with the same dimensions as AvgPool(⋅) and MaxPool(⋅), σ(⋅) is the sigmoid function, and \(\mathbf{W}_0\) and \(\mathbf{W}_1\) are the weights of the linear layers in the shared FC network.

In our framework, the input of f(⋅) is \(\mathbf{X}^{n}\) when temporal-wise attention is performed. The pooling operations first produce T-dimensional vectors, and the shared two-layer FC network then maps them to a new T-dimensional vector. Each element of this vector is an attention score that measures the importance of the intermediate feature maps at one timestep. To model channel-wise attention, we only need a new trainable two-layer FC network whose input is \(\mathbf{X}^{t,n}\), which yields a \(c_n\)-dimensional channel-wise attention vector at each timestep. Note that channel attention vectors can be shared across the temporal dimension to reduce computation (Fig. 3c in this work) or computed independently at each timestep28. In terms of final task accuracy, there is little difference between the two designs.
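Eq. (9) can be sketched directly on the pooled vectors from step 1. The shared FC uses the same \(\mathbf{W}_0\) and \(\mathbf{W}_1\) for the average- and max-pooled branches, and, as in Eq. (9), no bias terms. The hidden width (2 here) and all weight values are hypothetical; in practice they are learned.

```python
import math


def matvec(W, v):
    """Plain matrix-vector product for nested lists."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def attention_scores(f_avg, f_max, W0, W1):
    """Eq. (9): sigma(W1 ReLU(W0 F_avg) + W1 ReLU(W0 F_max)) with a shared two-layer FC."""
    a = matvec(W1, relu(matvec(W0, f_avg)))
    m = matvec(W1, relu(matvec(W0, f_max)))
    return [sigmoid(x + y) for x, y in zip(a, m)]


# Temporal attention with T = 4 timesteps and a hypothetical hidden width of 2.
f_avg = [0.1, 0.4, 0.2, 0.0]
f_max = [1.0, 2.0, 1.0, 0.0]
W0 = [[0.5, -0.2, 0.1, 0.0], [0.0, 0.3, -0.1, 0.2]]      # hidden x T
W1 = [[1.0, 0.0], [0.5, 0.5], [-1.0, 0.2], [0.0, 1.0]]   # T x hidden
scores = attention_scores(f_avg, f_max, W0, W1)
print([round(s, 3) for s in scores])  # one score in (0, 1) per timestep
```

For channel-wise attention, the same function applies with vectors of length \(c_n\) and a separate pair of weight matrices.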

Step 3: Mask information

(Figure S13b). A more aggressive policy masks part of the information based on the attention scores. The attention scores output by f(⋅) lie in the interval (0, 1), and the masking policy directly sets some of them to 0. In this work, we use a reparameterized winner-take-all function that retains the top-K largest values in f(⋅) and sets all other values to 0:

$$\mathrm{g}(\cdot)=\mathrm{g}\left(\mathrm{f}(\cdot)\right), \tag{10}$$

where the output dimensions of masking function g(⋅) and f(⋅) are exactly the same.

Finally, the feature maps optimized by the Attention-based Refine (AR) and Attention-based Mask (AM) modules can be expressed as:

$$\mathbf{X}^{n}\leftarrow \mathrm{f}(\mathbf{X}^{n})\otimes\mathbf{X}^{n}, \tag{11}$$

or

$$\mathbf{X}^{n}\leftarrow \mathrm{g}(\mathbf{X}^{n})\otimes\mathbf{X}^{n}. \tag{12}$$

During the multiplication ⊗, the attention scores are broadcast (copied) accordingly. For example, the temporal-wise attention vector is broadcast along both the channel and spatial dimensions, and the channel-wise attention vector is broadcast along the temporal and spatial dimensions. In practice, the functions f(⋅) and g(⋅) have a huge design space21,52 (Fig. S13c).
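A forward-pass sketch of the masking step and of Eqs. (11)/(12) for temporal-wise attention. The top-K mask keeps the K largest scores and zeroes the rest; the reparameterization that makes this selection trainable is not reproduced here. Scaling each timestep's c × h × w block by a single score is the broadcast described above. Ties are broken by the lower index, which is our choice.

```python
def top_k_mask(scores, k):
    """Keep the k largest attention scores and set the rest to 0 (ties go to the lower index)."""
    keep = set(sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k])
    return [s if i in keep else 0.0 for i, s in enumerate(scores)]


def scale_temporal(x_n, scores):
    """X^n <- scores (x) X^n: each timestep's c x h x w map is multiplied by its own score."""
    def scale(x, a):
        # Recursively broadcast the scalar score over the channel and spatial dimensions.
        return [scale(item, a) for item in x] if isinstance(x, list) else a * x
    return [scale(x_t, a) for x_t, a in zip(x_n, scores)]


scores = [0.2, 0.9, 0.5, 0.7]
masked = top_k_mask(scores, k=2)
print(masked)  # [0.0, 0.9, 0.0, 0.7]
print(scale_temporal([[[[1, 2]]], [[[3, 4]]]], [0.5, 0.0]))  # [[[[0.5, 1.0]]], [[[0.0, 0.0]]]]
```

Timesteps whose score is masked to 0 contribute nothing downstream, which is where the masking policy saves spikes.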

Details of algorithm evaluation

The attention-based dynamic framework is implemented as a plug-and-play module that can be integrated into existing SNN architectures. We perform strict ablation experiments: we add the dynamic modules to the baseline (vanilla) SNNs and keep all other experimental details unchanged, including input-output encoding and decoding, training methods, hyper-parameter settings, loss function, and network structure. For simplicity, we choose some baselines from previous work27, and typical experimental settings are given in Table S4.

Datasets

We test dynamic SNNs on datasets of two different scales. DVS128 Gesture30, DVS128 Gait-day31, and DVS128 Gait-night32 are three smaller datasets designed mainly for specific application scenarios. On these three datasets, we first evaluate the algorithmic characteristics (Fig. 4) and then deploy the dynamic SNNs to Speck (Fig. 5, Table 1). To verify the performance of the proposed dynamic framework on deep SNNs, we also test on HAR-DVS33, currently the largest dataset for human action recognition. HAR-DVS contains 300 categories and more than 100K event streams recorded with a DAVIS346 camera. Its design considers many factors, such as movement speed, dynamic background, occlusion, and multiple views, making HAR-DVS a challenging event-based benchmark. The original HAR-DVS dataset is very large, over 4 TB. For ease of processing, its authors convert each event stream into frames via the frame-based representation and randomly sample 8 frames per stream to form the official HAR-DVS dataset.

Training details

With the frame-based representation, the input frames \(\mathbf{S}^{t,0}\) contain both zero and non-zero integer values. The first layer of the SNN therefore acts as an encoding layer that re-encodes these non-binary inputs into spike trains53, and all other layers communicate via spikes. The spikes of the output neurons are counted over the whole time window, and a softmax function followed by cross-entropy serves as the loss function. All models were trained from scratch with spatio-temporal backpropagation, which uses surrogate gradients to handle the non-differentiability of spike activity44,45. The general training setup for the chosen baseline models is given in Table S4.
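The output decoding and loss can be sketched as follows: spikes of each output neuron are summed over all T timesteps, and the counts are treated as logits for softmax plus cross-entropy. Using raw (unnormalized) counts is an assumption of this sketch, and the surrogate-gradient backward pass is not shown.

```python
import math


def spike_count_loss(output_spikes, label):
    """Softmax cross-entropy on spike counts.

    output_spikes: T x K nested list of output-layer spikes; label: target class index.
    Returns (loss, counts), where counts[k] is the total number of spikes of neuron k.
    """
    K = len(output_spikes[0])
    counts = [sum(step[k] for step in output_spikes) for k in range(K)]
    m = max(counts)  # subtract the max before exponentiating for numerical stability
    log_z = m + math.log(sum(math.exp(c - m) for c in counts))
    return log_z - counts[label], counts


loss, counts = spike_count_loss([[1, 0, 0], [1, 1, 0]], label=0)  # T = 2, K = 3
print(counts, round(loss, 4))
```

The predicted class at inference time is simply the output neuron with the largest spike count.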

Network structure

In Table S5, we use network structures of three sizes for dynamic algorithm evaluation. In typical edge computing scenarios, tasks are single and simple, so only lightweight networks are usually deployed. On the Gesture, Gait-day, and Gait-night datasets, we use three-layer27 and five-layer54 Conv-based LIF-SNNs. Specifically, the network structure of LIF-SNN27 is Input-MP4-64C3S1-128C3S1-BN-AP2-128C3S1-BN-AP2-256FC-Output, and that of LIF-SNN54 is Input-128C3S1-MP2-128C3S1-MP2-128C3S1-MP2-128C3S1-MP2-128C3S1-MP2-512FC-AP10-Output. Here, AP2 denotes average pooling with a 2 × 2 kernel, MP2 denotes max pooling with a 2 × 2 kernel, nC3Sm denotes a Conv layer with n output feature maps, a 3 × 3 weight kernel, and stride m, and kFC denotes a linear layer with k neurons. We then use Res-SNN-1855 to verify the performance of the proposed dynamic framework on deep SNNs and the large-scale HAR-DVS dataset. Experimental results are given in Table S5. Our algorithm significantly improves task accuracy on lightweight networks: for instance, integrating our module into the three-layer Conv-based LIF-SNN improves accuracy on the Gesture and Gait-day datasets by 4.2% and 6.1%, respectively. Our algorithm also works well on deep SNNs, with an accuracy gain of 0.9% on HAR-DVS.
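To make the layer notation easier to read, here is a small hypothetical parser that expands a structure string into per-layer descriptions. It reads each token literally (for example, AP10 becomes average pooling with kernel size 10); what AP10 means after an FC layer is not spelled out in the text, so the parser makes no claim about it.

```python
import re


def parse_layer(token):
    """Translate one token of the network-structure notation into a readable description."""
    if token in ("Input", "Output", "BN"):
        return {"type": token}
    m = re.fullmatch(r"(\d+)C(\d+)S(\d+)", token)  # nC3Sm: n maps, 3 x 3 kernel, stride m
    if m:
        n, k, s = map(int, m.groups())
        return {"type": "Conv", "out_channels": n, "kernel": k, "stride": s}
    m = re.fullmatch(r"(AP|MP)(\d+)", token)  # APk / MPk: average / max pooling, kernel k
    if m:
        kind = "AvgPool" if m.group(1) == "AP" else "MaxPool"
        return {"type": kind, "kernel": int(m.group(2))}
    m = re.fullmatch(r"(\d+)FC", token)  # kFC: linear layer with k neurons
    if m:
        return {"type": "FC", "neurons": int(m.group(1))}
    raise ValueError(f"unknown token: {token}")


def parse_structure(spec):
    """Split a dash-separated structure string and parse every token."""
    return [parse_layer(tok) for tok in spec.split("-")]


three_layer = "Input-MP4-64C3S1-128C3S1-BN-AP2-128C3S1-BN-AP2-256FC-Output"
for layer in parse_structure(three_layer):
    print(layer)
```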

Input time window

When event-based applications are deployed in real scenarios, a task result is usually expected at regular intervals; we call this interval the input time window, i.e., dt × T. Generally, a larger input time window provides more valid data and improves task performance, but an overly long window degrades the user's experience with the product. In this work, we test the proposed dynamic framework with input time windows of multiple scales on the Gesture, Gait-day, and Gait-night datasets. One option is to fix dt × T to a given length (called restricted input). Another option is to fix T and adaptively set dt so that dt × T equals the total duration of each sample (called full input). As shown in Table S5, we run ablation experiments on restricted input with 540 ms = 15 ms × 36 (dt = 15 ms, T = 36). The samples in Gesture, Gait-day, and Gait-night last about 6000 ms, 4400 ms, and 5500 ms, respectively, and we also compare vanilla and dynamic SNNs on full input. Experimental results show that dynamic SNNs achieve higher accuracy and lower network average spiking firing rates than vanilla SNNs across multiple input time window scales (Table S5).

Theoretical energy consumption evaluation

In traditional ANNs, the number of floating-point operations (FLOPs) is used to estimate the computational burden, and almost all FLOPs are multiply-accumulate (MAC) operations56. Following this line of thinking, the theoretical energy consumption of SNNs is also evaluated by FLOPs28,55,57,58, which are mainly accumulate (AC) operations and are strongly correlated with the number of spikes. Although this view ignores the hardware implementation and the temporal dynamics of spiking neurons59, it remains useful for simple analysis of algorithm performance and for guiding algorithm design. We use this method to qualitatively analyze the energy consumption of dynamic SNNs.

In general, the energy consumption of SNNs is related to spike firing, and energy efficiency improves as spike counts decrease. We define three kinds of spiking firing rates to comprehensively evaluate how the proposed dynamic framework affects firing. First, we define the Network Spiking Firing Rate (NSFR): at timestep t, the NSFR of a spiking network is the ratio of the number of spikes produced by all neurons to the total number of neurons at this timestep. Then, we define the Network Average Spiking Firing Rate (NASFR) as the average of the NSFR over all T timesteps. The NSFR is used to evaluate how the spike distribution of one network changes across timesteps, while the NASFR is used to compare the spike distributions of different networks. Finally, we define the Layer Average Spiking Firing Rate (LASFR) to evaluate the energy consumption of SNNs at a finer granularity. At timestep t, a layer's spiking firing rate (LSFR) is the ratio of the number of spikes produced by the neurons in that layer to the total number of neurons in that layer; the LASFR is the average of the LSFR over all T timesteps. Note that a set of NSFR, NASFR, and LASFR values can be obtained for each sample. In this work, by default, we compute these spiking firing rates for every sample in the test set and report their mean.
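A minimal sketch of the three firing-rate definitions for a single sample. It assumes spikes are stored per layer and per timestep as flat 0/1 lists; averaging the returned values over all test samples gives the reported numbers.

```python
def firing_rates(spikes):
    """spikes[l][t] is a flat list of 0/1 outputs of layer l at timestep t (one sample).

    Returns (NSFR per timestep, NASFR, LASFR per layer) as defined in the text.
    """
    L, T = len(spikes), len(spikes[0])
    sizes = [len(spikes[l][0]) for l in range(L)]
    total_neurons = sum(sizes)
    # NSFR(t): all spikes in the network at t divided by all neurons.
    nsfr = [sum(sum(spikes[l][t]) for l in range(L)) / total_neurons for t in range(T)]
    nasfr = sum(nsfr) / T
    # LASFR(l): the per-layer rate LSFR(l, t) averaged over the T timesteps.
    lasfr = [sum(sum(spikes[l][t]) / sizes[l] for t in range(T)) / T for l in range(L)]
    return nsfr, nasfr, lasfr


# Toy sample: layer 0 has 2 neurons, layer 1 has 4 neurons, T = 2.
spikes = [[[1, 0], [0, 0]], [[1, 1, 0, 0], [1, 0, 0, 0]]]
print(firing_rates(spikes))
```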

Specifically, the inference energy cost \(E_{\mathrm{Base}}\) of the vanilla SNN is computed as

$$E_{\mathrm{Base}}=E_{\mathrm{MAC}}\cdot FL_{\mathrm{SNNConv}}^{1}+E_{\mathrm{AC}}\cdot\left(\sum_{n=2}^{N}FL_{\mathrm{SNNConv}}^{n}+\sum_{m=1}^{M}FL_{\mathrm{SNNFC}}^{m}\right), \tag{13}$$

where N and M are the total numbers of Conv and FC layers, \(E_{\mathrm{MAC}}\) and \(E_{\mathrm{AC}}\) are the energy costs of a MAC and an AC operation, \(FL_{\mathrm{SNNConv}}^{n}=(k_n)^2\cdot h_n\cdot w_n\cdot c_{n-1}\cdot c_n\cdot T\cdot\Phi_{\mathrm{Conv}}^{n-1}\) and \(FL_{\mathrm{SNNFC}}^{m}=i_m\cdot o_m\cdot T\cdot\Phi_{\mathrm{FC}}^{m-1}\) are the FLOPs of the n-th Conv and m-th FC layer, respectively, \(k_n\) is the kernel size of the convolutional layer, \(i_m\) and \(o_m\) are the input and output dimensions of the FC layer, and \(\Phi_{\mathrm{Conv}}^{n}\) and \(\Phi_{\mathrm{FC}}^{m}\) are the LASFR of the SNN at the n-th Conv and m-th FC layer. The first layer is the encoding layer, so its basic operation is MAC. Following previous work58,60, we assume 32-bit floating-point operations implemented in 45 nm technology61, for which \(E_{\mathrm{MAC}}=4.6\) pJ and \(E_{\mathrm{AC}}=0.9\) pJ.
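Eq. (13) translates directly into a few lines of Python. The layer sizes and firing rates in the example are hypothetical toy values. One assumption worth flagging: the formula's \(\Phi^{0}\) for the first (encoding) layer is not defined in the text, so the example passes a rate of 1.0 there, i.e., it treats the non-binary input frames as dense.

```python
E_MAC, E_AC = 4.6e-12, 0.9e-12  # joules per operation: 32-bit float, 45 nm (values quoted above)


def conv_flops(k, h, w, c_in, c_out, T, rate_in):
    """FL_SNNConv^n = k_n^2 * h_n * w_n * c_{n-1} * c_n * T * Phi_Conv^{n-1}."""
    return k ** 2 * h * w * c_in * c_out * T * rate_in


def fc_flops(i, o, T, rate_in):
    """FL_SNNFC^m = i_m * o_m * T * Phi_FC^{m-1}."""
    return i * o * T * rate_in


def base_energy(conv_layers, fc_layers, T):
    """Eq. (13): the first (encoding) Conv layer costs MACs; all later layers cost ACs.

    conv_layers: list of (k, h, w, c_in, c_out, rate_in); fc_layers: list of (i, o, rate_in).
    """
    first, *rest = conv_layers
    mac = conv_flops(*first[:5], T, first[5])
    ac = sum(conv_flops(*layer[:5], T, layer[5]) for layer in rest)
    ac += sum(fc_flops(i, o, T, r) for i, o, r in fc_layers)
    return E_MAC * mac + E_AC * ac


# Toy network (hypothetical sizes and rates): two Conv layers and one FC layer, T = 2.
conv = [(3, 2, 2, 2, 4, 1.0), (3, 2, 2, 4, 4, 0.5)]
fc = [(16, 10, 0.25)]
print(base_energy(conv, fc, T=2))  # about 3.24e-09 J (576 MACs + 656 ACs)
```

Because only the rates change between a vanilla and a dynamic SNN with the same structure, this is the quantity that sparser firing reduces.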

Equation (13) shows that once the network structure and the simulation timestep T are fixed, \(E_{\mathrm{Base}}\) depends only on the spiking firing rates \(\Phi_{\mathrm{Conv}}^{n}\) and \(\Phi_{\mathrm{FC}}^{m}\). In dynamic SNNs, the attention module optimizes the membrane potential, which in turn reduces spike firing. The energy increase therefore comes from the MAC operations needed to regulate the membrane potential, and the energy decrease comes from the drop in AC operations caused by sparser spike firing. That is,

$$\Delta_E=E_{\mathrm{Dyn}}-E_{\mathrm{Base}}=E_{\mathrm{MAC}}\cdot\Delta_{\mathrm{MAC}}-E_{\mathrm{AC}}\cdot\Delta_{\mathrm{AC}}, \tag{14}$$

where \(E_{\mathrm{Dyn}}\) is the energy cost of the dynamic SNN, and \(\Delta_{\mathrm{MAC}}\) and \(\Delta_{\mathrm{AC}}\) are the number of operations added to compute the attention scores and removed from the SNN, respectively. The energy consumption of the dynamic SNN is lower than that of the vanilla SNN as long as \(\Delta_E<0\). We further define \(r_{\mathrm{REC}}\) as:

$$r_{\mathrm{REC}}=\frac{\Delta_E}{E_{\mathrm{Base}}}. \tag{15}$$
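Eqs. (14) and (15) as a short helper. All operation counts and the baseline energy below are hypothetical, chosen only to show the sign convention: a negative \(r_{\mathrm{REC}}\) means the dynamic SNN is cheaper than its vanilla baseline.

```python
E_MAC, E_AC = 4.6e-12, 0.9e-12  # joules per operation, as assumed in the text


def energy_change(delta_mac, delta_ac, e_base):
    """Eq. (14)-(15): Delta_E = E_MAC * Delta_MAC - E_AC * Delta_AC; r_REC = Delta_E / E_Base.

    delta_mac: extra MACs spent computing attention scores.
    delta_ac: ACs saved in the SNN thanks to sparser firing.
    """
    delta_e = E_MAC * delta_mac - E_AC * delta_ac
    return delta_e, delta_e / e_base


# Hypothetical numbers: 10 extra MACs, 400 saved ACs, baseline energy 3.24 nJ.
delta_e, r_rec = energy_change(10, 400, 3.24e-9)
print(delta_e, round(r_rec, 4))  # negative values: the dynamic SNN saves energy
```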

Equation (13) indicates that a sparse firing regime is key to realizing the energy advantage of SNNs. Ideally, we would obtain the best task performance with the fewest spikes, but the relationship between spike firing and performance is complex and has not been adequately explored. This work demonstrates that data-dependent attention processing can significantly improve performance while substantially reducing the number of spikes.

As shown in Eq. (14), the attention-based dynamic process has a cost, but overall the benefits outweigh it. Empirically, the firing of SNNs depends on the dataset and network size. For example, on the Gesture and Gait-day datasets, the network average spiking firing rate of the three-layer Conv-based LIF-SNN is greater than that of the five-layer one (Table S5). Consequently, the reduction in spike count is also more pronounced for the three-layer SNN: on Gait-day, the AM module reduces the spiking firing rate by 87.2%, resulting in \(r_{\mathrm{REC}}=-0.68\). By contrast, in the five-layer SNN, the dynamic SNN reduces the spiking firing rate by 60.0%, yielding \(r_{\mathrm{REC}}=-0.18\) (the first encoding layer of the baseline SNN has so many MAC operations that \(E_{\mathrm{Base}}\) is higher). Thus, \(r_{\mathrm{REC}}\) depends on the structure of the baseline. These analyses suggest that, when designing dynamic SNN algorithms for practical applications, we should jointly consider factors such as task scale, network scale, attention module design, spike firing, and task performance to achieve the best cost-effectiveness.

Comparison with previous methods

In Table S5, we compare our results with prior work. On the Gesture, Gait-day, and Gait-night datasets, we obtain state-of-the-art or comparable results. Since our main goal is to verify the dynamic algorithm, we did not simply scale up the network on these datasets. Compared with other types of networks that process event streams, such as Graph Convolutional Networks (GCN), SNNs have clear performance advantages when the input time window is limited. For example, we achieve an accuracy of 98.2% with 540 ms on Gait-night, higher than the 94.9% obtained by 3D-GCN using the full input32. We also tested dynamic SNNs with the input time window restricted to 120 ms on the Gait-day and Gait-night datasets, where their performance advantage is even more significant. For instance, we obtain an accuracy of 84.8% on Gait-day, compared with about 38.5% for 3D-GCN with 200 ms32. Because there are no SNN performance benchmarks for HAR-DVS, we first use Res-SNN-1855 as a baseline and then incorporate our modules. Our dynamic SNN achieves an accuracy of 46.7% on HAR-DVS, close to the results obtained with ANN methods33. Although it is beyond the scope of this work, we anticipate that tailoring the use of attention-based dynamic modules to specific event-based tasks will yield further gains in both effectiveness and efficiency.

In addition, the results in Table S5 raise a valuable but complex open question: which factors affect the spiking firing rate of SNNs, and how? Empirically, network firing is related to dataset size, network scale, input time window and timestep, spiking neuron type, etc. For instance, on the Gesture dataset, the NASFR of the lightweight three-layer LIF-SNN27 (0.17) is significantly larger than that of the larger five-layer LIF-SNN54 (0.07). Consequently, once the NASFR of a vanilla SNN is already low, it is relatively hard to reduce spike firing further. These observations are crucial for understanding the sparse activations of SNNs, and we hope they will inspire theoretical and algorithmic work in subsequent research.

Deployment of SNNs on Speck

Speck greatly eases the deployment of SNNs on neuromorphic chips by providing a complete toolchain (Figs. S8, S9). First, we offer Tonic, a data management tool that lets users manage existing or handcrafted event data. Tonic provides efficient APIs that give users easy access to various public event camera datasets. Then, to facilitate chip-compatible neural network development, we propose the Sinabs Python package. Sinabs is a PyTorch-based deep learning library for SNNs that focuses on simplicity, fast training, and scalability. It supports various SNN training methods, such as ANN-to-SNN conversion62 and direct training based on Back Propagation Through Time (BPTT)44. It also allows users to freely define synapse/spiking neuron models and surrogate gradient functions for advanced SNN development. Sinabs also comes with many useful plugins. For instance, with the Sinabs-Speck plugin, users can easily quantize and transfer model parameters and generate a compatible configuration for Speck; the EXODUS plugin optimizes the gradient flow and can make BPTT training 30× faster.

Moreover, we provide Samna, the developer interface to the toolchain and the run-time environment for interacting with Speck. Designed for efficiency and ease of use, Samna offers a set of Python APIs while its core runs in C++, so users can work with neuromorphic devices conveniently. Samna also features an event-based stream filter system that allows real-time, multi-branch processing of the event streams flowing into or out of the device. Samna integrates a just-in-time compiler, which makes this filter system even more flexible: users can add their own filter functions at run time to meet the requirements of different scenarios.

Wanda Parisien is a computing expert who navigates the vast landscape of hardware and software. With a focus on computer technology, software development, and industry trends, Wanda delivers informative content, tutorials, and analyses to keep readers updated on the latest in the world of computing.