Classical reservoir computing

We start with a nonlinear dynamical network of N variables of the following general form,

$$\dot{{{{{{{{\bf{x}}}}}}}}}(t)={{{{{{{\boldsymbol{f}}}}}}}}[{{{{{{{\bf{x}}}}}}}}(t)],$$

(1)

where \({{{{{{{\bf{x}}}}}}}}(t)={[{x}_{1}(t),\ldots,{x}_{N}(t)]}^{\top }\) denotes the N-dimensional (N-D) state of the system at time t, and \({{{{{{{\boldsymbol{f}}}}}}}}[{{{{{{{\bf{x}}}}}}}}(t)]={\left({f}_{1}[{{{{{{{\bf{x}}}}}}}}(t)],{f}_{2}[{{{{{{{\bf{x}}}}}}}}(t)],\ldots,{f}_{N}[{{{{{{{\bf{x}}}}}}}}(t)]\right)}^{\top }\) is the N-D nonlinear vector field. In this article, we assume that neither the vector field f (equivalently, each element fi) nor the underlying complex interaction mechanism among these N variables is partially or completely unknown a prior. The only available information about the underlying system is the observational time series x(t) at the discrete time steps. Here, we choose a regularly sampled time increment Δt.

The traditional RC, a powerful tool for modeling time series data, embeds the observational data x(t) into an n-dimensional hidden state r(t) using an input matrix Win of dimension n × N. Then the hidden state r(t) evolves within the reservoir with a weighted adjacency matrix A of dimension n × n, given by

$${{{{{{{\bf{r}}}}}}}}(t+{{\Delta }}t)=(1-l)\cdot {{{{{{{\bf{r}}}}}}}}(t)+l\cdot \tanh \left[{{{{{{{{\bf{W}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}}}{{{{{{{\bf{x}}}}}}}}(t)+{{{{{{{\bf{A}}}}}}}}{{{{{{{\bf{r}}}}}}}}(t)+{{{{{{{{\bf{b}}}}}}}}}_{{{{{{{{\rm{r}}}}}}}}}\right],$$

(2)

where l is the leaky rate and br is the bias term. Subsequently, an additional output layer is employed, typically implemented as a simple linear transformation using the matrix Wout, mapping the reservoir state space to the desired output space. Here, the output space is the original data space,

$$\hat{{{{{{{{\bf{x}}}}}}}}}(t+{{\Delta }}t)={{{{{{{\bf{x}}}}}}}}(t)+{{{{{{{{\bf{W}}}}}}}}}_{{{{{{{{\rm{out}}}}}}}}}{{{{{{{\bf{r}}}}}}}}(t+{{\Delta }}t),$$

(3)

where Woutr(t + Δt) can be explained as the predicted residue between x(t + Δt) and x(t), or equivalently the approximated integral operator \(\int\nolimits_{t}^{t+{{\Delta }}t}{{{{{{{\boldsymbol{f}}}}}}}}[{{{{{{{\bf{x}}}}}}}}(\tau )]{{{{{{{\rm{d}}}}}}}}\tau\). It is important to note that the only trained module is the output layer, i.e., Wout, which can be solved explicitly via the Tikhonov regularized regression54 with the loss unction:

$${{{{{{{{\mathcal{L}}}}}}}}}_{{{\Delta }}t}=\mathop{\sum}\limits_{t}{\left\{{{{{{{{{\bf{W}}}}}}}}}_{{{{{{{{\rm{out}}}}}}}}}{{{{{{{\bf{r}}}}}}}}(t+{{\Delta }}t)-[{{{{{{{\bf{x}}}}}}}}(t+{{\Delta }}t)-{{{{{{{\bf{x}}}}}}}}(t)]\right\}}^{2}+{\lambda }_{W}\cdot \parallel {{{{{{{{\bf{W}}}}}}}}}_{{{{{{{{\rm{out}}}}}}}}}\parallel,$$

(4)

where λW is the regularization coefficient. By leveraging the trained RC, one can accurately achieve dynamics prediction.

Higher-order structure in dynamical systems

To establish our framework, we first introduce a few important definitions about the higher-order structure for any given function of vector field based on the simplicial complexes summarized in55.

Definition 1

Separable and inseparable functions. Assume that g(s) is an arbitrarily given scalar function with respect to s = {v1, v2, . . . , vk}, a non-empty set containing k variables. If there are two variable sets s1, s2 ∈ {s1, s2∣s1 ⊄ s2, s2 ⊄ s1, s1 ∪ s2 = s}, and two scalar functions g1 and g2 such that

$$g({{{{{{{\bf{s}}}}}}}})={g}_{1}({{{{{{{{\bf{s}}}}}}}}}_{1})+{g}_{2}({{{{{{{{\bf{s}}}}}}}}}_{2}),$$

(5)

then g(s) is a separable function with respect to s, i.e., g(s) can be decomposed into the sum of two functions whose variable sets have no inclusion relationship; otherwise g(s) is an inseparable function.

Definition 2

Higher-order neighbors. Consider the nonlinear scalar differential equation \(\dot{u}=g({{{{{{{{\bf{s}}}}}}}}}_{u})\), where g(su) is a scalar function with respect to a set of variables su. We decompose the function g(su) into a sum of several inseparable functions gi(su,i) as

$$g({{{{{{{{\bf{s}}}}}}}}}_{u})={g}_{1}({{{{{{{{\bf{s}}}}}}}}}_{u,1})+{g}_{2}({{{{{{{{\bf{s}}}}}}}}}_{u,2})+…+{g}_{{D}_{u}}({{{{{{{{\bf{s}}}}}}}}}_{u,{D}_{u}}),\\ {{{{{{{{\bf{s}}}}}}}}}_{u}={{{{{{{{\bf{s}}}}}}}}}_{u,1}\cup {{{{{{{{\bf{s}}}}}}}}}_{u,2}\cup \cdots \cup {{{{{{{{\bf{s}}}}}}}}}_{u,{D}_{u}},\,\,{{{{{{{{\bf{s}}}}}}}}}_{u,i}\not\subset {{{{{{{{\bf{s}}}}}}}}}_{u,j},$$

(6)

for all i, j ∈ {1, 2, . . . , Du} with i ≠ j, where Du is the number of terms. Then, we name the set \({{{{{{{{\bf{s}}}}}}}}}_{u,i}=\{{v}_{{i}_{1}},{v}_{{i}_{2}},…,{v}_{{i}_{{k}_{i}}}\}\) as the (ki-1)-D simplicial complex, and the i-th higher-order neighbor of node u. Denote by \({{{{{{{{\mathscr{S}}}}}}}}}_{u}=\{{{{{{{{{\bf{s}}}}}}}}}_{u,1},{{{{{{{{\bf{s}}}}}}}}}_{u,2},…,{{{{{{{{\bf{s}}}}}}}}}_{u,{D}_{u}}\}\) the set of the higher-order neighbors of node u.

We construct a hypergraph or a hypernetwork, denoted by \({{{{{{{\mathcal{G}}}}}}}}=(V,S)\), of system (1) under consideration. Here, V = {x1, x2, . . . , xN} denotes the set of nodes, corresponding to the state variables of the system. According to Definitions 1 & 2, we introduce the concept of the higher-order neighbors \({{{{{{{{\mathscr{S}}}}}}}}}_{u}\) of an arbitrary node u ∈ V, yielding the set of higher-order neighbors for all nodes \(S=\{{{{{{{{{\mathscr{S}}}}}}}}}_{{x}_{1}},{{{{{{{{\mathscr{S}}}}}}}}}_{{x}_{2}},…,{{{{{{{{\mathscr{S}}}}}}}}}_{{x}_{N}}\}\). Hereafter, for simplicity of notation’s usage, node u is used as a placeholder of any element in the set V.

To better elucidate these concepts, we directly utilize the Lorenz63 system as an illustrative example. As shown in “Explanation (1)” of Fig. 1, for the third node u = z in system (12), we write out

$$\dot{z}={f}_{3}(x,\, y,\, z)=-\beta z+xy=g(x,\, y,\, z)={g}_{1}(z)+{g}_{2}(x,\, y),$$

(7)

where g1(z) ≜ − βz, g2(x, y) ≜ xy, and Dz ≜ 2. Consequently, according to Definitions 1 & 2, the set of the higher-order neighbors of node u = z is \({{{{{{{{\mathscr{S}}}}}}}}}_{z}=\{{{{{{{{{\bf{s}}}}}}}}}_{z,1},\, {{{{{{{{\bf{s}}}}}}}}}_{z,2}\}=\{\{z\},\{x,y\}\}\). Similarly, we have \({{{{{{{{\mathscr{S}}}}}}}}}_{x}=\{{{{{{{{{\bf{s}}}}}}}}}_{x,1},\, {{{{{{{{\bf{s}}}}}}}}}_{x,2}\}=\{\{x\},\{y\}\}\) for node u = x and \({{{{{{{{\mathscr{S}}}}}}}}}_{y}=\{{{{{{{{{\bf{s}}}}}}}}}_{y,1},\, {{{{{{{{\bf{s}}}}}}}}}_{y,2}\}=\{\{y\},\{x,z\}\}\) for node u = y. Consequently, we obtain the higher-order structure of the Lorenz63 system as \({{{{{{{\mathcal{G}}}}}}}}=(V,\, S)=((x,\, y,\, z),({{{{{{{{\mathscr{S}}}}}}}}}_{x},\, {{{{{{{{\mathscr{S}}}}}}}}}_{y},\, {{{{{{{{\mathscr{S}}}}}}}}}_{z}))\).

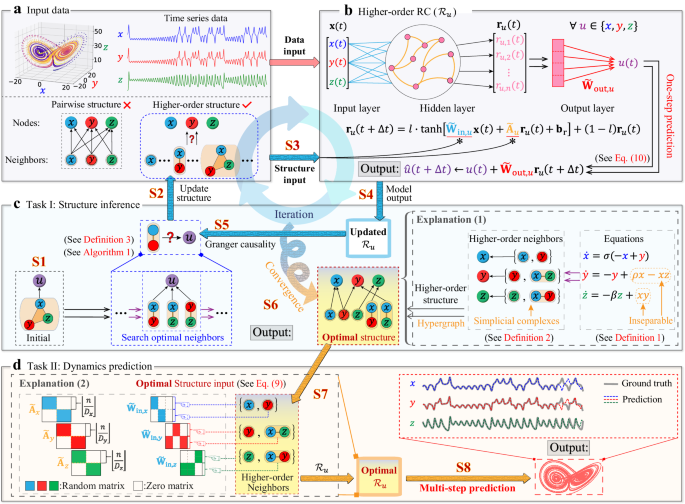

a The input data consists of time series data and higher-order structure information. b The new paradigm \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) using the higher-order structural information. c The HoGRC framework enables the inference of higher-order structures. d The HoGRC framework achieves multi-step dynamics prediction using the inferred optimal structure. The markers “S1”–“S8” correspond to the steps in Table 2. We offer “Explanation 1” to elucidate the concept of higher-order structures, and use “Explanation 2” to clarify the notion of the higher-order structure embedding. Due to the same process and the independently trained \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) for all nodes, it makes the HoGRC own the scalability or parallel merit40.

A paradigm of reservoir computing with structure input

Despite the tremendous success achieved by the traditional RC in dynamics predictions in many fields, a difficulty still lies in pushing for the limit of prediction accuracy while maintaining the low complexity of the model. We attribute this difficulty to a lack of direct utilization of the structural information from the underlying dynamical system, since the structure is an important component of the system. Actually, the PRC, the recent framework40 integrated pairwise structures to predict dynamics in complex systems. However, they cannot reveal the higher-order structures, a more precise representation of the complex interactions in complex dynamical systems.

Thus, we introduce a new computing paradigm into the RC, termed higher-order RC, to incorporate the time-series data with the higher-order structure to make accurate dynamics predictions. Specifically, as shown in Fig. 1b, we model each state variable (i.e., node u, as defined above) of the original system independently with a block of n neurons in a reservoir network. Then we incorporate the higher-order neighbors of node u into the corresponding RC, defined as \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\). Subsequently, inspired by but different from the classical RC method (2), the hidden dynamics in the higher-order \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) is given by

$${{{{{{{{\bf{r}}}}}}}}}_{u}(t+{{\Delta }}t)=(1-l)\cdot {{{{{{{{\bf{r}}}}}}}}}_{u}(t)+l\cdot \tanh \left[{\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}},u}{{{{{{{\bf{x}}}}}}}}(t)+{\tilde{{{{{{{{\boldsymbol{A}}}}}}}}}}_{u}{{{{{{{{\bf{r}}}}}}}}}_{u}(t)+{{{{{{{{\bf{b}}}}}}}}}_{{{{{{{{\rm{r}}}}}}}}}\right],$$

(8)

for different u ∈ V. Thus, we establish a total of ∣V∣ sub-RC networks, where ∣V∣ denotes the number of the elements in the set V. In contrast to the traditional RC method (2) that solely relies on a single random matrix Win and a single random matrix A without including any higher-order structural information, the framework (8) operates at node level, notably incorporating the corresponding higher-order structural information. Specifically for each node u ∈ V, this framework embeds the higher-order structural information directly into the matrices \({\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}},u}\) and \({\tilde{{{{{{{{\bf{A}}}}}}}}}}_{u}\) in the following forms:

$${\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}},u}={\left[{\psi }^{\top }({{{{{{{{\bf{s}}}}}}}}}_{u,1}),{\psi }^{\top }({{{{{{{{\bf{s}}}}}}}}}_{u,2}),…,{\psi }^{\top }({{{{{{{{\bf{s}}}}}}}}}_{u,{D}_{u}})\right]}^{\top }\in {{\mathbb{R}}}^{n\times N},\quad \psi ({{{{{{{{\bf{s}}}}}}}}}_{u,i})\in {{\mathbb{R}}}^{\lfloor n/{D}_{u}\rfloor \times N},\\ {\tilde{{{{{{{{\bf{A}}}}}}}}}}_{u}={{{{{{{\rm{diag}}}}}}}}\{\varphi ({{{{{{{{\bf{s}}}}}}}}}_{u,1}),\varphi ({{{{{{{{\bf{s}}}}}}}}}_{u,2}),…,\varphi ({{{{{{{{\bf{s}}}}}}}}}_{u,{D}_{u}})\}\in {{\mathbb{R}}}^{n\times n},\quad \varphi ({{{{{{{{\bf{s}}}}}}}}}_{u,i})\in {{\mathbb{R}}}^{\lfloor n/{D}_{u}\rfloor \times \lfloor n/{D}_{u}\rfloor },$$

(9)

where ⌊ ⋅ ⌋ is the floor function, and the integer n is selected as a multiple of Du. Different from Win, a randomly initialized matrix in its entirety, in the traditional RC framework (2), each ψ(su,i) in \({\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}},u}\) is a random (resp., zero) block submatrix of dimension ⌊n/Du⌋ × N such that, if xj ∈ (resp., ∉ )su,i for j ∈ {1, 2, . . . , N}, all elements of the j-th column of ψ(su,i) are set as random values (resp., zeros), and φ(su,i) represents a random sparse submatrix of dimension ⌊n/Du⌋ × ⌊n/Du⌋. Actually, these block configurations in the reservoir facilitate a more precise utilization of the higher-order structural information.

To enhance the transparency of the above configurations, we provide a visual representation in “Explanation (2)” of Fig. 1, where depicted is the true higher-order RC structure (i.e., the optimal network finally obtained in the following inference task, see the next subsection) under consideration of the Lorenz63 system. Specifically, as mentioned above, for node u = z, the set of the higher-order neighbors becomes {{z}, {x, y}} with Dz = 2. Thus, we obtain \({\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}},z}={[{\psi }^{\top }(z),{\psi }^{\top }[(x,y)]]}^{\top }\) according to the notations set in (9), where the third column of ψ⊤(z) and the first and the second columns of ψ⊤[(x, y)] are the random sparse submatrices, and the remaining parts are zero submatrices. Moreover, we obtain \({\tilde{{{{{{{{\bf{A}}}}}}}}}}_{z}={{{{{{{\rm{diag}}}}}}}}\{\varphi (z),\, \varphi [(x,y)]\}\), which is a block diagonal matrix comprising two random sparse submatrices. Additionally, we provide a simple illustrative example about the difference between the traditional RC method (2) and the newly proposed higher-order RC framework (8) in Supplementary Note 1.3.

Now, by embedding the higher-order structural information into the dynamics of the reservoir in the above manner, we obtain the n-D hidden state \({{{{{{{{\bf{r}}}}}}}}}_{u}(t)={[{r}_{u,1},{r}_{u,2},…,{r}_{u,n}]}^{\top }(t)\) for each u ∈ V. This allows us to predict the system’s state u in the next time step as

$$\hat{u}(t+{{\Delta }}t)=u(t)+{\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{out}}}}}}}},u}{{{{{{{{\bf{r}}}}}}}}}_{u}(t+{{\Delta }}t),$$

(10)

where \({\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{out}}}}}}}},u}\) represents an output matrix of dimension 1 × n, employed for the prediction of u.

Significantly, our framework fully inherits the parallel merit of the existing work40. In particular, the above process operates at the node level, focusing exclusively on every node u, and such a process can be applied across all nodes in V. Different from the classical RC, we use a specific higher-order \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) to model each node u, thereby requiring a smaller reservoir size n or resulting in a lightweight model. Moreover, since all lightweight reservoirs \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\,(u\in V)\) are independently trained, our framework can be efficiently processed in a parallel manner, which in turn makes our framework scalable to higher-dimensional systems.

Integration of structure inference and dynamics prediction

In the preceding section, the setup of the higher-order \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) requires the exact information of the structures. However, in real-world scenarios, the specific form as well as the higher-order structures of a system are always unknown before the setup of \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\). So, we design an iterative algorithm to seek the optimal structure for \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) which is initially endowed with a structure containing all possible candidates or only partially known information. To carry out this design, we novelly integrate the concept of the Granger causality (GC) into the higher-oder \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) (see Table 1). Subsequently, the inferred structures are utilized to update \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\), thereby further enhancing its prediction performance. This iterative procedure is repeated until the model achieves optimal prediction accuracy. Consequently, we refer to this integrated model as the HoGRC framework, as depicted in the composite of Fig. 1a-d.

Particularly, we develop an efficient greedy strategy, as outlined in Table 1 of the Methods section, to infer the true higher-order structure of system (1) solely from the time series data. As shown in Fig. 1c, for any node u, we employ the one-step prediction error of \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) based on the concept of the GC (see Definition 3) to iteratively refine the initial and coarse-grained candidate neighbors into the optimal and fine-grained higher-order neighbors, until an optimal structure is obtained, tending to align with the true higher-order structure defined in Definition 2. In the iterative procedure, the GC inference and the dynamics prediction using \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) are complementarily and mutually reinforcing. As depicted by the blue loop in Fig. 1, the structure discovered by the GC significantly enhances the predictability of \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\), and conversely, the updated \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) in the iterative procedure makes the GC discover the structure in a more effective manner.

Furthermore, as indicated by the orange arrows in Fig. 1d, we obtain the optimal \({{{{{{{{\mathcal{R}}}}}}}}}_{u}\) for all nodes u based on the input of the optimal higher-order structure. Then, these optimal models can perform multi-step prediction by continually adding the most recent forecasted values to the input data, which significantly outperforms the traditional prediction methods. Therefore, the HoGRC framework, integrating the node-level RC and the GC inference, simultaneously achieve two functions: (I) structures inference (Fig. 1c) and (II) dynamics prediction (Fig. 1d). To enhance comprehension of the HoGRC workflow, we provide a summary of the key execution steps in Table 2, where the steps correspond to the markers “S1″–”S8″ in Fig. 1. For more detailed information about the HoGRC framework, please refer to Methods section.

Evaluation metrics

To demonstrate the efficacy of the two tasks achieved by the proposed framework, we conduct experiments using several representative systems from different fields. For Task (I), we utilize the one-step extrapolation prediction error produced by the HoGRC framework to search the higher-order neighbors of all dimensions in order to identify the higher-order structure with higher accuracy. For Task (II), we test the classical RC, the PRC40, and the HoGRC, respectively, on several representative dynamical systems and compare their prediction performances (see Methods section for the differences among these three methods). For a clearer illustration, we define the valid predictive steps (VPS) as the predictive time steps when the prediction accuracy exceeds a certain threshold. Additionally, we adopt the root mean square error (RMSE) as a metric to quantitatively evaluate the prediction error,

$${{{{{{{\rm{RMSE}}}}}}}}(t)=\sqrt{\frac{1}{N}\mathop{\sum }\limits_{i=1}^{N}{\left[\frac{{\hat{x}}_{i}(t)-{x}_{i}(t)}{{\sigma }_{i}}\right]}^{2}},$$

(11)

where σi is the standard deviation of xi(t). In our work, we use the VPS to evaluate the prediction performance of the HoGRC, i.e., \({{{{{{{\rm{VPS}}}}}}}}=\inf \{s:{{{{{{{\rm{RMSE}}}}}}}}(s{{\Delta }}t) \, > \, {\epsilon }_{{{{{{{{\rm{r}}}}}}}}}\}\), where ϵr is the positive threshold and Δt is the time step size. In the following numerical simulations, without a specific statement, we always set ϵr = 0.01.

Performances in representative dynamical systems

Here, we aim to demonstrate the effectiveness of the HoGRC framework using several representative dynamical systems. We take a 3-D Lorenz63 system and a 15-D coupled Lorenz63 system as examples. Additional experiments for more systems are included in Supplementary Note 2.

First, we consider the Lorenz63 system56 which is a typical chaotic model described by the following equations:

$$\dot{x}= {f}_{1}(x,y,z)=\sigma (y-x),\\ \dot{y}= {f}_{2}(x,y,z)=\rho x-y-xz,\\ \dot{z}= {f}_{3}(x,y,z)=-\!\beta z+xy,$$

(12)

where σ, β, ρ are system parameters. In the simulations, we take the first 60% of the data generated by the system as the training set, and reserve the remaining data for testing purposes.

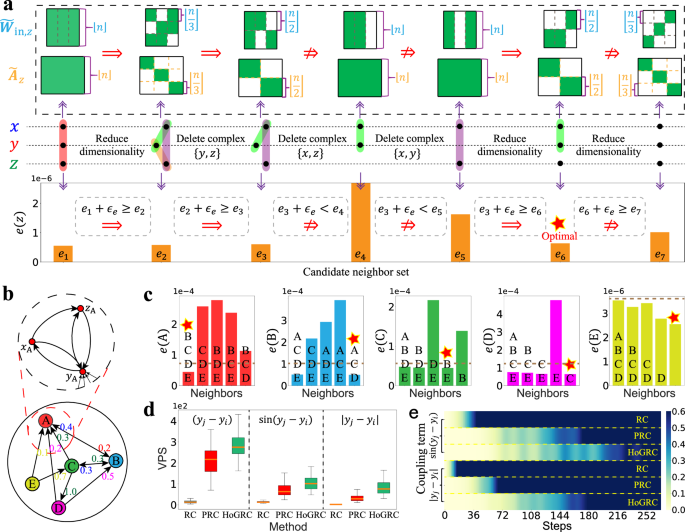

We begin our analysis by using the proposed method to identify the higher-order neighbors of the considered system. All the other hyperparameters of the RC, the PRC, and the HoGRC are specified, respectively, in Supplementary Note 3. Subsequently, we employ Algorithm 1 of Table 1 to infer the higher-order neighbors of all nodes in the Lorenz63 system. Specifically, Fig. 2a presents an inference process for node z using Algorithm 1 of Table 1, a greedy strategy. At the beginning, when no information regarding the network structure is available, the set of the higher-order neighbors for node z is initially assigned as \({{{{{{{{\mathscr{C}}}}}}}}}_{z}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{0}=\{\{x,\, y,\, z\}\}\). Thus, \({\tilde{{{{{{{{\bf{W}}}}}}}}}}_{{{{{{{{\rm{in}}}}}}}},z}\) and \({\tilde{{{{{{{{\bf{A}}}}}}}}}}_{z}\), the input and the adjacency matrices, are constructed with \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{0}\), and \({{{{{{{{\mathcal{R}}}}}}}}}_{z}^{0}\), the corresponding higher-order RC, is utilized to calculate the one-step prediction error e(z), designated as e1. Next, one needs to decide whether to rectify \({{{{{{{{\mathscr{C}}}}}}}}}_{z}\) by reducing the dimensionality based on Algorithm 1 of Table 1. To do so, set \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{1}=\{\{x,\, y\},\{y,\, z\},\{x,\, z\}\}\), and then the prediction error e2 is obtained using \({{{{{{{{\mathcal{R}}}}}}}}}_{z}^{1}\) with \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{1}\). Here, by setting a small threshold ϵe (e.g., 10−7), it is found that e1 + ϵe ≥ e2, which implies a prediction promotion and thus, results in a resetting \({{{{{{{{\mathscr{C}}}}}}}}}_{z}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{1}\) based on Definition 3. Then, one needs to decide whether to delete any element, e.g. {y, z}, in the current set \({{{{{{{{\mathscr{C}}}}}}}}}_{z}\). To do so, set \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{2}=\{\{x,y\},\{x,z\}\}\). Thus, the prediction error e3 is obtained using \({{{{{{{{\mathcal{R}}}}}}}}}_{z}^{2}\) with \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{2}\), which further yields e2 + ϵe ≥ e3. This prediction promotion leads us to reset \({{{{{{{{\mathscr{C}}}}}}}}}_{z}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{2}\). However, as the sets \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{3}=\{\{x,z\}\}\) and \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{4}=\{\{x,\, z\}\}\) are, respectively, taken into account, e3 + ϵe < e4 and e3 + ϵe < e5 are obtained using \({{{{{{{{\mathcal{R}}}}}}}}}_{z}^{3}\) with \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{3}\) and \({{{{{{{{\mathcal{R}}}}}}}}}_{z}^{4}\) with \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{4}\), respectively. These inequalities indicate that there is no improvement in prediction and, consequently, no rectification needed for the set \({{{{{{{{\mathscr{C}}}}}}}}}_{z}\) at this stage. Therefore, the set should remain unaltered as \({{{{{{{{\mathscr{C}}}}}}}}}_{z}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{2}\). In what follows, one still needs to decide whether to further rectify \({{{{{{{{\mathscr{C}}}}}}}}}_{z}\) by reducing the dimensionality based on Algorithm 1 of Table 1. To do so, set \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{5}=\{\{x,y\},\{z\}\}\). Thus, e6 and e3 + ϵe ≥ e6 are obtained, which leads us to further reset \({{{{{{{{\mathscr{C}}}}}}}}}_{z}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{5}\). As suggested in Fig. 2, prediction is not improved by further reducing the dimensionality of \({{{{{{{{\mathscr{C}}}}}}}}}_{z}\) as \({{{{{{{{\mathscr{C}}}}}}}}}_{z}^{6}=\{\{x\},\{y\},\{z\}\}\). This, with the greedy strategy we use, indicates an iteration terminal for inferring the higher-order neighbors with an output \({{{{{{{{\mathscr{S}}}}}}}}}_{z}={{{{{{{\mathscr{C}}}}}}}}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{5}\). Here, actually \({{{{{{{{\mathcal{R}}}}}}}}}_{z}^{5}\) with \({{{{{{{{\mathscr{S}}}}}}}}}_{z}={{{{{{{{\mathscr{C}}}}}}}}}_{z}^{5}\) after training is the optimal higher-order RC of dynamics reconstruction and prediction for the state of node z. In addition, the inferred results of nodes x and y can be found in Supplementary Note 4.1.

a The successively iterative results on higher-order neighbors inference for node z using Algorithm 1 in Table 1, where the red pentagram indicates the inferred higher-order neighbors \({{{{{{{{\mathscr{S}}}}}}}}}_{z}\). b The underlying coupling network of the CL63 system. c The inferred coupling neighbors for each subsystem of the CL63 system. d, e The average number of predictable steps and average prediction error of different methods for the nonlinear coupling cases, respectively. The orange line in the middle of the box represents the median, the upper and lower boundaries of the box represent the upper and lower quartiles, respectively. The boundaries of the upper and lower whiskers represent the maxima and minima, respectively. We set parameters as σ = 10, ρ = 28 and β = 8/3. Here, we use a time-step size Δt = 0.02 and a time-step number T = 5000.

In Task (II), we perform multi-step prediction using different methods, we find that the HoGRC framework yields the best prediction despite utilizing information solely from higher-order neighbors (see Supplementary Note 4.1). Additionally, in Supplementary Note 2, we also conduct similar experiments using other classic chaotic systems. Our findings indicate that systems with stronger nonlinearity and more complex structures tend to exhibit better prediction performance using the HoGRC framework.

Next, we investigate the coupled Lorenz63 (CL63) system34 with a more complex structure and stronger nonlinear interactions, in which the dynamical behaviors of each subsystem is described by:

$${\dot{x}}_{i} =-\sigma \left[{x}_{i}-{y}_{i}+\gamma \mathop{\sum }\limits_{j =1}^{m}{w}_{ij}{g}_{ij}({y}_{i},{y}_{j})\right],\\ {\dot{y}}_{i} =\rho (1+{h}_{i}){x}_{i}-{y}_{i}-{x}_{i}{z}_{i},\,\,{\dot{z}}_{i}={x}_{i}{y}_{i}-\beta {z}_{i},$$

(13)

where m denotes the number of the subsystems, hi is the scale of the i-th subsystem, γ represents the coupling strength, wij is the coupling weight, and gij denotes the coupling function. We consider a 15-dimensional CL63 system with 5 subsystems, and the structure and the coupling weights are depicted in Fig. 2b. We generate data with the coupling strength γ = 0.5 and the coupling function gij = (yj − yi). Based on this data, we calculate the Lyapunov Exponents (LE’s) of the system (see Supplementary Note 4.2), which suggests a higher-degree complexity emerging in the system, as more than half of the LEs are positive.

Our HoGRC framework considers complexes {yi} and {yj} as the higher-order neighbors of xi if subsystem j has a coupling effect on i. Thus, by virtue of Definition 3, we are able to infer such a coupling relationship between any two subsystems. As depicted in Fig. 2c, we initially present the one-step prediction error for any subsystem i, considering all four other subsystems are treated as neighbors. Subsequently, we proceed to present the prediction errors when each neighboring subsystem is individually removed. The experimental results demonstrate that with the removal of subsystem j, the stronger the coupling effect of subsystem j on i, the worse the prediction performance of subsystem i is. This enables us to directly infer the true interaction network among subsystems (marked by the red pentagrams).

For our second task, we perform multi-step predictions on the CL63 system using different methods. We randomly select 50 points from the testing data as starting points and use the predictable steps to quantify the prediction performances for the various methods. Figure 2d displays a boxplot of the predictable steps for various methods on 50 testing sets. The results clearly indicate that the HoGRC framework outperforms the other two methods, highlighting its superior ability in the extrapolation prediction. Furthermore, we extend our analysis by generalizing the linear coupling term gij = (yj − yi) to two more nonlinear forms, namely \(\sin ({y}_{j}-{y}_{i})\) and ∣yj − yi∣. Correspondingly, we include the complex {yi, yj} in the higher-order neighbors of xi. The heatmap of the prediction errors along with the time steps for various methods is illustrated in Fig. 2e. Combining with Fig. 2d, it becomes apparent that the HoGRC framework maintains its superiority in terms of prediction performance.

Investigations on network dynamics

In recent years, network dynamical systems (NDS) have gained significant attention for their broad range of applications. As a special form of system (1), NDS often exhibits a higher number of dimensions and more complex structural information. Therefore, our framework has become an efficient tool for NDS’s structural inference and dynamic prediction. Generally, NDS’s dynamics are modeled as:

$${\dot{{{{{{{{\bf{x}}}}}}}}}}_{i}=F({{{{{{{{\bf{x}}}}}}}}}_{i})+\gamma \mathop{\sum }\limits_{j=1}^{m}{\omega }_{ij}G({{{{{{{{\bf{x}}}}}}}}}_{i},\, {{{{{{{{\bf{x}}}}}}}}}_{j}),$$

(14)

where \({{{{{{{{\bf{x}}}}}}}}}_{i}={({x}_{i}^{1},\ldots,{x}_{i}^{N})}^{\top }\) denotes the N-D state of the i-th subsystem, F represents the self-dynamics, G represents the interaction dynamics, γ is the coupling strength, wij is the interaction weight of subsystem j to i. Before presenting the results of our numerical investigations, we first make three remarks. (i) Since the HoGRC framework is a node-level based method, here we set the coupling network structure between any two subsystems as depicted in Fig. 2d. (ii) A very small coupling strength implies a weak coupling effect on the dynamics, while sufficiently strong coupling tends to increase predictability due to a high probability of synchronization occurrence (see Supplementary Note 4.6 for details). Therefore, in our investigations, we selected a moderate level of coupling strength to increase prediction difficulty. (iii) In addition to the RC and the PRC methods, we use two recently proposed powerful methods, namely the Neural Dynamics on Complex Network (NDCN)15 and the Two-Phase Inference (TPI)16, as the baseline methods for NDS predictions. The NDCN combines the graph neural networks with differential equations to learn and predict complex network dynamics, while the TPI automatically learns some basis functions to infer dynamic equations of complex system behavior for network dynamics prediction. Refer to Supplementary Note 5 for further details.

We first consider the coupled FitzHugh–Nagumo system (FHNS)57 that describes the dynamical activities of a group of interacted neurons with

$$F({{{{{{{{\bf{x}}}}}}}}}_{i})= F({x}_{i}^{1},\, {x}_{i}^{2})={\left({x}_{i}^{1}-{({x}_{i}^{1})}^{3}-{x}_{i}^{2},\, a+b{x}_{i}^{1}+c{x}_{i}^{2}\right)}^{\top },\\ G({{{{{{{{\bf{x}}}}}}}}}_{i},\, {{{{{{{{\bf{x}}}}}}}}}_{j})= G({x}_{i}^{1},\, {x}_{j}^{1})=\frac{1}{{k}_{i}^{{{{{{{{\rm{in}}}}}}}}}}({x}_{i}^{1}-{x}_{j}^{1}),$$

(15)

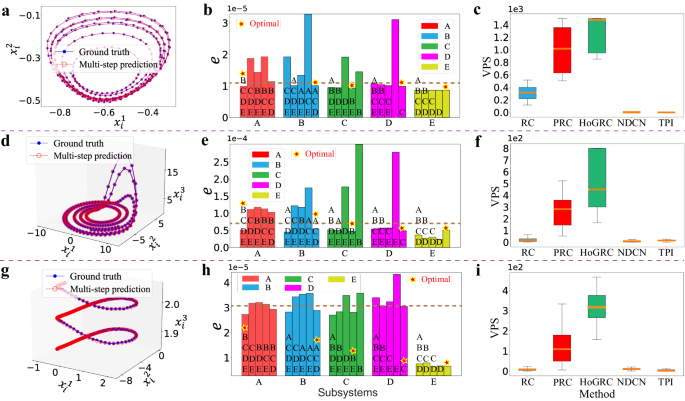

in network dynamics (14). Here, we set γ = 0.5, a = 0.28, b = 0.5, c = −0.04, and m = 5 to generate experimental data. As shown in Fig. 3a, the trajectory predicted by our HoGRC framework closely matches the true trajectory of the FHNS system. In task (I), we begin by examining the inference of the coupling network among subsystems. Figure 3b displays the prediction errors for each subsystem under different coupling structures. The bar chart above includes multiple letters indicating the candidate neighbors of the corresponding subsystem. It is evident that the inferred coupling structures, illustrated with red pentagrams, align with our initial setting. Furthermore, in Supplementary Note 4.3, we provide the inference of higher-order neighbors for individual nodes within the subsystem as well, which further validates the effectiveness of our method. For task (II), we conduct the multi-step prediction experiments and compared our results to the baseline methods on 50 testing sets. The results, depicted in Fig. 3c, demonstrate that our method outperforms the other methods in terms of the extrapolation prediction performance.

The corresponding results using the HoGRC framework and two other methods for the FHNS (a–c), CRoS (d–f), and CsH2S (g–i) network systems. The orange line in the middle of the box represents the median, the upper and lower boundaries of the box represent the upper and lower quartiles, respectively. The boundaries of the upper and lower whiskers represent the maxima and minima, respectively. The experimental data for these systems are generated by setting T = 5000 and using Δt = 0.25, 0.1, and 0.04, respectively.

We also investigate two other network dynamics, namely the coupled Rossler system (CRoS)58 and the coupled simplified Hodgkin-Huxley system (CsH2S)59. The CRoS has the form

$$F({{{{{{{{\bf{x}}}}}}}}}_{i})= F({x}_{i}^{1},\, {x}_{i}^{2},\, {x}_{i}^{3})={\left(-{h}_{i}{x}_{i}^{2}-{x}_{i}^{3},\, {h}_{i}{x}_{i}^{1}+a{x}_{i}^{2},\, b+{x}_{i}^{3}({x}_{i}^{1}+c)\right)}^{\top },\\ G({{{{{{{{\bf{x}}}}}}}}}_{i},\, {{{{{{{{\bf{x}}}}}}}}}_{j})= G({x}_{i}^{1},\, {x}_{j}^{1})={x}_{j}^{1}-{x}_{i}^{1},$$

(16)

in network dynamics (14), with hi representing the scale of the i-th subsystem, and with a = 0.2, b = 0.2, c = − 6, γ = 1 and m = 5. The CsH2S has the form

$$F({{{{{{{{\bf{x}}}}}}}}}_{i})= F({x}_{i}^{1},{x}_{i}^{2},{x}_{i}^{3}) \\ = {\left({x}_{i}^{2}-a{({x}_{i}^{1})}^{3}+b{({x}_{i}^{1})}^{2}-{x}_{i}^{3}+{I}_{{{{{{{{\rm{ext}}}}}}}}},c-u{({x}_{i}^{1})}^{2}-{x}_{i}^{2},\, r[s({x}_{i}^{1}-{x}_{0})-{x}_{i}^{3}]\right)}^{\top },\\ G({{{{{{{{\bf{x}}}}}}}}}_{i},{{{{{{{{\bf{x}}}}}}}}}_{j})= G({x}_{i}^{1},\, {x}_{j}^{1})= ({V}_{{{{{{{{\rm{syn}}}}}}}}}-{x}_{i}^{1})\cdot \mu ({x}_{j}^{1}),\,\mu (x)=\frac{1}{1+{{{{{{{{\rm{e}}}}}}}}}^{-\lambda (x-{{{\Omega }}}_{syn})}},$$

(17)

in network dynamics (14), with a = 1, b = 3, c = 1, u = 5, s = 4, r = 0.005, x0 = − 1.6, γ = 0.1, Vsyn = 2, λ = 10, Ω = 1, Iext = 3.24, and m = 5. The investigation results, respectively, presented in Fig. 3d–f, g–i, suggest that our HoGRC framework possesses extraordinary capability in dynamics reconstructions and predictions using the inferred information of higher-order structures. It is noted that, in the examples above, the performances of the NDCN and the TPI are not satisfactory. This is because the NDCN is a network-level method that may not achieve good performance in complex nonlinear systems, and because the interaction function weights wij in front of G(xi, xj) are different, so the TPI method cannot learn the accurate basis function (refer to Supplementary Note 5 for the detailed illustration).

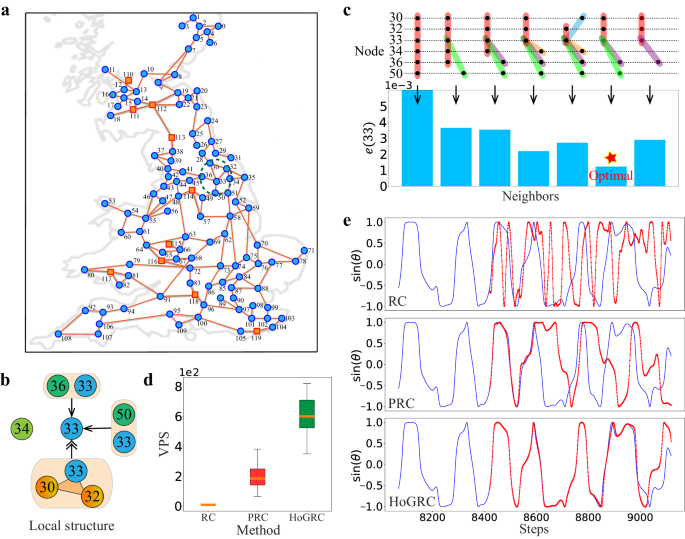

Application to the UK power grid system

Finally, we apply the HoGRC framework to a real power system. We choose the UK power grid60 as the network structure, which includes 120 units (10 generators and 110 consumers) and 165 undirected edges, as shown in Fig. 4a. To better describe the power grid dynamics, we consider a more general Kuramoto model with higher-order interactions61, which can be represented as:

$${\dot{\theta }}_{i}={\omega }_{i}+{\gamma }_{1}\mathop{\sum }\limits_{j=1}^{N}{A}_{ij}\sin ({\theta }_{j}-{\theta }_{i})+{\gamma }_{2}\mathop{\sum }\limits_{j=1}^{N}\mathop{\sum }\limits_{k=1}^{N}{B}_{ijk}\sin ({\theta }_{j}+{\theta }_{k}-2{\theta }_{i}),$$

(18)

where θi and ωi denote the phase and natural frequency of the ith oscillator respectively, γ1 and γ2 are the coupling strengths, while pairwise and higher-order interactions are encoded in the adjacency matrix A and adjacency tensor B. Under specific coupling settings, this kind of system exhibits extremely complex chaotic dynamics rather than synchronization.

a The UK power grid. b Local coupling structure of node 33. c Higher-order neighbors inference of node 33. d The average predictable steps of the entire system in the test set. The orange line in the middle of the box represents the median, the upper and lower boundaries of the box represent the upper and lower quartiles, respectively. The boundaries of the upper and lower whiskers represent the maxima and minima, respectively. e Extrapolation prediction of node 33 under different methods, with the true value shown in blue and the predicted value in red. We set T = 10,000, Δt = 0.08, γ1 = 0.4, and γ2 = 0.4.

Due to the special form of this model and the prediction challenges posed by higher-order terms, we need to apply a special treatment when using the HoGRC framework. We take the 2-D data \((\sin (\theta (t)),\cos (\theta (t)))\) as the input of the HoGRC framework at time t and Δθ = (θ(t + 1) − θ(t))/Δt as the output. Therefore, the predicted value in the next step is \(\hat{\theta }(t+1)={{\Delta }}\theta {{\Delta }}t+\theta (t)\). Thus, in multi-step prediction tasks, we can use the predicted value \((\sin (\hat{\theta }(t+1)),\cos (\hat{\theta }(t+1)))\) as the input for iterative prediction. For fairness, the RC and PRC methods also adopt the same treatment in the subsequent comparative tests.

To verify the advantages of our method, we consider the higher-order interactions which are constructed by identifying each distinct triangle from the UK power grid and generated data for the experiment, and Fig. 4b shows the local coupling network of node 33 (see Supplementary Note 4.4 for details of all higher-order interactions). Figure 4c shows the one-step prediction error for cases with different neighbors. We observe that the real higher-order neighbors correspond to the lowest prediction error. In the prediction task, our method outperforms the RC and PRC methods (see Fig. 4d, e), thanks to the structural complexity and high nonlinearity of the model, which make traditional methods prone to overfitting. Our method can learn the real dynamics of the system, leading to accurate predictions over a longer range.

Different role of noise perturbation

Noise perturbation is a major factor that can affect the efficacy of any method in dealing with data. Hence, to demonstrate the robustness of our method against noise perturbations, we introduce noises of different intensities into the generated data.

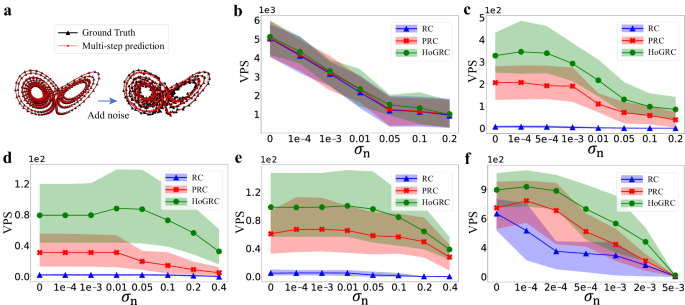

In particular, we use Gaussian noise with zero mean and standard deviation σn to introduce noise into the data. Empirically, due to the presence of noise, we increase the threshold ϵr to 0.03. Figure 5a shows the prediction performances for cases without and with added noise. With a certain level of noise intensity, such as σn = 0.2, our method is able to infer higher-order neighbors for both the Lorenz63 system and the CL63 system (refer to Supplementary Note 4.5 for specific details). Figure 5b–e shows the prediction performances when increasing noise intensity for the Lorenz63 and CL63 systems, while Fig. 5f shows the results for the hyperchaotic system (see Supplementary Note 2.2). Clearly, our method works robustly on data with noise intensity in a certain range.

a Dynamics reconstruction and prediction with and without noise for the Lorenz63 system. Prediction performances using different methods change with the noise intensity for the Lorenz63 system (b), for the CL63 system (c–e), where the corresponding coupling terms are selected, respectively, as (yj − yi), \(\sin ({y}_{j}-{y}_{i})\), and ∣yj − yi∣), and for the hyperchaotic system (f).

To be candid, the excessive noise can adversely affect the accuracy of predictions across various examples. However, we interestingly find that in some cases, a moderate amount of noise can promote predictions, as shown in Fig. 5c–f. This type of noise can enhance the generalization ability of our method, especially when the HoGRC framework experiences overfitting issues even after sufficient training. If the structures or dynamics of the learned dynamical system are not too complex, the HoGRC framework after training can approximate the original dynamics with high fidelity. Nevertheless, noise generally has a negative effect.

Influence of training set sizes and coupling network

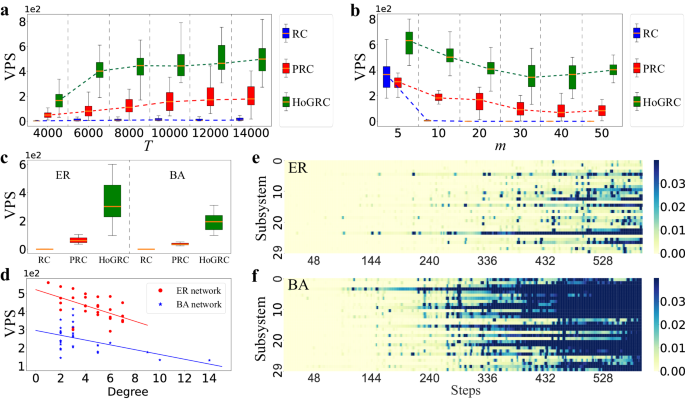

Training set sizes and network structures are factors that significantly influence dynamic predictions. Typically, machine learning methods learn and predict unknown dynamics better with larger training set sizes or simpler network structures. Although all methods follow this general rule, our HoGRC method still has several advantages. To demonstrate this, we conduct the following numerical experiments.

On one hand, we use CRoS as an example to generate experimental data with different time lengths (other settings are the same as above). As shown in Fig. 6a, increasing the training data size initially improves prediction accuracy, which then levels off. Our method outperforms baseline methods even with a sufficient amount of training data, suggesting that our method can learn dynamics with fewer data points and more accurately capture real dynamical mechanisms. On the other hand, we investigate the impact of different network structures. We begin by considering regular networks with varying numbers of subsystems and generate experimental data using CRoS with a length of 5000 and Δt = 0.1. As shown in Fig. 6b, the network scale does affect the prediction accuracy in that, for a long-term prediction task, the prediction failure of one subsystem in the network can impact the prediction of the other subsystems via its neighbors. Compared to baseline methods, our method is less affected by network size and presents better predictability for large-scale systems. These advantages persist when considering the Erdös–Rényi (ER) networks62 and the Barabasi-Albert (BA) networks63 containing 30 subsystems, as demonstrated in Fig. 6c, e, f. Here, the average degrees of the regular, the ER, and the BA networks, respectively, are 2, 2.2, and 1.87. We randomly generate the coupling weights connecting every two subsystems in these networks.

a The prediction performances with different training set sizes. b The prediction performances in the regular networks with varying numbers of subsystems. c The prediction performances in ER and BA networks with 30 subsystems. The orange line in the middle of the box represents the median, the upper and lower boundaries of the box represent the upper and lower quartiles, respectively. The boundaries of the upper and lower whiskers represent the maxima and minima, respectively. d The predictable steps change with the degree of the subsystems, respectively, for ER and BA networks. e, f The prediction errors of the subsystems change with the time evolution using the HoGRC framework for the ER and the BA networks, respectively.

Additionally, from Fig. 6e, f, we interestingly find that, under the same average degrees, predicting the system using the BA network seems to be more difficult, while using the regular network makes prediction much easier. This finding is understandable since the degree distribution of the BA network follows a power law distribution, which creates more complex structures and more fruitful dynamics in the system. To further verify this finding, we use the degree of subsystems as an indicator to reveal the complexity of subsystems and depict different negative correlations between the number of predictable steps and the degree of each subsystem for different network settings, as shown in Fig. 6d.

Direct and indirect causality

In our framework, the GC inference and the RC prediction are performed simultaneously and complement each other. Notably, the HoGRC framework does not require precise learning of the system structure through GC. Instead, our framework focuses on optimizing the coupling structures to further maximize the prediction accuracy. As a result, both direct and indirect causality can be inferred in the inference task. Despite this, our framework consistently and accurately infers the high-order structures in multiple experiments conducted in this study (see Supplementary Note 1.4 for specific reasons).

To further identify the direct and indirect causality, we can extend our HoGRC framework by combining it with the existing methods. In particular, we propose two strategies: (1) conditional Granger causality and (2) further causal identification. We provide the details of the above two strategies and experimental validation in Supplementary Note 1.4. The experimental results demonstrate the high flexibility and generality of our framework, enabling it to identify direct and indirect causality in conjunction with some existing techniques.

Wanda Parisien is a computing expert who navigates the vast landscape of hardware and software. With a focus on computer technology, software development, and industry trends, Wanda delivers informative content, tutorials, and analyses to keep readers updated on the latest in the world of computing.