The dataset

The datasets used for model training and for evaluation of the methodology were generated from videos recorded on June 27, 2020, on commercial Nephrops grounds in Skagerrak using an underwater stereo camera system13,20. The YOLO models require images with bounding box annotations for training. Therefore, a dataset of 4044 images was generated from the videos recorded during the hauls. The images in the dataset were selected according to the presence of Nephrops or other catch items. Of these images, 12.5% were randomly selected as a test set, and the remainder was supplemented with 1000 additional images generated using copy-paste augmentation to form the training set28.

On the other hand, the ultimate performance assessment of the methodology should be performed on videos, rather than images, to evaluate overall processing speed and Nephrops counting accuracy. For this purpose, five videos that do not have any Nephrops objects in common with those in the training set were selected. Each video has a different duration, varying Nephrops ground truth counts, and different object densities, providing diverse scenarios that better represent the cases in real applications29. More information on the videos is available in Table 1. Since the performance of the proposed method depends on the Nephrops distribution throughout the videos, frame-based details (such as ratios of frames with Nephrops and ranges of frame numbers for each Nephrops presence) for the test videos are provided in the Supplementary Information file. The stereo camera was set to record videos with a resolution of 1280 × 720 pixels at 60 frames per second (FPS), and only the videos from the right camera were processed. For benchmarking purposes, both the image and video datasets are the same as those used in Avsar et al.26.

Deep learning models for Nephrops detection

Two recent versions of YOLO, YOLOv7 and YOLOv8, are used in this study to perform the object detection task. YOLO uses a single-stage object detection approach; hence, the models of the YOLO family are known to be fast and accurate. In general, YOLO architectures consist of three major building blocks: a backbone, a neck, and a prediction head. YOLOv7 uses novel computational units in its backbone called extended efficient layer aggregation networks (E-ELAN)30. E-ELAN units enable improved learning through expand, shuffle, and merge cardinality operations in their structure while keeping the original gradient transmission path. In addition, YOLOv7 features module re-parameterization, meaning that some sets of model weights are averaged to enhance model performance. In the prediction head of YOLOv7, an auxiliary head is introduced to assist training and eventually achieve better predictions by the lead head. The technical details of the experiments and ablation studies on the final model structure are available in the YOLOv7 paper30. A tiny version of YOLOv7, YOLOv7-Tiny, was also developed for use on edge GPU devices. Unlike the YOLOv7 model, YOLOv7-Tiny uses the Rectified Linear Unit (ReLU) as the activation function and has fewer computational layers, which also reduces the number of parameters.

In the backbone of YOLOv8, a cross-stage partial (CSP) network is used, which allows concatenation of features from different hierarchical levels. The use of anchor boxes with predefined aspect ratios has been a bottleneck for both the speed and the accuracy of YOLO models. YOLOv8 eliminates the need for anchor boxes at the prediction phase by detecting the object center directly. As another improvement, it includes specific convolutional units called C2f modules that enable better gradient flow during learning. The number of residual connections in the C2f modules, as well as the number of channels in the intermediate convolutional layers, can be adjusted by depth and width multipliers, respectively. In other words, these two hyperparameters customize the feature extraction capability and the number of parameters of the YOLO model, which makes it possible to adjust the processing speed and detection accuracy of the model. Accordingly, five different versions have been made available in the public repository of YOLOv8: nano (n), small (s), medium (m), large (l), and extra-large (xl). YOLOv8 can be regarded as an improved version of YOLOv5; however, neither of these models has an associated paper in which the model details are explained31.
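To make the role of the two multipliers concrete, the following is a minimal sketch of how a width multiplier could scale a channel count and a depth multiplier could scale a repeat count. The function name `scale_layer`, the rounding of channels up to a multiple of 8, and the multiplier values used in the test are assumptions made for illustration; they are not taken from the YOLOv8 repository.

```python
import math
from typing import Tuple


def scale_layer(base_channels: int, base_repeats: int,
                width: float, depth: float, divisor: int = 8) -> Tuple[int, int]:
    """Scale one block's channel count and repeat count (illustrative only).

    width  -- multiplier applied to the number of channels
    depth  -- multiplier applied to the number of repeated units
    """
    # Assumption: channels are rounded up to a multiple of `divisor`.
    channels = math.ceil(base_channels * width / divisor) * divisor
    # Assumption: at least one repeated unit is always kept.
    repeats = max(round(base_repeats * depth), 1)
    return channels, repeats
```

Smaller multipliers yield fewer parameters and faster inference, at the expense of feature extraction capacity.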

The trained models have been applied to the individual frames of the test videos sequentially to find the bounding boxes of the Nephrops objects. The bounding box information is input to the tracking algorithm before processing the next frame.
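The frame-by-frame flow described above can be sketched as follows. This is only an outline under stated assumptions: `detect` and `update_tracks` are hypothetical callables standing in for the trained YOLO model and the tracker, and the box format `(x1, y1, x2, y2)` is assumed.

```python
from typing import Callable, Iterable, List, Tuple

Box = Tuple[float, float, float, float]   # assumed format: (x1, y1, x2, y2)
Track = Tuple[int, Box]                   # (track id, box)


def run_pipeline(
    frames: Iterable[object],
    detect: Callable[[object], List[Box]],
    update_tracks: Callable[[List[Box]], List[Track]],
) -> List[List[Track]]:
    """Detect in each frame, then update the tracker before the next frame."""
    per_frame_tracks: List[List[Track]] = []
    for frame in frames:
        boxes = detect(frame)            # detections for this frame only
        tracks = update_tracks(boxes)    # tracker receives boxes in frame order
        per_frame_tracks.append(tracks)
    return per_frame_tracks
```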

Tracking and counting of the Nephrops

The detection of Nephrops individuals alone is not sufficient to determine catch rates (counts) in the trawl. Therefore, these detections should be tracked throughout the video, and the tracks satisfying certain conditions should be considered as catch items. It was shown earlier that checking the tracks generated by the Simple Online and Real-time Tracking (SORT) algorithm against three different cases related to the bounding box coordinates achieves a promising counting performance26. Therefore, the same strategy has been followed in this study, and it is summarized here for completeness.

The Nephrops tracks were generated using SORT, a computationally light algorithm for tracking objects in 2D that uses a Kalman filter to predict the states of the tracks for the next frame32,33. However, not all the tracks correspond to true Nephrops catches, because the SORT algorithm may lose track of a Nephrops in the video, or a Nephrops may swim in the direction opposite to trawling after floating for a while in the field of view of the camera. To determine the tracks corresponding to true Nephrops catches, a horizontal level is introduced at the top 4/5 of the frame height, where the position of the bounding boxes relative to the horizontal level determines whether the individual is recorded or not (Fig. 1). In particular, a track is considered a true Nephrops catch when one of the following conditions is satisfied:

- i. When the bottom of the bounding box crosses the horizontal level.

- ii. When the center of the bounding box crosses the horizontal level.

- iii. When the height of the bounding box is greater than 2/3 of the frame height.

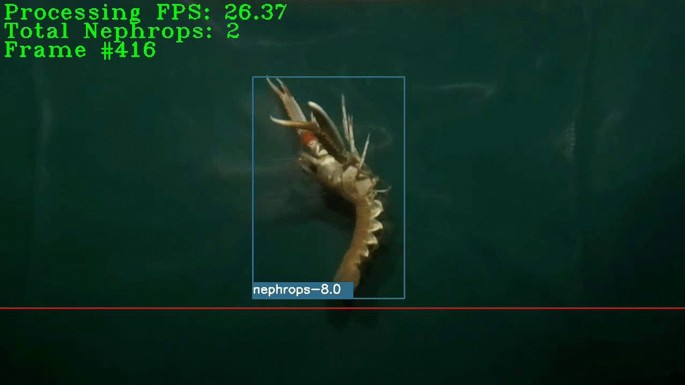

A sample processed frame showing the horizontal level (red line), bounding box and some information about the status of the processing.
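The three counting conditions can be expressed as a short check on the box history of a single track. The sketch below rests on several assumptions that are not stated in the text: boxes are `(x1, y1, x2, y2)` in pixels with `y` increasing downward, the horizontal level lies at 0.8 times the frame height measured from the top, and a "crossing" means that a point lies on different sides of the level in two consecutive observations, regardless of direction. The function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed: (x1, y1, x2, y2), y grows downward


def crosses(prev_y: float, curr_y: float, level_y: float) -> bool:
    """True if a point lies on different sides of the level in two observations."""
    return (prev_y < level_y) != (curr_y < level_y)


def is_catch(track: List[Box], frame_h: float = 720.0) -> bool:
    """Apply conditions i to iii to the box history of one track."""
    level_y = 0.8 * frame_h  # assumed position of the horizontal level
    for i, (_, y1, _, y2) in enumerate(track):
        # Condition iii: box height exceeds 2/3 of the frame height.
        if (y2 - y1) > (2.0 / 3.0) * frame_h:
            return True
        if i == 0:
            continue  # a crossing needs a previous observation
        _, py1, _, py2 = track[i - 1]
        # Condition i: the bottom edge crosses the level.
        if crosses(py2, y2, level_y):
            return True
        # Condition ii: the box center crosses the level.
        if crosses((py1 + py2) / 2.0, (y1 + y2) / 2.0, level_y):
            return True
    return False
```

Each track would be counted at most once, the first time any of the conditions holds.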

Details of the training and test time settings

Efficient training of the model is essential for accurate detection of the Nephrops individuals. This affects the overall assessment of the system performance during testing, which involves tracking and counting of the Nephrops in the videos. Therefore, numerous settings have been tested to understand how the counting performance and the processing speed change as functions of these settings.

As mentioned earlier, the Nephrops detection step has been tested with YOLOv7 and YOLOv8 models separately. In addition to regular YOLOv7, its lightweight version YOLOv7-Tiny has been used for detection. As for YOLOv8, five variants with different scales and computational loads have been used. As a result, a total of seven models are involved in the detection step. It is possible to train these models using different optimizers, batch sizes, and image dimensions, each of which influences the training performance and consequently the weights of the output model. Stochastic gradient descent (SGD)34 and Adam35 are the two optimizer functions considered. Batches of 32 or 64 images were randomly generated for the model training by resizing the input images to dimensions 256, 416, or 640 pixels. These options allowed for obtaining 12 different combinations for training settings and all seven models were trained with each of these combinations, which amounts to training of 84 different models. The training operation continued for 200 epochs and the weights achieving the highest mean average precision were used during the test phase. For the remaining model parameters, default settings and values provided in the related repositories are used31,36.
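The count of 84 trained models follows directly from the Cartesian product of the settings. A brief sketch is given below; the model label strings are placeholders chosen for illustration, not identifiers from the repositories.

```python
from itertools import product

# Placeholder labels for the seven detectors named in the text.
MODELS = ["YOLOv7", "YOLOv7-Tiny", "YOLOv8-n", "YOLOv8-s",
          "YOLOv8-m", "YOLOv8-l", "YOLOv8-xl"]
OPTIMIZERS = ["SGD", "Adam"]
BATCH_SIZES = [32, 64]
IMAGE_SIZES = [256, 416, 640]  # pixels

# 2 optimizers x 2 batch sizes x 3 image sizes = 12 training settings.
settings = list(product(OPTIMIZERS, BATCH_SIZES, IMAGE_SIZES))

# Every model is trained with every setting: 7 x 12 = 84 runs.
runs = [(model, *setting) for model in MODELS for setting in settings]

print(len(settings), len(runs))
```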

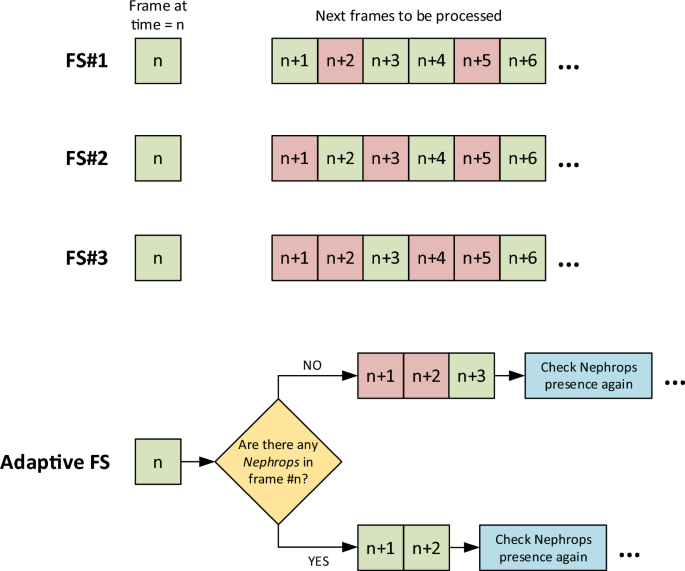

During the testing of the models, the videos listed in Table 1 were processed, and the counting performance was assessed together with the frame processing speed. The videos were processed frame by frame (i.e., with a batch size of 1 frame). Bounding boxes in the frames were predicted with a confidence threshold of 0.5. Since the frame processing speed is critical for this study, improvements in processing speed have been prioritized at the cost of sacrificing some correct counts. For this purpose, four different frame skipping approaches have been implemented, and their effects on the Nephrops counting performance and the average processing speed have been evaluated. Three of the frame skipping approaches skip some intermediate frames according to predefined settings. The fourth one uses the detection output of the model to determine whether to process or skip the next frames; therefore, it is called adaptive frame skipping. Details of these frame skipping approaches are given below and illustrated in Fig. 2.

- i. Frame skipping #1 (FS#1): Skip every third frame and process the others.

- ii. Frame skipping #2 (FS#2): Skip every second frame and process the others.

- iii. Frame skipping #3 (FS#3): Skip every second and third frames and process the others.

- iv. Adaptive frame skipping: Determine whether to process or skip the next frames according to the content of the current frame. If there are no Nephrops in the current frame, skip the next two frames and process the third one. Else, process the next two frames. Check the presence of Nephrops in every processed frame and implement the same condition until the end of the video.

Illustration of frame skipping approaches. Red and green boxes represent skipped and processed frames, respectively.

In particular, the first three frame skipping approaches are expected to increase the overall processing speed while degrading the counting performance to some degree. The purpose of the adaptive frame skipping is to resolve this issue by processing the frames more often whenever a Nephrops is detected in the video. The aim is thus to achieve higher overall FPS values and a higher counting performance simultaneously.
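The four approaches can be sketched as functions that return the indices of the frames to be processed. This is an illustrative reading of the descriptions, not the implementation used in the study: frame indices are zero-based, the first frame of each group is assumed to be a processed one, and `has_nephrops` is a hypothetical per-frame flag that in practice would come from the detector and would only be known for processed frames.

```python
from typing import List, Sequence


def fixed_schedule(n_frames: int, mode: str) -> List[int]:
    """Indices of processed frames for the three predefined approaches."""
    if mode == "FS#1":   # skip every third frame
        return [i for i in range(n_frames) if i % 3 != 2]
    if mode == "FS#2":   # skip every second frame
        return [i for i in range(n_frames) if i % 2 == 0]
    if mode == "FS#3":   # skip every second and third frame
        return [i for i in range(n_frames) if i % 3 == 0]
    raise ValueError(f"unknown mode: {mode}")


def adaptive_schedule(has_nephrops: Sequence[bool]) -> List[int]:
    """Indices of processed frames for adaptive frame skipping.

    Only the flags of processed frames are read, mirroring the fact that
    detections exist only for frames that were actually processed.
    """
    processed: List[int] = []
    i = 0
    while i < len(has_nephrops):
        processed.append(i)
        # No Nephrops: skip the next two frames. Otherwise: go to the next frame.
        i += 1 if has_nephrops[i] else 3
    return processed
```

With these assumptions, the adaptive approach behaves like FS#3 on empty stretches of video and like full processing while a Nephrops is in view.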

In addition to the counting performance and the processing speed, it is also important to consider the power consumption of the edge device, because such hardware may be required to run in remote locations with limited power resources. In the case of Nephrops fisheries, the next design stage may be to process the videos underwater without streaming them to an onboard station. This implies powering the edge device with an external battery that should last at least until the end of the haul (typical haul durations in the Nephrops fisheries range from 4 to 6 h). Therefore, optimizing the power consumption is necessary for effective utilization of the power resources and for determining the battery requirements. In order to understand how a lowered power consumption of the edge device affects the frame processing speed, the same experiments have been repeated after introducing a restriction on the power consumption of the edge device. For this purpose, the edge device was first used in max power mode, in which it may consume up to 60 W37. Next, an upper limit of 50 W was introduced for the allowed power consumption, and the changes in the frame processing speed were observed. Note that such a limitation does not affect the counting performance.
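As a rough illustration of the battery requirement, an upper bound on the energy drawn by the edge device is the power limit multiplied by the haul duration. The sketch below assumes the device draws its full power limit for the whole haul and ignores the camera, lighting, and conversion losses, so it is a back-of-the-envelope bound rather than a measured figure. The function name is hypothetical.

```python
def worst_case_energy_wh(power_limit_w: float, haul_hours: float) -> float:
    """Upper bound on energy use in watt-hours at a constant power limit."""
    return power_limit_w * haul_hours


# The two power limits and the haul durations quoted in the text.
for limit_w in (60.0, 50.0):
    for hours in (4.0, 6.0):
        print(f"{limit_w:.0f} W for {hours:.0f} h -> "
              f"{worst_case_energy_wh(limit_w, hours):.0f} Wh")
```

Under these assumptions, a 6 h haul needs at most 360 Wh at the 60 W limit and at most 300 Wh at the 50 W limit.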

In summary, seven different models are included in this study, where each model has been trained with various combinations of optimizers, input image sizes, and batch sizes. During testing, four different frame skipping ideas were implemented together with the case where all frames were processed (i.e. no frame skipping). In addition, the same experiments were performed after changing the power mode of the edge device.

Specifications of the coding environments

For training of the models, a Tesla A100 GPU with 40 GB RAM, available at the high performance computing clusters of the Technical University of Denmark, was used together with cuDNN v8.2.0.53 and CUDA v11.338. The training codes were written in Python v3.9.11 using the PyTorch framework v1.12.1 and torchvision v0.13.1.

All the trained model files were transferred to an NVIDIA Jetson AGX Orin developer kit, the state-of-the-art edge device used for performing the experiments in this study. This is a single-board computer optimized for deep learning applications, containing 64 GB of memory, a 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores operating at 1.3 GHz, and a 12-core Arm Cortex CPU with a maximum frequency of 2.2 GHz39. On the edge device, all the codes were written in Python v3.8.10 using PyTorch v1.14.0 and torchvision v0.15.0. GPU support was provided through cuDNN v8.6.0 and CUDA v11.4.19.

Performance evaluation metrics

The performance metrics considered in the experiments fall into two groups: counting performance and processing speed. For evaluating the counting performance, each Nephrops track counted by the algorithm is labelled as either a true positive (TP) track or a false positive (FP) track after comparison with the ground truth (GT) tracks. Furthermore, those Nephrops that are visible in the video but not counted by the algorithm are labelled as false negative (FN) tracks. The number of true positive tracks is important for assessing the rate of correctly counted Nephrops. Therefore, the first counting performance metric, namely the correct count rate, is defined as

$$Correct\, Count \,Rate=100\times \frac{TP}{GT}.$$

False positive and false negative tracks are two important types of counts that influence the overall performance of a method. However, these numbers are not used when calculating the correct count rate. Therefore, the F-score that considers true and false counts together is calculated as the second metric using the formula below.

$$F{\text{-}}score=\frac{TP}{TP+0.5\times \left(FP+FN\right)}.$$
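The two counting metrics translate directly into code. The sketch below uses hypothetical function names, and the counts in the test (GT = 20, TP = 18, FP = 3, FN = 2) are invented purely to illustrate the arithmetic.

```python
def correct_count_rate(tp: int, gt: int) -> float:
    """Percentage of ground truth tracks that were counted correctly."""
    if gt <= 0:
        raise ValueError("gt must be positive")
    return 100.0 * tp / gt


def f_score(tp: int, fp: int, fn: int) -> float:
    """F-score combining true, false positive, and false negative tracks."""
    denominator = tp + 0.5 * (fp + fn)
    if denominator == 0:
        raise ValueError("at least one of tp, fp, fn must be non-zero")
    return tp / denominator
```

Unlike the correct count rate, the F-score drops when the method over-counts, since false positives enter its denominator.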

As for the processing speed evaluation, the time taken to process each individual frame is recorded, and its reciprocal is calculated as the processing speed in frames per second (FPS). The total processing time for an individual frame includes the durations of both detection and tracking. Minimum, maximum, and mean FPS values over all the frames in a test video are reported to determine the suitability of the method for real-time applications. In the case of frame skipping, the FPS value of an individual frame is multiplied by a factor equal to one more than the number of skipped frames, and the result is recorded as the FPS value for that frame. For instance, if it takes 50 ms for a frame to be processed with FS#2, the corresponding FPS value for that frame is 40. This value is obtained by multiplying 20, the original FPS value, by 2, because one frame is skipped for each processed frame.
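The FPS adjustment for frame skipping can be written as a one-line function. The name `effective_fps` is hypothetical; the rule itself is the one described above.

```python
def effective_fps(processing_time_s: float, skipped_frames: int = 0) -> float:
    """Per-frame FPS, scaled by (number of skipped frames + 1)."""
    if processing_time_s <= 0:
        raise ValueError("processing_time_s must be positive")
    raw_fps = 1.0 / processing_time_s       # reciprocal of the processing time
    return raw_fps * (skipped_frames + 1)   # credit for the skipped frames


# Example from the text: 50 ms per frame with FS#2 (one skipped frame).
print(effective_fps(0.050, skipped_frames=1))
```

Since the videos are recorded at 60 FPS, an effective value of at least 60 indicates that processing keeps pace with the camera.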

Intermediate metrics, such as the detection performance of the YOLO models on the test set and the tracking performance of the SORT algorithm, have not been considered in this study because they do not directly reflect the counting performance. In addition, these details are already provided in an earlier study in which other versions of YOLO were used26.
