It’s impossible to navigate the internet today in any capacity without encountering bots. In recent months, though (particularly on X), a new phenomenon has become observable: bot-generated posts that neglect to link the video they refer to, are written in obviously generated sentences or use artificially generated images of things that don’t exist, and yet go viral. The replies to these posts nearly all riff on two or three general ideas, sometimes using the same words or phrases (or the exact same response). You might also see a deluge of generated replies to posts (viral and nonviral alike) advertising adult content on the user’s account, most of which are obvious scams. Bots clog the replies on sites like Reddit and YouTube, while artificially generated images clog Facebook. What explains this influx of bot-generated replies and, more novel still, bot-generated viral content, and what does it mean for user experience on social media?

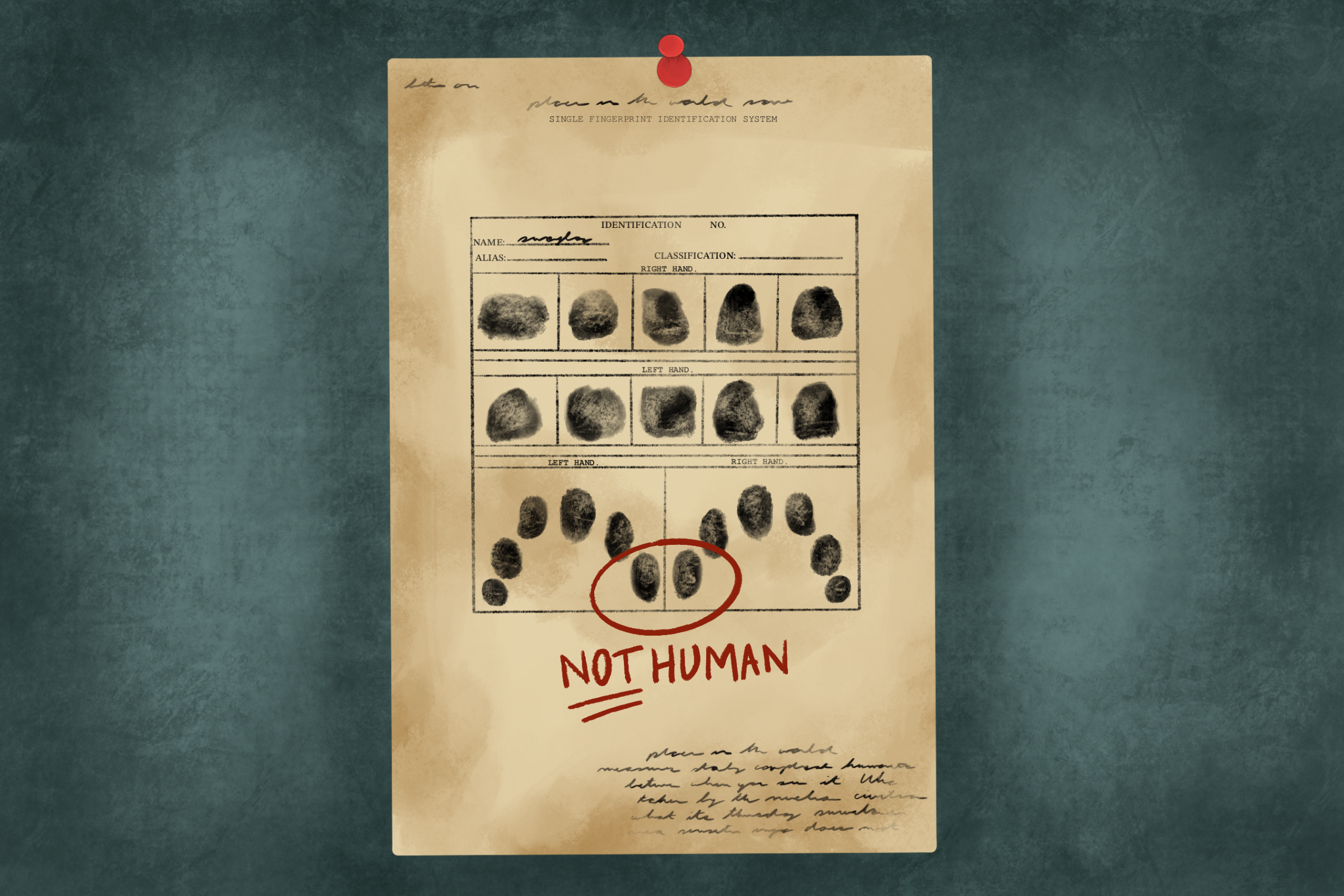

The Dead Internet Theory emerged in recent years on internet chat forums, as users took note of the sudden uptick in bot activity online. The theory proposes that social media is sustained mostly by interactions between bot accounts, driven by algorithms. A semi-awareness of the ridiculous scale of the theory has always accompanied any reference to it, but the broader idea of bot-produced and bot-sustained viral content created for general consumption is, given the current scale of obvious bot activity, less and less dismissible. In navigating social media, we are inundated with advertisements that use the language of whatever seems algorithmically profitable, contained within the norms of the site’s discourse. Farming engagement through artificial virality, mindless content and false emergencies alike enables a profit off of advertisements and views.

Further compounding the unreality of this landscape is the emergence of technologies like ChatGPT. LLMs (“large language models,” which are essentially predict-the-next-token-style generators that can be trained on human writing styles) and image generators like BingAI have made the creation of bots accessible to anyone. These bots can be trained on the “style” of virality, predicting what wording and even how many words are likely to spread most efficiently. Images created in the interest of gathering attention, from explosions at the Pentagon to imaginary fashion, often posted without disclosure of artificial-intelligence generation, spread like wildfire. And this technology only gets better and more seamless; while AI-generated video footage and images drew scoffs a year ago, they can now reliably fool even younger generations who grew up on the internet. From the dramatic and incendiary to the everyday, being surrounded by layers of unreality (images, replies, posters and events) creates a sense of displacement or even placelessness, accentuated by the immaterial nature of the internet.

It’s also worth noting that this generated content — textual, visual, whatever form it may take — relies on gaming the algorithms of various platforms. Generated content that goes viral on X looks different from that of Facebook, and even within the platforms, content that seeks different audiences will vary widely. Concern with the nature of the positive feedback loop promoted by internet algorithms is most famous in the oft-discussed “alt-right pipeline,” a phenomenon in which the algorithm of a social media site shows users more extreme (and thus attention-grabbing) versions of a type of (here ideologically loaded) content which they have consumed previously.

Taking advantage of algorithmic function means that generated viral content will both echo the anxieties or desires of the broader culture and cater to the niches individual bots are designed for, playing on the perceived levers of its audience. Without any journalistic integrity, whatever generates traffic, attention and revenue from views and advertising is prioritized. Returning to the falsified images of the explosions at the Pentagon, these ideas are not random; visually impressive threats with instant consequences to high levels of government are much sexier than a similarly devastating but less spectacular event. Contrast explosions at the Pentagon with a large oil spill in a freshwater body like one of the Great Lakes. Even when not falsifying such a level of catastrophe, generated posts are often designed to farm significant engagement in a short amount of time, over and over again; it doesn’t matter if the information can be quickly disproved as long as it reaches the largest number of people possible. The superfluity of these images is beginning to cause fatigue when navigating this kind of content; serious fabrications make other generated posts less offensive by comparison. If it’s not at the level of a direct threat, it’s not interesting. Amid all the engagement baiting, artifice becomes expected.

The internet and social media, much like language, were developed to bring people together and make the world smaller. But gamifying engagement to generate ad revenue has created a haven for bot-created images, videos and brief messages where generation is less obvious. Navigating the internet with the understanding that much of what you see could be fake obscures the connections social media was meant to create. The core of the Dead Internet Theory, only some years ago still a semi-ironic observation, is borne out by the endless record of bots interacting with bots. What was meant to be a conduit for information and interaction is now a reflection of the confusion of virality, the motivation of profit and the existing desires and anxieties played on by engagement-seeking generators. It’s hollow, disingenuous and disorienting, and it is shaping the way an entire generation interacts with media and the internet: either with unawareness and confusion or with suspicion and disinterest, muddying the waters of a huge, fast-moving river that is easy to slip into entirely.

Daily Arts Contributor Nat Johnson can be reached at [email protected].
