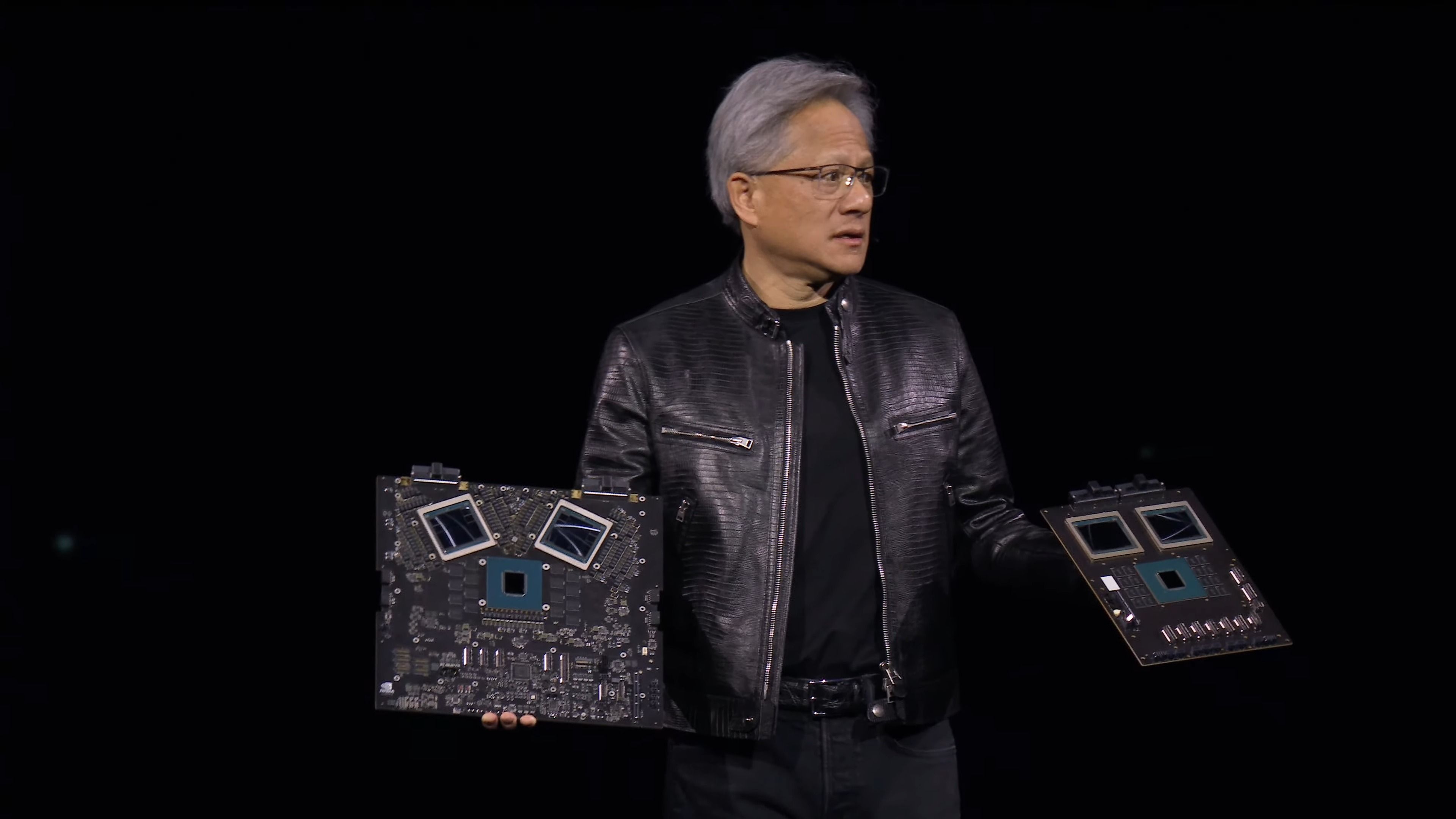

Jensen Huang, CEO, Nvidia | Image credit: Nvidia

Nvidia’s Blackwell: On March 18, 2024, Nvidia CEO Jensen Huang used his GTC 2024 keynote to announce the Blackwell platform. According to the chipmaking giant, Blackwell enables organisations around the world to build and run real-time generative AI on trillion-parameter large language models at up to 25 times lower cost and energy consumption than its predecessor.


The Blackwell GPU architecture introduces six innovative technologies for accelerated computing that are set to drive breakthroughs in data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing, and generative AI, all of which Nvidia sees as emerging industry opportunities. In this report, we take a closer look at what Nvidia’s brand-new GPU brings to the table and how it could shape the AI landscape in the coming years.

What is Nvidia’s Blackwell?

Blackwell is Nvidia’s latest graphics processing unit (GPU) microarchitecture, succeeding the Hopper and Ada Lovelace designs. It is named in honour of the American statistician and mathematician David Blackwell. Nvidia first revealed the B100 and B40 models of the Blackwell series on its product roadmap during an investor presentation in October 2023.


The official announcement of the Blackwell architecture came at the Nvidia GTC 2024 keynote on March 18, 2024. At the launch event, Jensen Huang described Blackwell as “a processor tailored for the generative AI era,” underlining the role it could play in an AI industry that is in dire need of more efficient GPUs.

“There is currently nothing better than NVIDIA hardware for AI.” – Elon Musk

Blackwell’s architecture is designed to serve data centre computing, gaming, and workstation needs, featuring dedicated dies for each purpose. The GB100 die powers Blackwell data centre products, while the GB20x series of dies (such as GB202) is earmarked for the upcoming GeForce RTX 50 series graphics cards.

Here are the major architectural advancements that Nvidia’s Blackwell GPUs launch with. Nvidia expects these updates to take AI computing to a new level, and they could bring the field closer than ever to artificial general intelligence.

Processing powerhouse

Blackwell-architecture GPUs pack 208 billion transistors, split across two dies. Transistors are the basic building blocks of electronic circuits, and this sheer number underlines the chip’s processing power. In general, more transistors let designers fit more compute units and on-chip memory onto a GPU, allowing it to carry out more complex computations in parallel, with greater speed and efficiency.


These GPUs are manufactured on a custom-built TSMC 4NP process, placing them among the most advanced GPUs available. TSMC, short for Taiwan Semiconductor Manufacturing Company, is known for its expertise in semiconductor fabrication.

The GPUs also incorporate a 10 TB/s chip-to-chip link that connects the two GPU dies, letting them exchange data fast enough to function as a single, unified GPU.

Second-generation transformer engine

The second-generation Transformer Engine is powered by new micro-tensor scaling support and Nvidia’s advanced dynamic range management algorithms, which are integrated into the TensorRT-LLM and NeMo Megatron frameworks.


Together, these updates let Blackwell support double the compute and model sizes, and introduce new 4-bit floating point (FP4) AI inference capabilities that boost overall AI performance.

This means AI developers can work with larger and more complex models: a 4-bit value takes up a quarter of the memory of a 16-bit one, so far more parameters fit into the same memory and bandwidth budget.
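To make the idea of micro-tensor scaling more concrete, here is a minimal sketch of block-scaled 4-bit floating point quantization. Each small block of values shares one scale factor, and every value is rounded to the nearest number a 4-bit E2M1 float (2 exponent bits, 1 mantissa bit) can represent. The E2M1 value grid is standard for that format, but the block size, the round-to-nearest rule and the helper names (`quantize_block`, `quantize`, `dequantize`) are assumptions made for illustration. This is not Nvidia’s implementation.

```python
# Minimal sketch of block-scaled FP4 (E2M1) quantization, for illustration only.
# Assumptions (not Nvidia's implementation): one float scale per block,
# round-to-nearest, and a hypothetical default block size of 16.

# Non-negative magnitudes an E2M1 4-bit float can represent (sign stored separately).
FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_GRID[-1]
BLOCK_SIZE = 16  # hypothetical; chosen only for this example


def quantize_block(block):
    """Return (scale, codes): a shared scale plus FP4 grid values for one block."""
    max_abs = max(abs(x) for x in block)
    # Map the block's largest magnitude onto the largest FP4 value.
    scale = max_abs / FP4_MAX if max_abs > 0 else 1.0
    codes = []
    for x in block:
        magnitude = abs(x) / scale
        # Round to the nearest representable FP4 magnitude.
        nearest = min(FP4_E2M1_GRID, key=lambda g: abs(g - magnitude))
        codes.append(nearest if x >= 0 else -nearest)
    return scale, codes


def quantize(values, block_size=BLOCK_SIZE):
    """Split values into blocks and quantize each block independently."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize(blocks):
    """Reconstruct approximate values by multiplying each code by its block scale."""
    restored = []
    for scale, codes in blocks:
        restored.extend(scale * c for c in codes)
    return restored


if __name__ == "__main__":
    weights = [0.02, -0.4, 1.3, 0.75, -2.9, 0.0, 0.11, 3.0]
    blocks = quantize(weights, block_size=4)
    print(dequantize(blocks))
```

For example, quantizing `[0.02, -0.4, 1.3, 0.75]` as one block gives a scale of 1.3 / 6, and the small value 0.02 rounds to zero. This illustrates the precision FP4 gives up in exchange for smaller, faster models, and why per-block scaling matters: a block containing only small values gets its own, smaller scale instead of being crushed by a large value elsewhere in the tensor.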

Fifth-generation NVLink

The fifth-generation NVLink delivers 1.8 TB/s of bidirectional throughput per GPU. This high-speed interconnect is key to accelerating multitrillion-parameter AI models, as it enables seamless data exchange among up to 576 GPUs.

Interactivity per user tokens per second | Image credit: Nvidia

Complex language and multimodal models require massive amounts of data and compute, so they are typically split across many GPUs that must constantly exchange data. Fast interconnects therefore directly shorten both training and inference.

By reducing this communication overhead, the improved NVLink speeds up model training and makes it practical to build larger, more sophisticated AI models that can tackle real-world challenges effectively.

RAS engine

Blackwell-powered GPUs feature a dedicated engine for reliability, availability, and serviceability (RAS). The architecture also integrates AI-based preventative maintenance capabilities at the chip level, enabling diagnostics and reliability forecasting. These features maximise system uptime, improve resiliency, and reduce operating costs, which is vital for large-scale AI deployments.


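Secure AI

Blackwell also brings advanced confidential computing capabilities, including support for new native interface encryption protocols, to protect AI models and customer data. This is particularly important for industries like healthcare and financial services, where data protection is paramount.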

Decompression engine

Blackwell’s dedicated decompression engine supports the latest data formats to accelerate database queries. This boosts performance in data analytics and data science, in line with the growing trend of GPU-accelerated data processing, which promises significant efficiency gains and cost savings for companies.

Wanda Parisien is a computing expert who navigates the vast landscape of hardware and software. With a focus on computer technology, software development, and industry trends, Wanda delivers informative content, tutorials, and analyses to keep readers updated on the latest in the world of computing.