Two days before the New Hampshire primary, Gail Huntley was one of thousands of people who received what sounded like a call from President Joe Biden telling them not to vote.

“It’s important that you save your vote for the November election,” said the call. “Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again.”

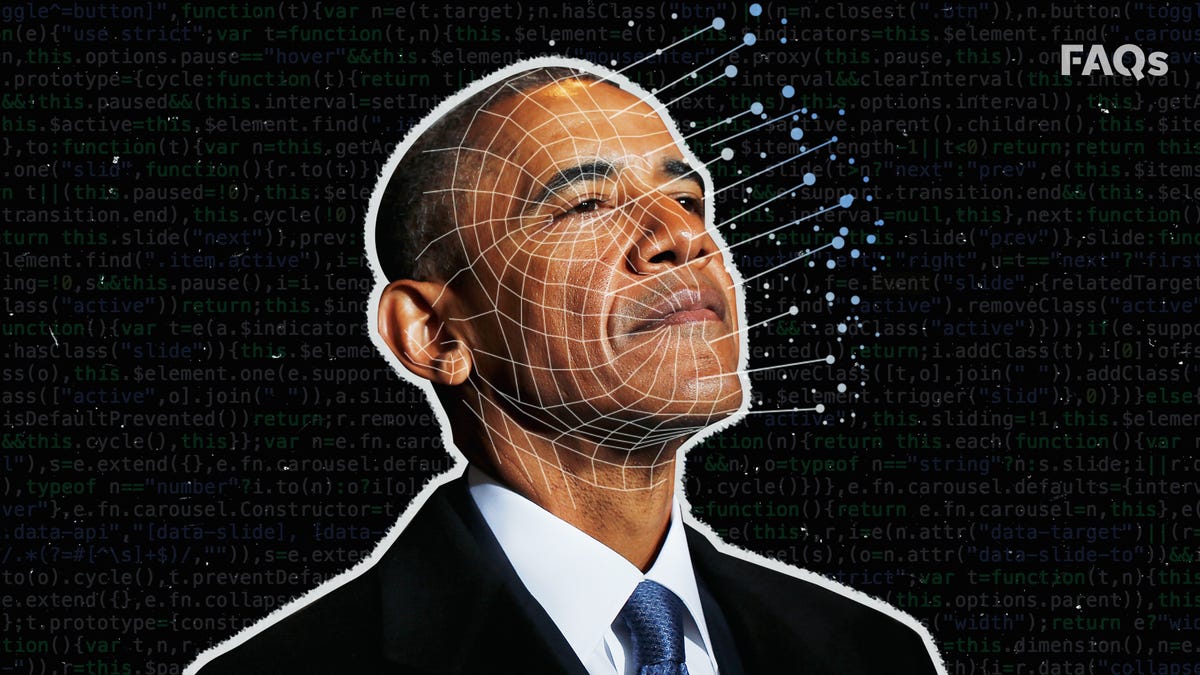

But the call wasn’t from Biden. It was an AI-generated deepfake, created by Texas-based Life Corporation, that mimicked the president’s voice.

“I was just hoping that folks getting that call would know to disregard that message and get out to vote,” said Huntley.


Following the New Hampshire election interference, the Federal Communications Commission made robocalls that use AI-generated voices illegal.

“Bad actors are using AI-generated voices in unsolicited robocalls to extort vulnerable family members, imitate celebrities, and misinform voters. We’re putting the fraudsters behind these robocalls on notice,” said FCC Chairwoman Jessica Rosenworcel. “State Attorneys General will now have new tools to crack down on these scams and ensure the public is protected from fraud and misinformation.”

What is a deepfake?

First, let’s define the term: the AI-generated deepfakes in question are videos, images, and audio that digitally manipulate the appearance, voice, or actions of political candidates and election officials. The concern is whether political advertising, intentionally or inadvertently, misleads voters about when, where, and how to vote.

What is being done at the federal level?

To date, there are no federal laws against deepfakes.

Congressional action on AI-generated content lags significantly, but the White House Artificial Intelligence Council met in January, three months after Biden signed an executive order aimed at reducing the risks AI poses to national security and consumer rights.

House Speaker Mike Johnson of Louisiana and House Minority Leader Hakeem Jeffries of New York recently announced a joint task force of a dozen Democrats and a dozen Republicans who will work together on how to regulate the use of artificial intelligence, especially in politics.

Unfortunately, the task force will focus on future political campaigns, not 2024.

“There is going to be a tsunami of disinformation in 2024. We are already seeing it, and it is going to get much worse,” said Darrell West, a senior fellow at the Center for Technology Innovation at the Brookings Institution, in early February. “People are anticipating that this will be a close election, and anything that shifts 50,000 votes in three or four states could be decisive.”

What are states doing to limit AI deepfakes?

Five states already have laws to restrict AI in political communications: Minnesota, Michigan, California, Washington, and Texas. But what about the rest of the nation?

Since January, more than 30 states have introduced over 50 bills to regulate deepfakes in elections, focusing on disclosure requirements and bans, according to Public Citizen. Whether the bills can neutralize deepfakes in this year’s election cycle remains to be seen.

Highlights of state legislation include:

Minnesota

The North Star State passed a bipartisan bill in 2023, with near-unanimous support, that criminalizes multiple forms of deepfakes. Among other things, the law makes it a crime to disseminate deepfake imagery without consent in order to influence an election within 90 days of Election Day. Penalties range from fines of thousands of dollars to up to five years in prison.

Colorado

In Colorado, lawmakers introduced the Candidate Election Deepfake Disclosures bill, which, if passed, would require a disclosure, similar to those on political advertisements, on any AI-generated deepfake communication related to a candidate for elective office. Candidates could also sue deepfake creators for actual and punitive damages.

New Hampshire

Similarly, a bill introduced in New Hampshire would require disclosure of AI use in political advertising. The bill would prohibit deepfakes or other deceptive AI content within 90 days of an election unless the use of AI is fully disclosed.

Hawaii

In the Aloha State, proposed legislation would task the Hawaii Campaign Spending Commission with investigating AI-generated deceptive information and imposing fines. Like the Colorado and New Hampshire bills, one of the Hawaii bills would require an AI disclaimer, and it would authorize the commission to impose penalties for violations within 90 days of an election.

California

Among the five most recent bills introduced in the California Legislature in February was the California AI Accountability Act, from state Sen. Bill Dodd, whose district lies north of San Francisco. The bill would require state agencies to notify users when they are interacting with AI.

Assemblymember Gail Pellerin, who represents southwest San Jose, introduced legislation to ban “materially deceptive” political deepfakes during the four months before Election Day and the two months after.

Nebraska

The Nebraska Legislature is considering two bills that would ban the dissemination of AI-generated deepfakes within 60 days of an election and explicitly prohibit deepfakes intended to mislead voters by misrepresenting the secretary of state or election commissioners.

Virginia

Virginia’s legislature is working with Gov. Glenn Youngkin to tackle the challenges of artificial intelligence. Recommendations include creating a task force to assess the impact of deepfakes and misinformation, as well as their implications for data privacy.

Other AI-focused legislation includes a bill that regulates how developers use the technology, a bill that requires an impact assessment before a public body uses it, and a bill that forbids the creation and use of deepfakes.

How much AI will voters see in the 2024 election, and what can they do?

Craig Holman, a Capitol Hill lobbyist who works on government ethics for the nonprofit Public Citizen, believes 2024 will be the first deepfake election cycle, one in which AI influences voters and affects election results.

“Artificial intelligence has been around for a while, but only in this election cycle have we seen it advance to the point where most people cannot tell the difference between a deepfake and reality. It’s sort of breathtaking how good the AI has become,” Holman said.

Public Citizen didn’t always focus on election deepfakes. This is the first year the nonprofit is tackling the issue head-on. Holman said what changed for him was seeing an ad the Republican National Committee released immediately after Biden announced he would seek re-election in 2024.

What he saw shocked him. The ad showed scenes of President Biden and Vice President Harris laughing together in a room, China bombing Taiwan, thousands of people swarming over the border into the United States, and San Francisco locked down due to the fentanyl crisis.

None of it was real. And even though he knew the images were fake, he couldn’t visually tell the difference.


The Biden deepfake in New Hampshire makes clear that Holman’s prediction is already coming true. Is it too late to put the AI genie back in the bottle?

No, says Ashley Casovan, managing director of the International Association of Privacy Professionals’ Artificial Intelligence Governance Center.

“It’s really important to not only understand how [AI technologies are] being used, and then how they’re being used, sometimes maliciously, but then what types of different mitigation measures we need to put in place … we really need hard legislation,” Casovan said.

“While these acts are continuing to happen and the technologies are starting to get more and more pervasive, it’s never going to be too late to put appropriate rules, appropriate training, and other types of safeguards in place.”

With additional reporting by Elizabeth Beyer, Melissa Cruz, Margie Cullen, Sarah Gleason, Maya Marchel Hoff, Kathryn Palmer, Sam Woodward, and Jeremy Yurow

Eugen Boglaru is an AI aficionado covering the fascinating and rapidly advancing field of Artificial Intelligence. From machine learning breakthroughs to ethical considerations, Eugen provides readers with a deep dive into the world of AI, demystifying complex concepts and exploring the transformative impact of intelligent technologies.