“Biomedical engineering represents the integration of technology and medicine at the point of care,” said Adam Alkhato, administrative director for biomedical engineering at Stanford Medicine’s Technology and Digital Solutions. “We value developing strong relationships with our medical service lines within Stanford Medicine to explore, advance and deploy the latest health technology. This is a great example of that collaboration.”

A seamless stream on a single screen

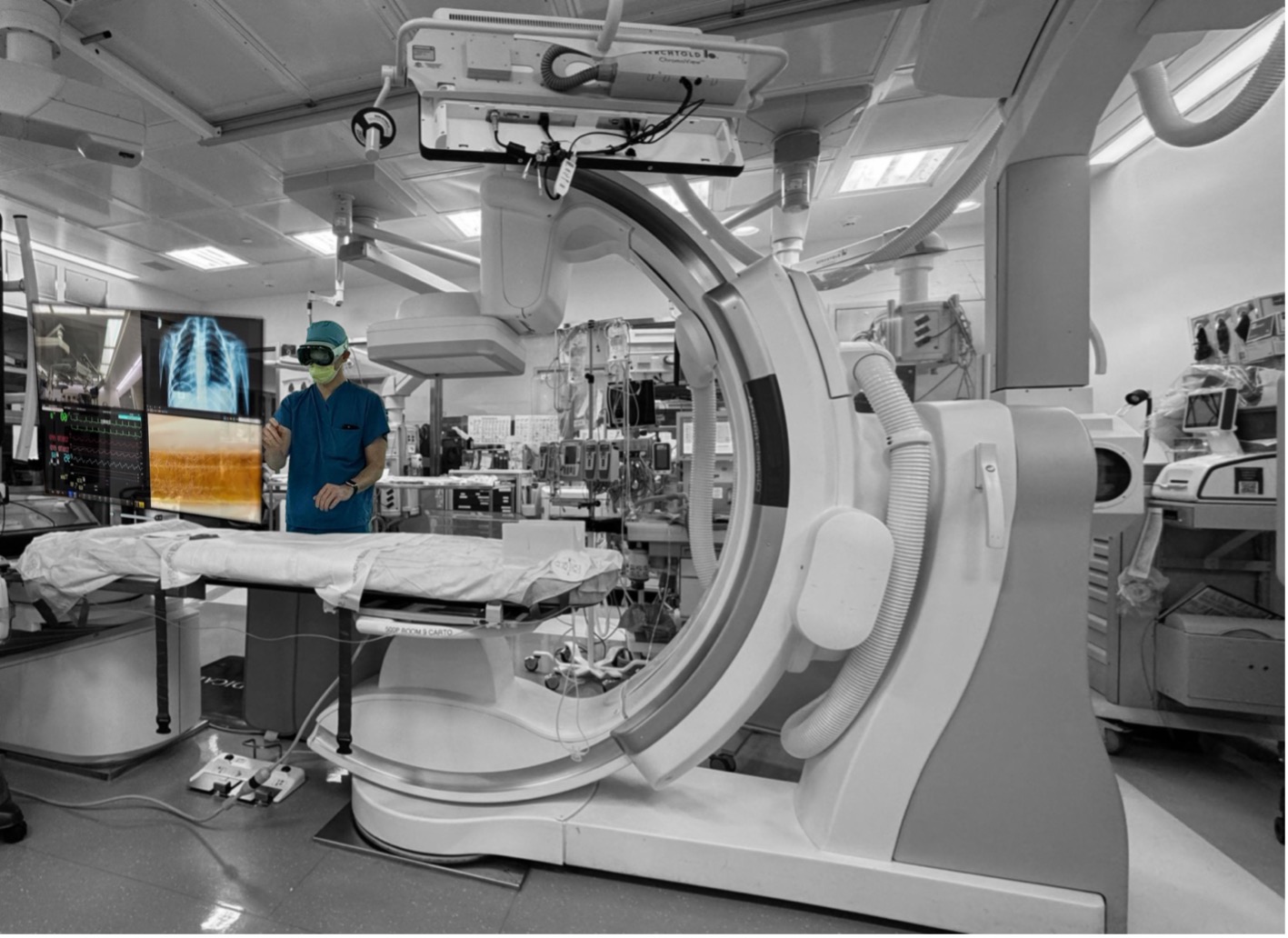

During the procedure, Alexander Perino, MD, a cardiac electrophysiologist at Stanford Health Care, performed an ablation, a common procedure that treats heart arrhythmias, which cause rapid or irregular heart rates. During an ablation, Perino typically looks at a variety of monitors, including one that shows a real-time, anatomically accurate representation of the patient’s heart and the equipment he uses to treat the areas of the heart causing arrhythmias. Other monitors show critical information needed to perform the procedure, such as ultrasound images, X-rays and the patient’s vital signs.

“There can be up to eight screens that depict distinct real-time data, with insufficient real estate in an operating room for these screens to be conveniently located and the data on them reviewed,” Perino said. “Current systems do not allow for surgeons and proceduralists to interact with the data directly, requiring staff members to assist with data manipulation and processing, which can be inefficient.”

With the spatial computing headset, which receives secure, real-time data from a workstation and displays it, Perino can manipulate the virtual monitors quickly and without help. These monitors contain all the data needed to perform the procedure. What’s more, while wearing the headset, Perino can see the patient and operating room as he usually would.

The headset tracks his eye movements to determine where his attention is focused. With a pinch of his fingers or a quick hand movement, he can zoom in on various data streams or reorganize the screens.

“I can independently move the virtual monitors to a more ergonomic position, then make it twice as big and easier to see,” Perino said.

An augmented future

During the procedure, the surgical team also had conventional monitors displaying what Perino was seeing through the headset. “Right now, we’re not taking away anything that we normally use; we’re just adding a new layer. Our current goal is to experience the output of the new technology and ensure that it’s performing as well as, if not better than, the setup we currently have.”

Whether and how the technology will be harnessed in surgical suites more broadly is yet to be determined. But Perino and his colleagues are intrigued by this first successful use, and they are already considering other applications for the spatial computing system, including education and training, as well as more sophisticated uses in the operating room.

“There’s a lot to learn,” Perino said. “For now, we hope that this first demonstration will help establish the tool as something surgeons and proceduralists can use to reduce barriers to quickly and easily review and manipulate intraprocedural data, increasing efficiency and clinician enjoyment. Ultimately, translating these improvements can help our patients.”

Maria Malik is your guide to the immersive world of Virtual Reality (VR). With a passion for VR technology, she explores the latest VR headsets, applications, and experiences, providing readers with in-depth reviews, industry insights, and a glimpse into the future of virtual experiences.