Nvidia is the Taylor Swift of tech companies.

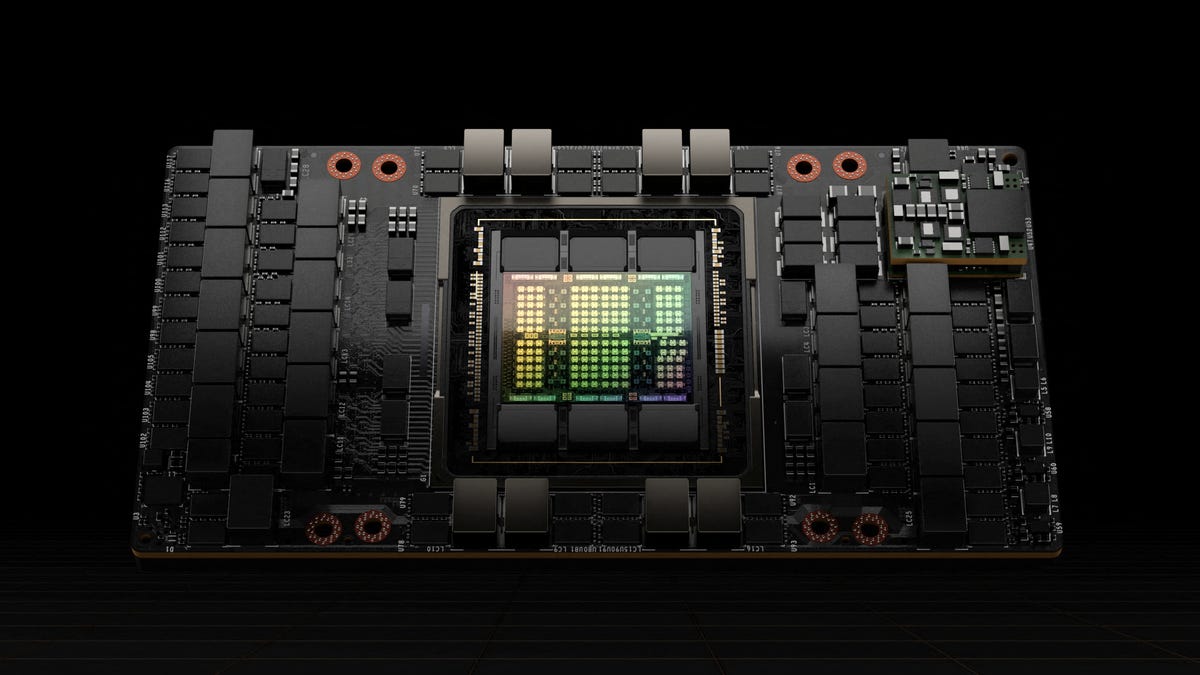

Its AI customers shell out $40,000 for advanced chips and sometimes wait months for the company’s tech, remaining loyal even as competing alternatives debut. That devotion comes partly from Nvidia being the biggest AI chipmaker in town. But there are also major technical reasons that keep users coming back.

For example, it’s not simple to swap out one chip for another: companies build their AI products to the specifications of the chips they run on. Switching to a different option could mean going back and reconfiguring AI models, a time-consuming and expensive pursuit. It’s also not easy to mix and match different types of chips. And it’s not just the hardware: CUDA, Nvidia’s software for programming its graphics processing units (GPUs), works really well, said Ray Wang, CEO of Silicon Valley-based Constellation Research. Wang said that helps strengthen Nvidia’s market dominance.

“It’s not like there’s a lock-in,” he said. “It’s just that nobody’s really spent some time to say, ‘Hey, let’s go build something better.’”

That could be changing. Over the last two weeks, tech companies have started coming for Nvidia’s lunch, with Facebook parent Meta, Google parent Alphabet, and AMD all revealing new or updated chips. Others including Microsoft and Amazon have also made recent announcements about homegrown chip products.

While Nvidia is unlikely to be unseated anytime soon, these efforts and others could threaten the company’s estimated 80% market share by picking at some of the chipmaker’s weaknesses, taking advantage of a changing ecosystem — or both.

Different chips are better at different AI tasks, but switching between them is a headache for developers. It can even be difficult to move between different models of the same chip, Wang said. Building software that plays nicely across a variety of chips creates new opportunities for competitors, with Wang pointing to oneAPI, an Intel-led open programming model for multiple chip types, as an effort already working on just that.

“People are gonna figure out sometimes they need CPUs, sometimes they need GPUs, and sometimes they need TPUs, and you’re gonna get systems that actually walk you through all three of those,” Wang said, referring to central processing units, graphics processing units, and tensor processing units, three kinds of chips used for AI workloads.
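The kind of system Wang describes can be pictured as a thin routing layer that sits between a workload and whatever chips happen to be available. The sketch below is only an illustration of that idea: the `Workload` class, the `pick_device` function, and the preference orderings in `EXAMPLE_PREFERENCES` are all hypothetical, and the orderings are made up rather than drawn from any benchmark.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a job to be placed on a chip."""
    name: str
    kind: str  # e.g. "control", "training", or "inference"


# Made-up preference orderings, for illustration only.
# A real system would derive these from measurements.
EXAMPLE_PREFERENCES = {
    "control": ["cpu"],
    "training": ["gpu", "tpu", "cpu"],
    "inference": ["tpu", "gpu", "cpu"],
}


def pick_device(workload: Workload, available: set[str]) -> str:
    """Return the first preferred device type that is available.

    Falls back to "cpu" when the workload kind is unknown or none of
    the preferred devices are present.
    """
    for device in EXAMPLE_PREFERENCES.get(workload.kind, ["cpu"]):
        if device in available:
            return device
    return "cpu"


chat_job = Workload(name="chatbot-replies", kind="inference")
chosen = pick_device(chat_job, available={"cpu", "gpu"})
```

With only a CPU and a GPU on hand, the chatbot job lands on the GPU. The point is that the application code never names a vendor, which is what would make swapping chips less painful.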

In 2011, venture capitalist Marc Andreessen famously proclaimed that software was eating the world. That still holds true in 2024 when it comes to AI chips, which are increasingly driven by software innovation. The AI chip market is undergoing a shift that echoes one seen in telecommunications, in which business customers moved from relying on multiple hardware components to integrated software solutions, said Jonathan Rosenfeld, who leads the FundamentalAI group at MIT FutureTech.

“If you look at the actual advances in hardware, they’re not from Moore’s Law or anything like that, not even remotely,” said Rosenfeld, who is also co-founder and CTO of the AI healthcare startup somite.ai.

This evolution points toward a future in which software plays a critical role in optimizing across different hardware platforms, reducing dependency on any single provider. Nvidia’s CUDA has been a powerful tool at the single-chip level, but very large models span many GPUs and depend more heavily on the software that coordinates them, and that shift won’t necessarily benefit the company.

“We’re likely going to see consolidation,” Rosenfeld said. “There are many entrants and a lot of money and definitely a lot of optimization that can happen.”

Rosenfeld doesn’t see a future without Nvidia as a major force in training AI models like those behind ChatGPT. Training is how AI models learn to do tasks, while inference is when they use that knowledge to perform actions, such as responding to questions users ask a chatbot. The computing needs for those two steps are distinct, and while Nvidia is well suited to the training part of the equation, the company’s GPUs aren’t as well suited to inference.
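A toy example can make the split between the two steps concrete. The sketch below is not how large AI models are built; it fits a straight line with plain gradient descent, and the function names `train` and `infer` and all the numbers are assumptions chosen for illustration. What carries over is the shape of the work: training loops over the data thousands of times, while inference is a single cheap calculation with the learned values.

```python
def train(xs, ys, epochs=2000, lr=0.05):
    """Fit y = w * x + b by gradient descent on mean squared error.

    This is the expensive step: every epoch touches every data point.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(w, b, x):
    """Answer one query with the learned parameters: a single forward pass."""
    return w * x + b


# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train(xs, ys)             # done once, up front
prediction = infer(w, b, 10.0)   # done for every user request; close to 21
```

Training runs once and can tolerate being slow. Inference runs every time someone asks a question, so its speed and per-query cost matter far more.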

Despite that, inference accounted for an estimated 40% of the company’s data-center revenue over the past year, Nvidia said in its latest earnings report.

“Frankly, they’re better at training,” said Jonathan Ross, the CEO and founder of Groq, an AI chip startup. “You can’t build something that’s better at both.”

Training is where you spend money, and inference is supposed to be where you make money, Ross said. But companies can be surprised when an AI model is put into production and takes more computing power than expected — eating into profits.
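The arithmetic behind that surprise is simple. In the sketch below, every figure is a made-up placeholder rather than real pricing, and the function name `inference_margin` and its parameters are hypothetical. It shows how a model that needs four times the planned compute per query can wipe out most of the profit on each answer.

```python
def inference_margin(revenue_per_query, compute_seconds_per_query, cost_per_compute_second):
    """Profit per query after paying for the compute that served it."""
    return revenue_per_query - compute_seconds_per_query * cost_per_compute_second


# Placeholder numbers for illustration only.
REVENUE_PER_QUERY = 0.010        # dollars earned per answered query
COST_PER_COMPUTE_SECOND = 0.001  # dollars per second of chip time

# What the business plan assumed: 2 seconds of compute per query.
planned = inference_margin(REVENUE_PER_QUERY, 2.0, COST_PER_COMPUTE_SECOND)

# What production turned out to need: 8 seconds per query.
actual = inference_margin(REVENUE_PER_QUERY, 8.0, COST_PER_COMPUTE_SECOND)

# Share of the planned profit that survives in production.
surviving_share = actual / planned
```

Under these assumed numbers, the planned profit of about 0.8 cents per query shrinks to about 0.2 cents, leaving roughly a quarter of the expected margin.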

Plus, GPUs, the main chip Nvidia sells, aren’t particularly speedy at spitting out answers for chatbots. While developers won’t notice a little lag or delay during a month-long training run, people using chatbots want responses as fast as possible.

So Ross, who previously worked on chips at Google, started Groq to build chips called Language Processing Units (LPUs), which are designed specifically for inference. A third-party test from Artificial Analysis found that ChatGPT could run more than 13 times faster if it were running on Groq’s chips.

Ross doesn’t see Nvidia as a competitor, although he jokes that customers often find themselves moved up in the queue to get Nvidia chips after buying from Groq. He sees them more as a colleague in the space — doing the training while Groq does the inference. In fact, Ross said Groq could help Nvidia sell more chips.

“The more people finally start making money on inference,” he said, “the more they’re going to spend on training.”

Eugen Boglaru is an AI aficionado covering the fascinating and rapidly advancing field of Artificial Intelligence. From machine learning breakthroughs to ethical considerations, Eugen provides readers with a deep dive into the world of AI, demystifying complex concepts and exploring the transformative impact of intelligent technologies.