Photo-Illustration: Intelligencer; Photo: Getty Images

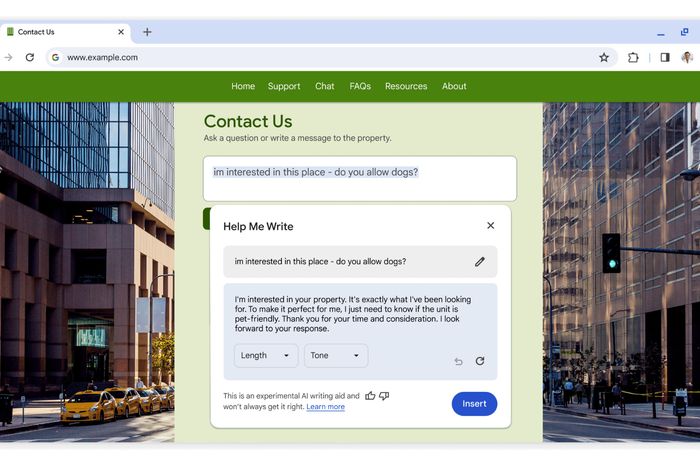

Starting next month, Google will begin rolling out a new experimental feature in Chrome, the most popular browser on Earth and the portal through which an estimated 3 billion people read and contribute to the web: an AI writing assistant. “Writing on the web can be daunting, especially if you want to articulate your thoughts on public spaces or forums,” the company says. Chrome’s new tool will help users “write with more confidence,” whether they want to “leave a well-written review for a restaurant, craft a friendly RSVP for a party, or make a formal inquiry about an apartment rental.”

AI assistants have been popping up in popular software about as fast as developers can code them. Google has been testing generative AI in Gmail, Android messaging, and Docs for months; Microsoft has added similar features to Office, its Edge browser, and Windows itself. This was, in other words, basically inevitable: as interface features, “help me write” buttons are a natural extension of suggested replies and even spellcheck; Google has the technology to build this, and reason to believe at least some Chrome users will be excited to use it. Millions of people already use tools like ChatGPT and Anthropic’s Claude to generate text that they then deploy in basically every imaginable context. Google building something similar into Chrome was only a matter of time: as soon as it could, it would.

Illustration: Screencap

At launch, this will be a right-click feature in limited testing. If it ends up anything like the generative AI tools Google has been building into Docs, it’ll be as easy to ignore as it is to use.

As generative AI rollouts go, though, this is a big one. For the first time, potentially billions of people will be confronted with the option to have software write on their behalf, in virtually every online context: not just emails or documents, but social-media sites, comment sections, forums, product reviews, feedback forms, job applications, and chat platforms. Instead of, or in addition to, people posting something themselves, Google will offer users statistically likely responses, as well as options for making them shorter or longer or adjusting their tone. The web as we know it is, basically, the result of billions of people typing into billions of browser text boxes with the intention of reaching other people, or at least another person. The experiences of searching, reading, shopping, and wandering on the web have depended to varying extents on the presence of text and media that other users have contributed, often for free and under the auspices of participation in human-centered systems: that is, as themselves, or some version of themselves, with other people in mind. What happens when the text boxes can fill themselves?

Features like this will be, among other things, a massive test of how people actually want to use generative AI. Text generation is already popular in situations where people have to perform highly artificial tones and styles — from the jump, ChatGPT users have found it appealing for writing résumés and cover letters, for example (although it’s unclear, for a variety of reasons, whether this is a good idea). Does a “help me write” button make sense to anyone in the context of a Reddit thread, a fired-up comment section, a jokey response to a friend’s post, a Goodreads review, or an Amazon product page? These are contexts where evidence of humanity is valuable, and where the averaged-out tones of generated text might read as competent spam.

Optimistically, based on having access to a few similar tools for the last six months, my guess is that, in many and maybe most cases, they’ll feel absurd or inappropriate to use — like productivity hacks jammed into what are basically social situations, bug-eyed Clippys hovering awkwardly next to comment threads, tools presenting themselves in contexts where their use would be strange and borderline offensive.

Illustration: Screencap

AI text generation makes some sort of sense when content is being extracted from people who don’t particularly want to produce it: in the form of an assignment, a mundane work task, or a form that just needs to be filled out. These are situations that are already in some way dehumanized. In less antagonistic scenarios, where writing as yourself is the point (basically, the entire category you might call elective uncompensated “posting,” or, uh, “social interaction”), using generative AI could seem like an odd choice: antisocial, self-defeating, ineffective, and indistinguishable from spam. If you’re using AI to generate a Facebook comment, why post at all? Perhaps text generation presents itself to billions of users and most of them say no thanks, most of the time, and the transformative era of generative AI instead takes the form of a somewhat more assertive grammar checker. This would amount to (or at least preview) a deflation in expectations about where this tech is heading in general; in any case, Google will be finding out soon.

It’s worth imagining weirder outcomes, though, in part because of what automation has already done to the web, and how comparatively niche use cases have started affecting the internet for everyone else. Since the arrival of tools like ChatGPT, search results (which Google is also planning to supplement or replace with generated text) have been overwhelmed by passable (to Google, at least) generated content, tipping the already barely managed problem of SEO bait into a full-blown crisis for the basic function of one of the core tools for finding things online. Last year, in an attempt to mitigate declining search quality, Google started surfacing more “perspectives,” which is its term for posts by people with “firsthand experience” on “social media platforms, forums and other communities”: basically, posts that are categorically less likely to be SEO-driven spam, created as they were for human audiences rather than a search algorithm. Soon, with Chrome, Google will be inviting more synthetic content into every remaining source of such “perspectives” left on the open web. Setting aside what this means for users, that material is a big part of the corpus on which Google trains its AI tools in the first place, so Google risks training its next generation of AI on material generated by the last, which doesn’t sound ideal for Google.

In the narrow context of Google’s story, then, text generation is best understood as a continuation of a trend, an escalation of a process of increasingly assertive automation — of content discovery, categorization, distribution, and now creation — that was only ever leading in one direction. In the context of the slow death of the open web for reasons that predate AI — namely, the commercialization of all online interactions and subsequent collapse of the business model with which they were commercialized — it’s probably more symbolic than consequential.

Still, at the very least, we can expect the wide rollout of text generation tools to change the texture of the parts of the web that remain more or less intact and functional as spaces where people talk to each other and voluntarily, helpfully share information (look out, Reddit and Wikipedia), and to subtly alter users’ feelings about contributing to such projects in the first place. We have the technology, in other words, for a web that publishes itself. Will anyone want to read it?

Tyler Fields is your internet guru, delving into the latest trends, developments, and issues shaping the online world. With a focus on internet culture, cybersecurity, and emerging technologies, Tyler keeps readers informed about the dynamic landscape of the internet and its impact on our digital lives.