This section describes the experiments and the results.

Experiment preparation

In our approach, a dataset of 6000 images was used to train the autoencoder. The trained model is then used in the experiments to perform dimensionality reduction on the image data at the edge. The training was performed on a machine with the following specifications:

The training parameters were:

- Optimizer: Adam
- Epochs: 50
- Activation: ReLU
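The training setup above can be sketched in plain NumPy. This is not the authors' model. The following are all assumptions made for illustration: a dense (not convolutional) architecture with a single 16-unit latent layer, 8×8 stand-in images, a mean-squared-error loss, a learning rate of 1e-3, and a batch size of 32. Only the Adam optimizer, the ReLU activation, and the 50 epochs come from the text. In practice, a framework such as Keras or PyTorch would compute the gradients.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


class DenseAutoencoder:
    """Hypothetical dense autoencoder: ReLU encoder (d -> k), linear decoder (k -> d)."""

    def __init__(self, d, k, seed=0):
        rng = np.random.default_rng(seed)
        # He initialisation, a common choice for ReLU layers
        self.p = {
            "W1": rng.normal(0.0, np.sqrt(2.0 / d), (d, k)), "b1": np.zeros(k),
            "W2": rng.normal(0.0, np.sqrt(2.0 / k), (k, d)), "b2": np.zeros(d),
        }
        # Adam first- and second-moment estimates, one per parameter array
        self.m = {n: np.zeros_like(v) for n, v in self.p.items()}
        self.v = {n: np.zeros_like(v) for n, v in self.p.items()}
        self.t = 0

    def encode(self, x):
        return relu(x @ self.p["W1"] + self.p["b1"])

    def decode(self, z):
        return z @ self.p["W2"] + self.p["b2"]

    def train_step(self, x, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        p = self.p
        pre = x @ p["W1"] + p["b1"]
        z = relu(pre)
        diff = (z @ p["W2"] + p["b2"]) - x
        loss = float(np.mean(diff ** 2))
        # Backpropagate the mean squared reconstruction error
        g_out = 2.0 * diff / diff.size
        g_pre = (g_out @ p["W2"].T) * (pre > 0)
        grads = {"W2": z.T @ g_out, "b2": g_out.sum(axis=0),
                 "W1": x.T @ g_pre, "b1": g_pre.sum(axis=0)}
        # Adam update with bias-corrected moment estimates
        self.t += 1
        for n, g in grads.items():
            self.m[n] = beta1 * self.m[n] + (1 - beta1) * g
            self.v[n] = beta2 * self.v[n] + (1 - beta2) * g ** 2
            m_hat = self.m[n] / (1 - beta1 ** self.t)
            v_hat = self.v[n] / (1 - beta2 ** self.t)
            p[n] -= lr * m_hat / (np.sqrt(v_hat) + eps)
        return loss


def train(model, data, epochs=50, batch_size=32, seed=0):
    """Shuffle each epoch, train on mini-batches, and return the mean loss per epoch."""
    rng = np.random.default_rng(seed)
    history = []
    for _ in range(epochs):
        order = rng.permutation(len(data))
        losses = [model.train_step(data[order[i:i + batch_size]])
                  for i in range(0, len(data), batch_size)]
        history.append(float(np.mean(losses)))
    return history


# Stand-in data: 200 random "images" of 8x8 pixels, flattened and scaled to [0, 1]
images = np.random.default_rng(1).random((200, 64))
ae = DenseAutoencoder(d=64, k=16)
history = train(ae, images, epochs=50)
print(f"first epoch loss {history[0]:.4f}, last epoch loss {history[-1]:.4f}")
```

After training, `ae.encode` plays the role of the edge-side encoder and `ae.decode` the cloud-side decoder.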

The 6000 training images were drawn from the COCO and DIV2K datasets:

- The Microsoft Common Objects in Context (MS COCO) dataset [21] is a large-scale dataset for object detection, segmentation, key-point detection, and captioning. It comprises over 328K images of varying sizes and resolutions, with object annotations spanning 80 categories and five captions per image describing the scene.

- The DIV2K dataset [22] comprises 1000 diverse 2K-resolution RGB images. All images were manually collected and have a resolution of 2K pixels on at least one axis (vertical or horizontal). Their content is highly varied, ranging from people, handmade objects, and environments to natural scenery, including underwater scenes.

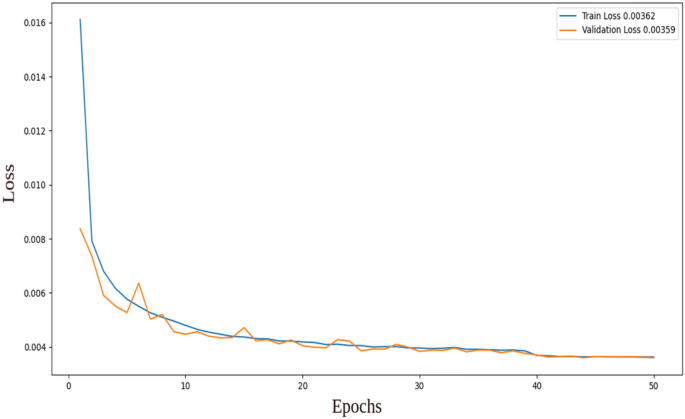

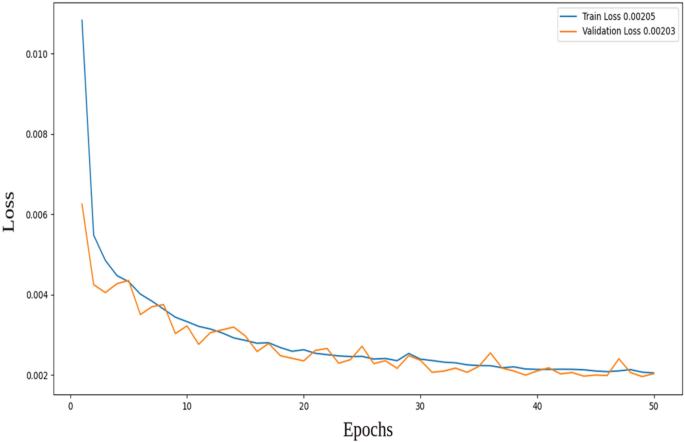

Figures 4 and 5 show the training and validation losses for the two-layer and three-layer autoencoders, respectively. For the two-layer autoencoder, the training loss was 0.00362 and the validation loss was 0.00359; for the three-layer autoencoder, they were 0.00205 and 0.00203, respectively. Reconstruction quality was also assessed with the Structural Similarity Index Measure (SSIM) [23], a method for predicting the perceived quality of digital television, cinematic pictures, and other digital images and videos by measuring the similarity between two images. On the validation set, the multi-scale variant of this measure (MS-SSIM) was 0.85716 for the two-layer autoencoder and 0.88425 for the three-layer autoencoder, indicating higher-quality reconstruction by the three-layer autoencoder.

Figure 4. Training and validation loss for the two-layer autoencoder.

Figure 5. Training and validation loss for the three-layer autoencoder.
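The SSIM expression itself is short. The sketch below evaluates it once over the whole image using the constants K1 = 0.01 and K2 = 0.03 from the original SSIM definition. The standard metric instead averages the same expression over local (typically 11×11 Gaussian) windows, and MS-SSIM, the variant reported above, combines it across several image scales. This global version is therefore only an illustration. For real evaluations, a library implementation such as scikit-image's `skimage.metrics.structural_similarity` is preferable.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2  # stabilises the luminance term
    c2 = (k2 * data_range) ** 2  # stabilises the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return float(luminance * contrast_structure)


rng = np.random.default_rng(0)
original = rng.random((64, 64))
noisy = np.clip(original + rng.normal(0.0, 0.05, original.shape), 0.0, 1.0)
print(global_ssim(original, original))  # identical images reach the maximum of 1
print(global_ssim(original, noisy))     # added noise lowers the score
```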

When we increased the number of epochs beyond 50 and the number of hidden layers beyond three, overfitting occurred. Training for more epochs gives the model more time to converge to an optimal solution, and adding hidden layers increases its capacity; both can improve accuracy. However, they also raise the risk of overfitting, in which the model becomes too specialized to the training data and starts capturing its noise, lowering accuracy on the validation or test set.
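Early stopping is a common guard against this kind of overfitting, although the text does not say whether the authors used it. The technique monitors the validation loss after each epoch and keeps the weights from the best epoch once the loss stops improving. The framework-free sketch below illustrates the idea; the patience value and the validation curve are hypothetical. Keras provides the same behaviour through `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)`.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch index with the lowest validation loss, scanning epochs in order
    and stopping once `patience` consecutive epochs fail to improve on the best loss
    by more than `min_delta`."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stopped improving: likely overfitting
    return best_epoch


# Hypothetical validation curve that starts rising after epoch 3
val = [0.0100, 0.0080, 0.0060, 0.0055, 0.0057, 0.0060, 0.0065, 0.0070]
print(early_stopping_epoch(val))  # 3
```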

The accuracy of the final machine learning task should be kept as high as possible. At the same time, because the primary objective of the proposed architecture is to reduce network traffic and latency, the amount of data that can be removed at the edge is also important, so both factors must be weighed when choosing a dimensionality reduction method.
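The data-reduction side of this trade-off comes down to simple arithmetic on payload sizes. The sketch below compares a raw 640 × 480 RGB frame stored as 8-bit values with a latent tensor. The latent tensor's 120 × 160 × 4 shape and its 16-bit storage are purely hypothetical, because the actual latent sizes of the autoencoders are not given in this section.

```python
from math import prod


def payload_bytes(shape, bytes_per_value):
    """Size in bytes of a dense array with the given shape and element size."""
    return prod(shape) * bytes_per_value


def reduction_percent(original_bytes, encoded_bytes):
    """Percentage of the original payload removed before transmission."""
    return 100.0 * (1.0 - encoded_bytes / original_bytes)


# Hypothetical sizes: a 640x480 RGB frame stored as 8-bit values versus an
# assumed 120x160x4 latent tensor stored as 16-bit floats.
raw = payload_bytes((480, 640, 3), 1)      # 921600 bytes
latent = payload_bytes((120, 160, 4), 2)   # 153600 bytes
print(f"{reduction_percent(raw, latent):.1f}% less data sent")  # 83.3% less data sent
```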

Machine learning task

The proposed approach was evaluated on image object detection using YOLO, which stands for ‘You Only Look Once’, a technique for real-time object recognition and detection in images. YOLO treats object detection as a single regression problem: a convolutional neural network (CNN) predicts bounding boxes and their class probabilities directly from the full image. As the name implies, the approach requires only one forward pass through the network to detect objects [24].
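Because YOLO's single forward pass produces many overlapping candidate boxes, most YOLO versions post-process them with non-maximum suppression (NMS), which relies on intersection over union (IoU). The greedy, class-agnostic version below is a simplified sketch. Real implementations usually run it per class after filtering out low-confidence boxes. The 0.5 threshold is an assumption.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) < iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of 81/119 (about 0.68), so it is suppressed, while the distant third box is kept.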

Datasets

The object detection experiments used a set of 4000 images chosen at random from three datasets, which were selected to represent a diverse range of data:

- The MS COCO dataset [21].

- The human detection dataset [25], which comprises 921 indoor and outdoor images of varying sizes and resolutions, sourced from CCTV footage on YouTube and from the Open Indoor Images dataset. Of these, 559 images contain humans and the remaining 362 do not.

- The HDA Person Dataset [26], a multi-camera, high-resolution image sequence dataset designed for research on high-definition surveillance. Footage from 18 cameras, including VGA, HD, and Full HD models, was recorded simultaneously for 30 min in a typical indoor office during a busy hour, involving more than 80 people. Most of the images were captured by conventional cameras at a resolution of 640 × 480.
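The text does not state how the 4000 images were split across the three datasets. One simple, reproducible option is to pool all candidate image IDs and draw a uniform random sample without replacement. The sketch below follows that approach. The ID formats, the COCO and HDA pool sizes, and the seed are hypothetical; only the 921-image size of the human detection dataset comes from the text. With uniform pooling, each dataset's share is proportional to its pool size. Fixing the proportions would require a stratified draw instead.

```python
import random


def sample_images(pools, total, seed=0):
    """Draw `total` distinct (dataset, image_id) pairs uniformly from the union of the pools."""
    pooled = [(name, image_id) for name, ids in pools.items() for image_id in ids]
    rng = random.Random(seed)  # fixed seed so the selection can be reproduced
    return rng.sample(pooled, total)


# Hypothetical image IDs; only the 921-image size of the human detection set comes from the text.
pools = {
    "coco": [f"coco_{i:06d}" for i in range(5000)],
    "human_detection": [f"human_{i:03d}" for i in range(921)],
    "hda": [f"hda_{i:05d}" for i in range(3000)],
}
selection = sample_images(pools, total=4000)
print(len(selection))  # 4000
```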

Experiments

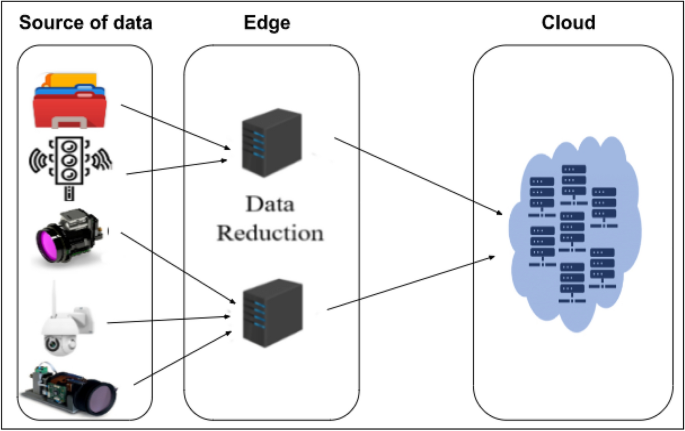

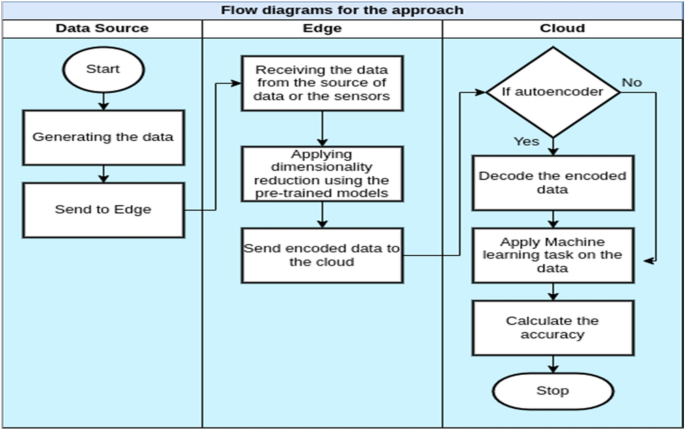

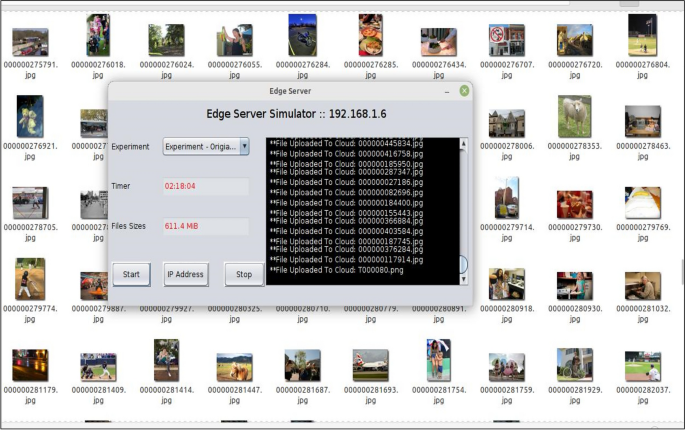

Four experiments were conducted, corresponding to the four scenarios outlined in Fig. 3. Each followed the flow in Fig. 6, starting from data captured by camera sensors or taken from an existing image collection. An Android mobile application running on Lenovo tablets transferred the images to the edge servers using socket programming, addressing each edge node by its IP address. In Experiments 2 to 4, the edge nodes then applied dimensionality reduction to the received images. Figure 7 shows the desktop simulation application developed for the edge nodes, which receives images from the sensors, manages the dimensionality reduction method, and transmits the encoded data to the cloud server.

Figure 6. Flowchart of the experiment.

Figure 7. Simulation of the edge-device application.
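A TCP socket delivers a byte stream rather than discrete messages, so the receiving edge node must know where each image ends. A common solution, assumed here rather than taken from the paper, is to prefix every image with its length. The Python sketch below shows this framing logic; the 8-byte header format is hypothetical, and the authors' Android client is not reproduced. In the demonstration, a connected socket pair stands in for the tablet-to-edge connection. A real edge node would instead `bind`, `listen`, and `accept` on its own IP address.

```python
import socket
import struct

HEADER = struct.Struct("!Q")  # hypothetical 8-byte big-endian payload length


def send_image(sock, payload):
    """Send one image as a length-prefixed message."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock, n):
    """Read exactly n bytes; recv() may return fewer bytes than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_image(sock):
    """Receive one length-prefixed image message."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


# Local demonstration with a connected socket pair standing in for tablet and edge node
tablet, edge = socket.socketpair()
fake_jpeg = b"\xff\xd8" + bytes(2048) + b"\xff\xd9"  # placeholder bytes, not a real image
send_image(tablet, fake_jpeg)
received = recv_image(edge)
print(len(received))  # 2052
tablet.close()
edge.close()
```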

Experiment 1

Images are sent directly from the sensors to the cloud, where object detection is performed on the original data and its accuracy is measured. This accuracy serves as the baseline against which the other experiments are evaluated.

Experiment 2

Principal component analysis (PCA) is applied at the edge nodes to reduce the dimensionality of the data in real time, and the encoded data are then sent to the cloud. The object detection task is carried out on the encoded data in the cloud, and its accuracy is computed.
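A minimal PCA codec can be built on NumPy's SVD. The section does not specify how PCA was applied to the images (per image, per channel, or over a batch). This sketch assumes that each row of `X` is one flattened sample. It also assumes that the cloud receives the fitted mean and components, since it needs both to map the encoded scores back to pixel space. scikit-learn's `sklearn.decomposition.PCA` offers the same operations through `fit`, `transform`, and `inverse_transform`.

```python
import numpy as np


class PCACodec:
    """PCA encoder/decoder fitted on flattened samples (rows of X)."""

    def __init__(self, n_components):
        self.n_components = n_components

    def fit(self, X):
        self.mean_ = X.mean(axis=0)
        # Rows of vt are the principal directions, sorted by decreasing singular value
        _, _, vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        return self

    def encode(self, X):
        """Project centred samples onto the retained components (edge side)."""
        return (X - self.mean_) @ self.components_.T

    def decode(self, Z):
        """Map component scores back to the original space (cloud side)."""
        return Z @ self.components_ + self.mean_


rng = np.random.default_rng(0)
# Synthetic data lying in a 3-dimensional subspace of a 20-dimensional space
X = rng.random((100, 3)) @ rng.random((3, 20))
codec = PCACodec(n_components=3).fit(X)
Z = codec.encode(X)
print(Z.shape)  # (100, 3)
print(np.allclose(codec.decode(Z), X))  # True
```

Because the synthetic data have rank 3, three components reconstruct them exactly. On real images, discarded components are lost, and the reconstruction is only approximate.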

Experiment 3

The encoder of the two-layer autoencoder runs on the edge node and encodes images in real time. The edge application transmits the encoded images directly to the cloud, where the autoencoder’s decoder reconstructs them. The decoded images are then used for the object detection task, and the accuracy is computed.
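In this split, only the encoder's output crosses the network. The edge must therefore serialise it, and the cloud must rebuild it before the decoder runs. The sketch below packs a NumPy latent tensor behind a small shape header. Casting to 16-bit floats is an extra assumption not stated in the text; it halves the size of 32-bit values at some cost in precision. The latent shape in the example is also hypothetical.

```python
import struct

import numpy as np

WIRE_DTYPE = np.dtype("<f2")  # little-endian float16 on the wire (assumption)


def pack_latent(latent):
    """Serialise an encoder output: ndim, then each dimension, then float16 values."""
    latent = np.asarray(latent)
    header = struct.pack(f"!B{latent.ndim}I", latent.ndim, *latent.shape)
    return header + latent.astype(WIRE_DTYPE).tobytes()


def unpack_latent(data):
    """Rebuild the latent tensor in the cloud, as float32 for the decoder."""
    ndim = data[0]
    offset = 1 + 4 * ndim
    shape = struct.unpack(f"!{ndim}I", data[1:offset])
    values = np.frombuffer(data, dtype=WIRE_DTYPE, offset=offset)
    return values.reshape(shape).astype(np.float32)


# Hypothetical latent tensor for one image: batch 1, 60x80 spatial grid, 8 channels
z = np.random.default_rng(0).random((1, 60, 80, 8), dtype=np.float32)
message = pack_latent(z)
restored = unpack_latent(message)
print(len(message), restored.shape)  # 76817 (1, 60, 80, 8)
```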

Experiment 4

This experiment is similar to Experiment 3 but uses the three-layer autoencoder.

Encoding and decoding times were also considered for both the autoencoders and PCA. Tables 1, 2, and 3 give examples of these times for three groups of images with different sizes and resolutions. For the sampled images, the three-layer autoencoder took longer to encode and decode than the two-layer autoencoder, as expected given its additional layer, but in return it kept the decoded images closer to the originals.
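Per-image encoding and decoding times can be measured with a monotonic, high-resolution clock. The sketch below wraps any encode or decode function with `time.perf_counter` and reports the median of several runs, which is less sensitive to one-off delays than a single measurement. The repeat count and the dummy encoder are hypothetical, and the section does not describe how the reported times were actually measured.

```python
import statistics
import time


def time_call(fn, *args, repeats=5):
    """Call fn(*args) `repeats` times; return the last result and the median wall time in ms."""
    timings_ms = []
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return result, statistics.median(timings_ms)


def dummy_encode(pixels):
    # Hypothetical stand-in for an encoder: average each group of four values
    return [sum(pixels[i:i + 4]) / 4 for i in range(0, len(pixels), 4)]


encoded, ms = time_call(dummy_encode, list(range(640 * 480)))
print(len(encoded), f"{ms:.2f} ms")
```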
