BOSTON—Given that this year’s Bio-IT World Conference & Expo included three tracks about artificial intelligence (AI)-based applications including a new one focused on Generative AI, it was clear that this year’s meeting would have a very strong AI focus. That was also evident from the first keynote talk given by Dan Stanzione, PhD, executive director of Texas Advanced Computing Center (TACC) and associate vice president for research at the University of Texas at Austin.

In his talk titled, “Unleashing the power of advanced computing in bioinformatics,” Stanzione noted that while historically, bioinformatics drove the evolution of TACC, that shifted a few years ago to AI as scientists continue to generate large quantities of multimodal datasets.

“Twenty-five years ago, you gave people a big computer and a Fortran and told them to get to work [but] that’s no longer the way things are done,” he said. “Now life sciences projects are multi-team affairs with each group tasked with running different aspects of the project.” Modern science, he continued, requires much more than software.

Given the size of today’s datasets, the hardware needs and energy requirements for computing have skyrocketed. TACC is home to Frontera, a $60-million supercomputer funded by the National Science Foundation, ranked as the 29th most powerful system in the world. Among other platforms, TACC also houses Stampede3, a new supercomputer that went into full production this year. Supported by a $10-million grant from the National Science Foundation, Stampede3 advances high-performance computing for AI and machine learning.

These and other TACC systems are housed in large datacenters that consume enough energy to power an entire state and cost millions to build and maintain, leading Stanzione to joke that he might as well be loading the compute racks with gold bars rather than boxes.

Power hungry

If current trends continue, both power demand and cost are projected to rise rapidly in the coming years. During “Trends in the Trenches,” one of the conference’s perennially popular sessions, delivered by members of the scientific IT consultancy BioTeam, CEO Ari Berman noted that the AI industry is expected to consume 85–135 terawatt-hours of electricity per year by 2027. That is roughly enough energy to power the Netherlands for a year.
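Annual energy figures like these are normally expressed in terawatt-hours (TWh). As a rough sketch, assuming the 85–135 range refers to TWh per year, the short Python snippet below converts that annual energy into an equivalent average continuous power draw in gigawatts. The function name and constants are illustrative and do not come from Berman's talk.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def average_power_gw(energy_twh_per_year: float) -> float:
    """Convert annual energy (TWh/year) to average continuous power (GW)."""
    # 1 TWh = 1,000 GWh, so divide GWh per year by hours per year to get GW.
    return energy_twh_per_year * 1000 / HOURS_PER_YEAR


# Assumed range: 85-135 TWh per year, as quoted in the session.
low_gw = average_power_gw(85)
high_gw = average_power_gw(135)
print(f"{low_gw:.1f} to {high_gw:.1f} GW")  # prints "9.7 to 15.4 GW"
```

Under that assumption, the projected consumption corresponds to roughly 10 to 15 gigawatts drawn around the clock.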

Such massive power consumption is both expensive and unsustainable. Companies like NVIDIA, which recently announced new, powerful AI computing platforms for drug discovery and genomics, have touted the energy efficiency of their graphics processing units. But these can’t be the only solution, Berman noted, adding that new cooling methods and systems that use power more efficiently are very much needed.

At several points throughout the conference, a recurring question could be heard: where exactly can AI be most useful in the life sciences?

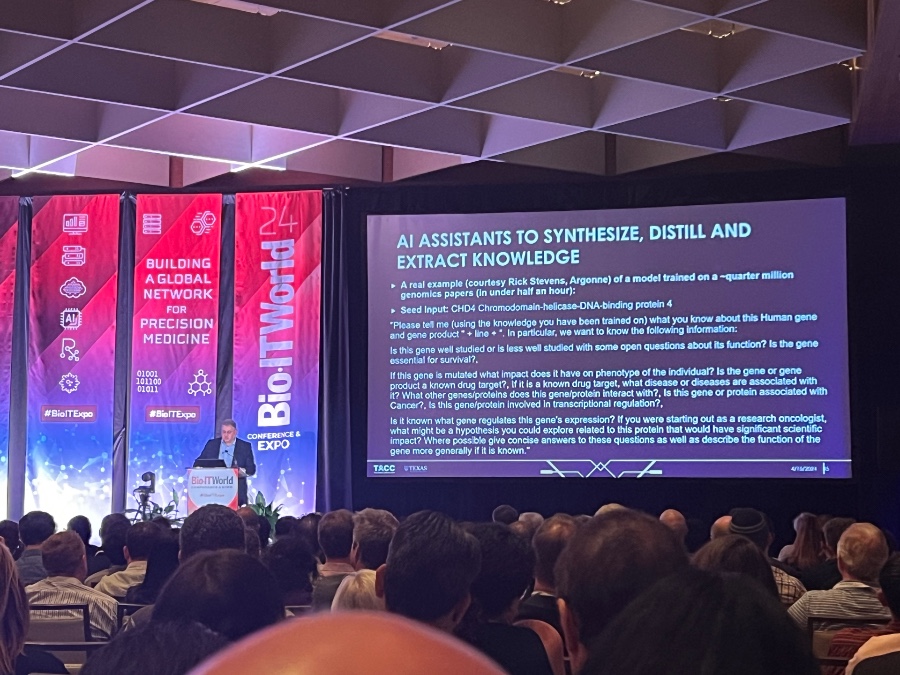

Stanzione noted that AI might be less impactful in some arenas than in others. He and others at the conference pointed out that it can be useful as something of a scientist’s assistant, helping to synthesize, distill, and extract knowledge from scientific literature. It may have less use in personalized medicine, Stanzione said, because deep learning algorithms require a lot of data to be properly trained. However, it could be useful for extracting insights from population-level data about things like vaccine effectiveness, he added.

Berman urged a more respectful and responsible approach to applying AI in the life sciences. Interest in the technology would not be growing if it were not “super useful” and if there weren’t clear applications where it could be leveraged successfully, such as pathology and robot-assisted surgery. But, he noted, the most productive uses of AI in the life sciences are still about 2–5 years away. Right now, Berman said, there is a lot of hype around a technology that ultimately is not well understood, nor is it “something we should 100% rely on.”

Twin discovery

While not directly about AI, the second keynote of the conference centered on digital twins in oncology. The talk was delivered by Caroline Chung, MD, MSc, vice president, chief data officer, and director of data science development and implementation at MD Anderson Cancer Center.

During her talk, titled, “Unveiling Tomorrow’s Possibilities: Embrace the Power of Digital Twins in Cancer Care and Research,” Chung acknowledged both the promise and hype of digital twins, and the importance of being thoughtful in adopting the technology to ensure that it is improving quality of care for patients.

Chung participated in a multi-agency sponsored initiative led by the National Academies of Sciences, Engineering, and Medicine focused on digital twin technologies and their application in healthcare and other sectors. Their efforts, detailed in a report titled, “Foundational Research Gaps and Future Directions for Digital Twins,” were summarized by Chung in her keynote address.

Critical to the initiative’s work was a clear understanding of what a digital twin is and is not. As Chung noted, digital twins involve much more than simulation and modeling, and the community has to “be critical about what we define as a digital twin to avoid falling into the hype.” She also flagged some of the requirements and challenges of implementing digital twins in the healthcare context, including the need for transparency, the risks of patient exposure, and the importance of a culture shift.

In oncology, digital twins could be used in clinical trials, drug discovery and development, preclinical testing, and much more. While digital twins are not avatars, they do “mimic the structure, context, and behavior” of a physical system, and there is a bidirectional interaction between the virtual and physical counterparts. One implication of that is “an increased risk of identifiability in the data we are dealing with,” she said.

Furthermore, model verification and validation are typically done at a single point in time. But given the bidirectional communication involved in using digital twins, Chung asked, “how do we manage verifying and validating and generating uncertainty quantification for a model that is continuously changing?”

Developers of digital twins must also deal with some of the same data challenges as AI developers.

“Data generation and flow is messy and labor intensive in healthcare today,” with varying standards and terminologies that result in a lot of duplicated data in electronic medical records (EMRs), she noted. There is also information important to clinicians that is not currently captured in EMRs, as well as a positive publication bias, as negative results may be left out of training data.

To illustrate the data challenges that she and her colleagues deal with, Chung used an example from tumor imaging, where variations in the protocols used can affect tumor detection and tracking. Physicians at MD Anderson may use imaging methods that detect 2 mm tumors in a patient. But that same patient may previously have visited a different center that used methods capable of detecting 3 mm tumors. Based on the results from one center, a physician might erroneously enroll a patient in a clinical trial, thinking their tumor is progressing, or disenroll them based on the second result, thinking that they no longer need treatment.

Chung ended her keynote by highlighting some of the recommendations from the National Academies report, including the need for broader interaction and collaboration and the need to ensure that verification, validation, and uncertainty quantification are a critical part of digital twin programs. She also called for thoughtful consideration of the ethical aspects of digital twins before the technology moves into broader use.

Launched in 2002, the Bio-IT World Conference & Expo attracted an estimated 3,000 attendees from 30 countries this year, both in person at the event’s new home (the Omni Hotel on the Boston Seaport) and online.

Wanda Parisien is a computing expert who navigates the vast landscape of hardware and software. With a focus on computer technology, software development, and industry trends, Wanda delivers informative content, tutorials, and analyses to keep readers updated on the latest in the world of computing.