The human brain’s processing power is exceptional. By some estimates, it can perform the equivalent of a billion-billion (10^18) mathematical operations per second using just 20 watts of power.

Researchers are now trying to capture this efficiency by building computers inspired by the human brain’s operation and function. This field, known as neuromorphic computing, uses artificial synapses and neurons to process information.

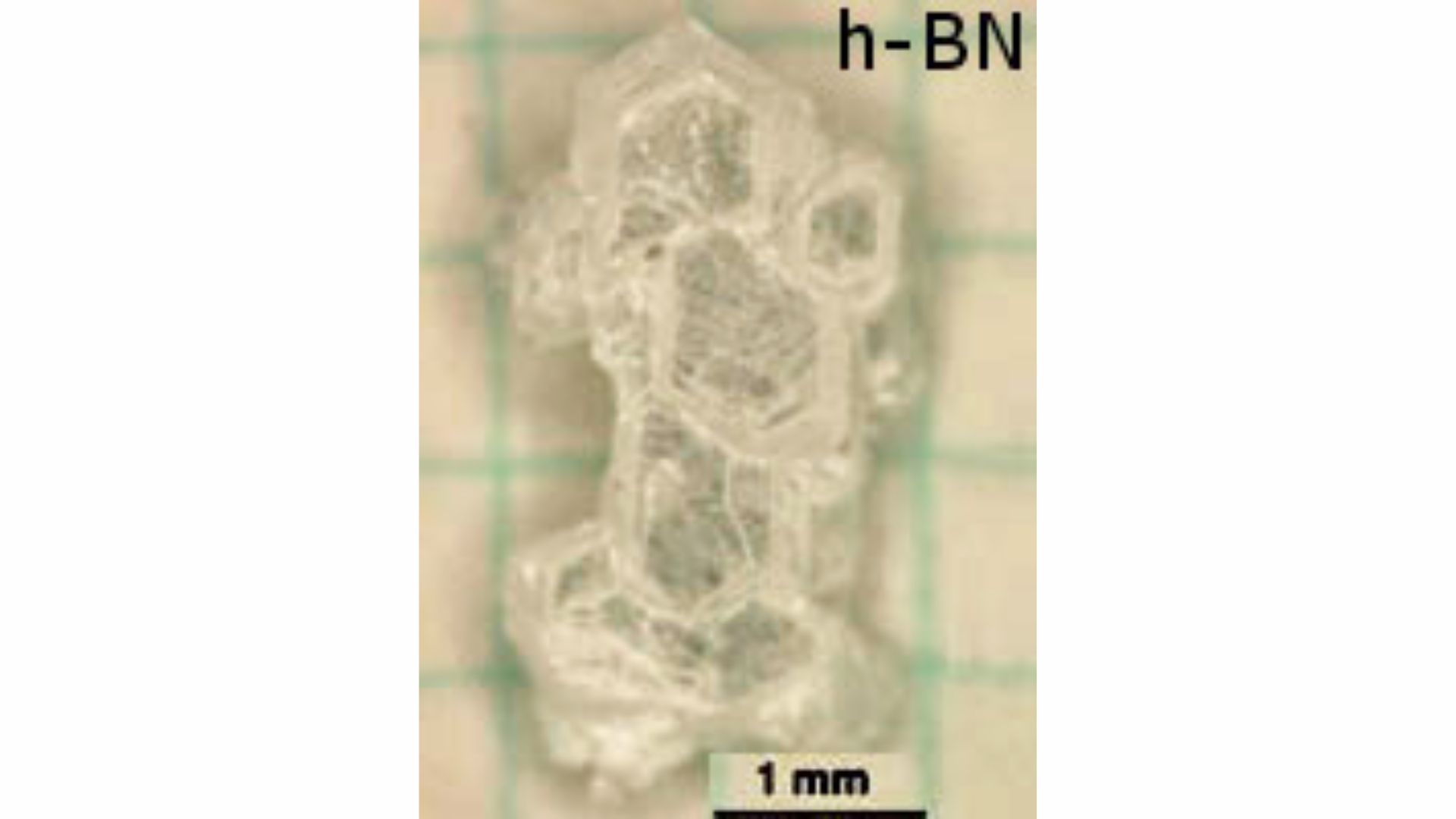

Although this is still an emerging area of research, a new study reports a significant step forward. Researchers from the Center for Neuromorphic Engineering at the Korea Institute of Science and Technology (KIST) have built an integrated hardware system of artificial neurons and synaptic devices made from hexagonal boron nitride (hBN).

Their goal was to create neuron-synapse-neuron building blocks that can be stacked to form large-scale artificial neural networks.

“Artificial neural network hardware systems can be used to efficiently process vast amounts of data generated in real-life applications such as smart cities, healthcare, next-generation communications, weather forecasting, and autonomous vehicles,” said KIST’s Dr. Joon Young Kwak, one of the study’s authors, in a press release.

But what is the problem with classical computing, and how does neuromorphic computing mimic the brain?

Shortcomings of classical computing

One reason scientists are exploring alternative computing paradigms is the limitations of present-day computers. Impressive as they are, they consume a lot of power, scale poorly, and offer limited parallelism.

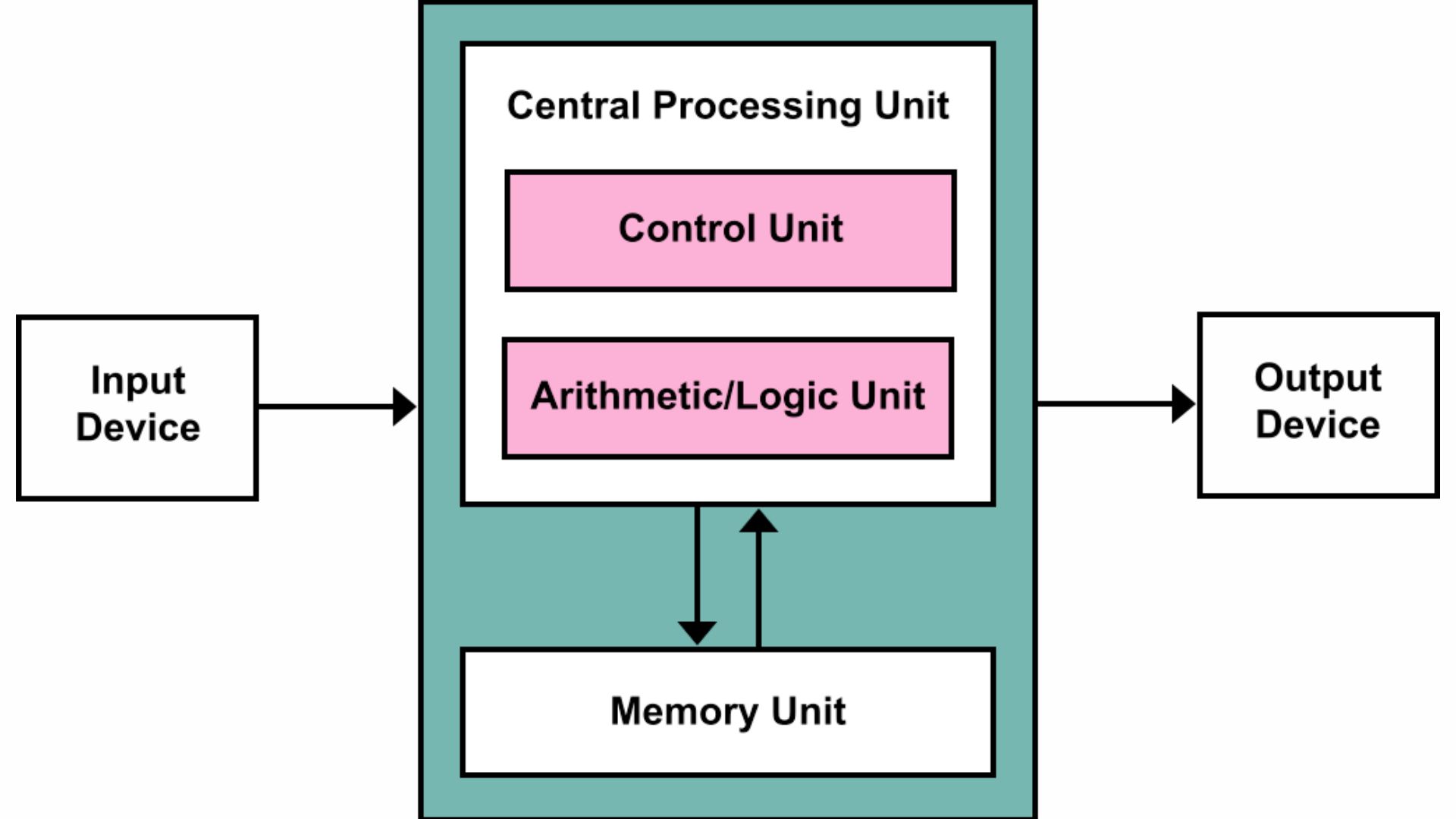

These drawbacks make today’s computers inefficient, and a major cause is the von Neumann architecture on which they are built.

In today’s computers, memory and the data processor are two separate units connected by a narrow channel called the bus. Any time a computation is performed, data must be transferred between the memory and the processing unit, the CPU (central processing unit).

The flow of data back and forth creates a bottleneck in performance that worsens as the computations get more complex, lowering the efficiency of the overall system. This is known as the von Neumann bottleneck and affects most present-day computers.
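To make the bottleneck concrete, here is a rough, roofline-style estimate in Python. It is only a sketch: the processor speed and bus bandwidth are hypothetical round numbers, not measurements of any real machine, and the model assumes computation and data transfer overlap perfectly, so a task can finish no faster than the slower of the two.

```python
# Rough, roofline-style estimate of the von Neumann bottleneck.
# All hardware figures are hypothetical round numbers chosen for illustration.

def run_time_seconds(num_ops, bytes_moved, ops_per_second, bus_bytes_per_second):
    """Lower bound on run time, assuming compute and transfer overlap perfectly.

    The task can finish no faster than the slower of (a) doing the arithmetic
    and (b) moving its data between memory and the processor over the bus.
    """
    compute_time = num_ops / ops_per_second
    transfer_time = bytes_moved / bus_bytes_per_second
    return max(compute_time, transfer_time)


OPS_PER_S = 1e11        # hypothetical processor: 100 billion operations per second
BUS_BYTES_PER_S = 1e10  # hypothetical bus: 10 billion bytes per second

# Adding two vectors of n 4-byte numbers: n additions, but 12 bytes move per element
# (read a[i], read b[i], write the result back).
n = 1_000_000
compute_only = n / OPS_PER_S
t = run_time_seconds(num_ops=n, bytes_moved=12 * n,
                     ops_per_second=OPS_PER_S, bus_bytes_per_second=BUS_BYTES_PER_S)

print(f"arithmetic alone: {compute_only:.1e} s")
print(f"with data movement: {t:.1e} s")
```

With these assumed figures, the arithmetic alone would take 1e-5 seconds, but moving the data over the bus takes 120 times longer, so the processor spends most of its time waiting.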

Overcoming limitations

Neuromorphic computing is designed to avoid many of these problems, for several reasons.

- Parallel computing: Like the brain, these systems can perform many operations simultaneously, which can make them faster.

- Low power: Like the brain, these systems are designed to use as little power as possible for computation.

- Adaptability: When the brain receives input signals (essentially information), it learns from them. Neuromorphic systems share this adaptability, which improves their efficiency and makes them more flexible for tasks such as classification or pattern recognition.

- Fault-tolerant: One of the cool things about these systems is that a failure in one part of the system does not bring down the whole. Because their architecture is distributed and decentralized, a damaged neuron or synapse won’t stop the system from operating.
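The fault-tolerance point can be illustrated with a toy calculation. This is a simplified sketch, not a model of any real neuromorphic chip: the weights, inputs, and threshold below are hypothetical. It compares a neuron whose input is spread over 100 synapses with one that depends on a single synapse, and "damages" one synapse in each.

```python
# Toy illustration of graceful degradation when evidence is spread over many synapses.
# Weights, inputs, and threshold are hypothetical values for illustration.

def neuron_fires(inputs, weights, threshold):
    """Return True if the weighted sum of the inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold


num_synapses = 100
inputs = [1.0] * num_synapses
threshold = 0.5

# Distributed: the total weight of 1.0 is spread evenly over 100 synapses.
distributed = [0.01] * num_synapses
distributed_damaged = distributed.copy()
distributed_damaged[42] = 0.0          # knock out one synapse

# Centralized: the same total weight sits on a single synapse.
centralized = [1.0] + [0.0] * (num_synapses - 1)
centralized_damaged = centralized.copy()
centralized_damaged[0] = 0.0           # knock out that one synapse

print(neuron_fires(inputs, distributed, threshold),
      neuron_fires(inputs, distributed_damaged, threshold))   # True True
print(neuron_fires(inputs, centralized, threshold),
      neuron_fires(inputs, centralized_damaged, threshold))   # True False
```

Losing one of 100 synapses lowers the distributed sum by only 1 percent, so the neuron still fires; losing the only synapse silences the centralized one.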

Let’s move on to the heart of the matter.

Information processing in the brain

Modeling neurons and synapses is the most critical aspect of developing neuromorphic systems. To understand how artificial ones are constructed, we first need to comprehend how the real ones function in the brain.

Neurons are cells responsible for sending signals or information throughout the body. They allow us to do activities like breathing, sleeping, and watching our favorite TV shows.

For this article, we will follow a signal through four parts of the neuron: the dendrites, the soma (cell body), the axon, and the axon terminals.

Dendrites are branch-like protrusions that receive signals from other neurons. They are the first step in information processing. This information is then sent to the soma, which integrates the signals and sends them along the axon.

Like an electrical wire, the axon is a fiber that conducts electrical signals. Finally, the signal reaches the axon terminals, from which it is passed on to the next neuron.

The tiny junctions between the axon terminals of one neuron and the next neuron are called synapses, and they are where information passes between neurons. When a signal arrives, chemicals called neurotransmitters are released into the synapse, allowing the neurons to talk to each other.

Mimicking the brain

Replicating this process is challenging because several behaviors must be reproduced accurately.

- Integration of signals and crossing the threshold: A neuron doesn’t fire, or transmit information, every time it receives an input. It integrates its incoming signals and generates an output signal only if the integrated signal crosses a threshold value.

- Information leaks: The signal a neuron has accumulated gradually leaks away over time, so it may take longer for the neuron to reach its threshold and fire. Firing means that the neuron is sending a signal.

- Synaptic plasticity: The strength of a synaptic connection can change over time depending on the firing of the two neurons it connects. Essentially, this means the information being passed can be modified in real time. This is key to the adaptability of the networks.
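The first two behaviors, integration up to a threshold and leakage, are captured by the leaky integrate-and-fire (LIF) neuron, a standard textbook abstraction. The sketch below is a minimal discrete-time version with hypothetical parameter values; it is not the model used in the KIST study.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire (LIF) neuron.

    At every time step the membrane potential v first leaks (it is multiplied
    by `leak`, where 1.0 means no leak) and then integrates the new input.
    If v reaches `threshold`, the neuron fires (outputs 1) and v is reset.
    """
    v = 0.0
    spikes = []
    for current in input_current:
        v = leak * v + current     # leak, then integrate
        if v >= threshold:         # fire only once the threshold is crossed
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes


steady_input = [0.25] * 6
print(simulate_lif(steady_input, leak=1.0))  # [0, 0, 0, 1, 0, 0]: no leak, fires on step 4
print(simulate_lif(steady_input, leak=0.9))  # [0, 0, 0, 0, 1, 0]: with leak, fires on step 5
```

With the same steady input, the leaky neuron needs one extra step to reach its threshold, which is exactly the delay described in the "information leaks" point.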

Memristors and modeling the brain

The researchers used memristors, devices that can mimic synaptic activity, to build both the artificial neurons and the synaptic devices.

Memristors, or memory resistors, can regulate current flow by remembering their resistance state. In other words, the resistance changes when a current passes through it, but it remains even after the power is switched off.
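The "memory" in a memristor can be sketched with a simplified model, loosely in the spirit of the linear ion-drift idealization often used to introduce memristors. Everything here is an assumption for illustration: the class name, the normalized state `w`, and all parameter values are hypothetical, and this is not a model of the KIST team's hBN devices.

```python
class ToyMemristor:
    """Idealized memristor whose resistance depends on the charge that has passed through it.

    A normalized internal state w in [0, 1] moves in proportion to the charge
    (current * dt) and is clamped to that range. Resistance interpolates
    linearly between r_on (w = 1) and r_off (w = 0). All parameter values are
    hypothetical.
    """

    def __init__(self, r_on=1_000.0, r_off=10_000.0, k=1.0, w=0.0):
        self.r_on = r_on    # ohms, fully "on" state
        self.r_off = r_off  # ohms, fully "off" state
        self.k = k          # how strongly charge moves the state (assumed units: 1/coulomb)
        self.w = w

    def resistance(self):
        return self.r_on * self.w + self.r_off * (1.0 - self.w)

    def apply_current(self, current, dt):
        """Pass `current` amps for `dt` seconds; the state shifts and stays put afterwards."""
        self.w = min(1.0, max(0.0, self.w + self.k * current * dt))


m = ToyMemristor()
print(m.resistance())                   # 10000.0
m.apply_current(current=0.25, dt=1.0)
print(m.resistance())                   # 7750.0
# "Power off": no current flows, nothing updates w, so the resistance is remembered.
print(m.resistance())                   # 7750.0
m.apply_current(current=-0.25, dt=1.0)  # reverse current drives the state back
print(m.resistance())                   # 10000.0
```

The key property is that `resistance()` depends only on the stored state, so the value set by the last current pulse persists until another current changes it.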

For the artificial neurons, the team needed a device that would leak information over time, mimicking the leaky behavior of biological neurons. They chose resistive random-access memory (RRAM), a memristor-based device.

RRAMs allow their resistance to change dynamically during operation. Like traditional RAM, they allow information to be written and read in any order.

They chose volatile RRAMs for the neurons. These devices can change their resistance while operating, but the resistance is eventually lost after the power has been switched off.

On the other hand, the researchers chose non-volatile RRAMs for the synaptic devices. These devices retain their resistance state even after the power is turned off. This emulates the stable connections between neurons, while still mimicking synaptic plasticity by allowing the resistance to change during operation.
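The contrast between the two device types, plus plasticity, can be sketched as follows. This is a hypothetical idealization: the volatile device is modeled as exponential decay with a time constant `tau`, and `update_synapse` is a simple Hebbian-style rule chosen for illustration. Neither is the device physics or learning rule reported in the study.

```python
import math


def relax(state, idle_time, tau=None):
    """State left after a device sits idle (no programming input) for `idle_time`.

    tau=None models a non-volatile device: the state is retained indefinitely.
    A finite tau models a volatile device whose state decays exponentially
    toward 0 with time constant tau (an idealization).
    """
    if tau is None:
        return state
    return state * math.exp(-idle_time / tau)


def update_synapse(weight, pre_spiked, post_spiked, step=0.1, w_min=0.0, w_max=1.0):
    """Toy Hebbian-style plasticity rule (hypothetical), clamped to the device range.

    Strengthen the synapse when both neurons fire together; weaken it when the
    pre-synaptic neuron fires but the post-synaptic neuron does not.
    """
    if pre_spiked and post_spiked:
        weight += step
    elif pre_spiked:
        weight -= step
    return min(w_max, max(w_min, weight))


# Neuron-like volatile device: its state fades after 5 time units idle (tau = 1).
print(relax(0.8, idle_time=5.0, tau=1.0))   # about 0.0054

# Synapse-like non-volatile device: learn during operation, then retain the result.
w = update_synapse(0.5, pre_spiked=True, post_spiked=True)   # strengthened to about 0.6
print(relax(w, idle_time=1_000.0))                          # still about 0.6
```

The volatile state behaves like a neuron's leaking potential, while the non-volatile weight keeps whatever it learned, which is the division of labor described above.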

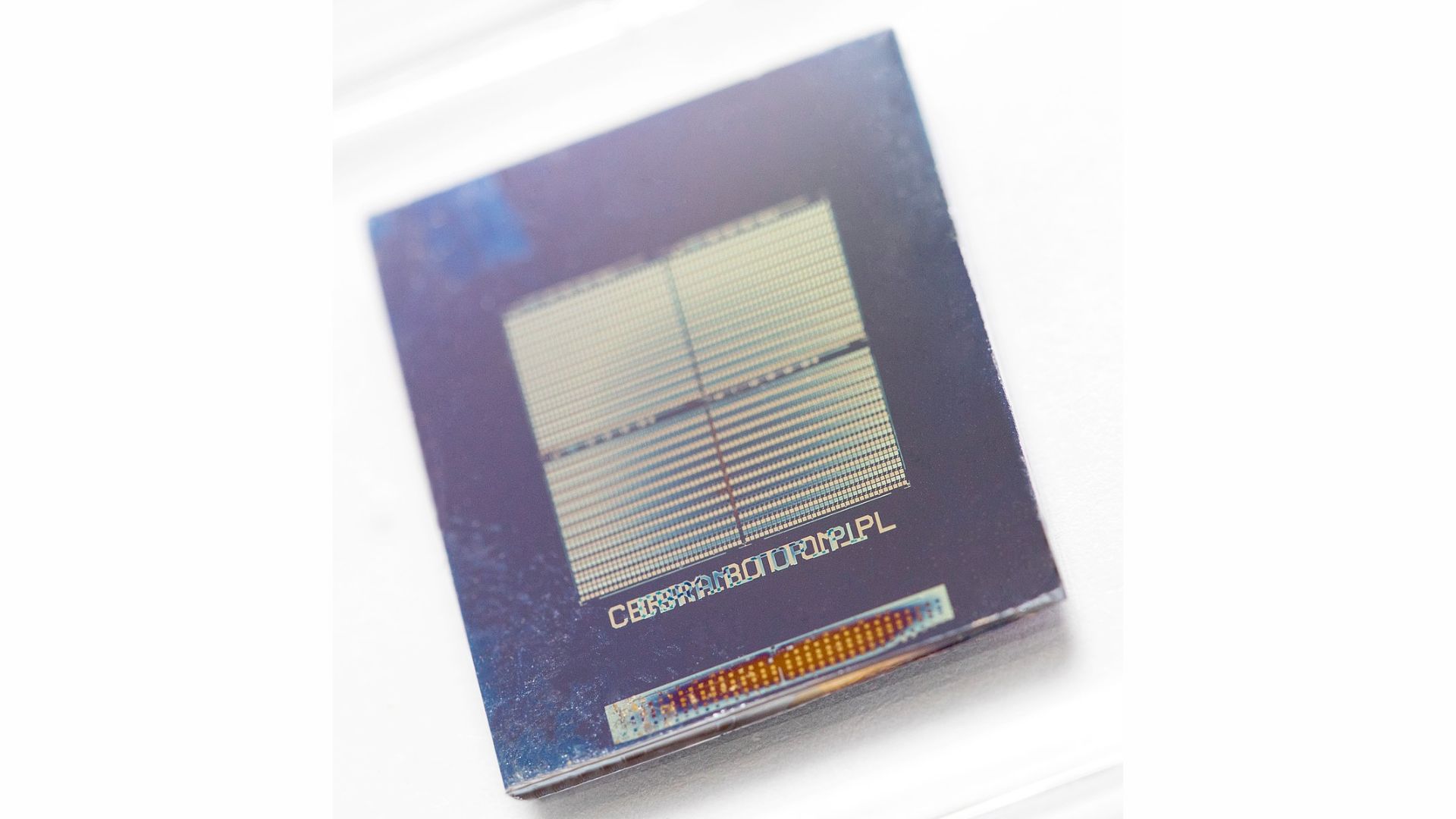

The devices were built from hBN, a two-dimensional material. The researchers chose it because hBN-based devices consume very little power, the material offers high integration density and scalability, and it has been shown to work very well in memristor-based devices such as RRAMs.

So now we have our neurons and synapses. The researchers used the neuron-synapse-neuron setup as a building block or Lego block, which they could use to build an entire network.
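The Lego-block idea can be sketched in software: a pre-synaptic neuron, a synapse that scales its spikes by a weight, and a post-synaptic neuron. This is a hypothetical illustration; the class names, the discrete-time leaky integrate-and-fire neurons, and all parameter values are assumptions, not the circuit the researchers built.

```python
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (hypothetical parameters)."""

    def __init__(self, threshold=1.0, leak=0.9):
        self.threshold = threshold
        self.leak = leak
        self.v = 0.0

    def step(self, input_current):
        """Leak, integrate the input, and return 1 (and reset) if the threshold is reached."""
        self.v = self.leak * self.v + input_current
        if self.v >= self.threshold:
            self.v = 0.0
            return 1
        return 0


class NeuronSynapseNeuron:
    """One building block: pre-neuron -> synapse (weight) -> post-neuron."""

    def __init__(self, weight):
        self.pre = LIFNeuron()
        self.weight = weight   # stands in for the synaptic device's conductance
        self.post = LIFNeuron()

    def step(self, external_input):
        pre_spike = self.pre.step(external_input)
        post_spike = self.post.step(self.weight * pre_spike)
        return pre_spike, post_spike


strong = NeuronSynapseNeuron(weight=0.6)
print([strong.step(1.0) for _ in range(4)])
# [(1, 0), (1, 1), (1, 0), (1, 1)]: the post-neuron fires every second step

weak = NeuronSynapseNeuron(weight=0.05)
print(sum(weak.step(1.0)[1] for _ in range(50)))
# 0: the weak synapse never drives the post-neuron to threshold
```

Because each block exposes the same `step` interface, the output spikes of one block could in principle feed the input of the next, which is the stacking idea behind the building-block approach.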

Implementing and testing a hardware neural network

The final step was assembling the Lego set. The team used their neuron-synapse-neuron blocks to construct a physical artificial neural network, the first of its kind using neurons and synaptic devices built to mimic their biological counterparts.

The researchers tested their network using the MNIST dataset. This large dataset consists of handwritten digits (0-9) and is commonly used for testing machine learning algorithms on classification tasks.

They implemented a spiking neural network with an input layer of 784 neurons, a hidden layer of 100 neurons, and an output layer of 10 neurons. The input layer had 784 neurons because each MNIST image is 28 by 28 pixels, and each pixel is encoded by one input neuron.

The output layer has 10 neurons because the network needs to classify ten digits, one neuron per digit. This mirrors how conventional artificial neural networks for MNIST are typically structured in machine learning.
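The layer sizes translate into a few simple numbers, sketched below. The article does not say how pixels were converted into spikes, whether the layers were fully connected, or how the output was read, so the sketch assumes rate coding, full connectivity, and a "most spikes wins" readout. These are common choices for spiking networks on MNIST, and the helper names are hypothetical.

```python
import random

IMAGE_SIDE = 28
N_INPUT = IMAGE_SIDE * IMAGE_SIDE   # one input neuron per pixel: 784
N_HIDDEN = 100
N_OUTPUT = 10                       # one output neuron per digit, 0-9

# Assumes every neuron connects to every neuron in the next layer (fully connected).
synapses_input_hidden = N_INPUT * N_HIDDEN      # 78,400
synapses_hidden_output = N_HIDDEN * N_OUTPUT    # 1,000
total_synapses = synapses_input_hidden + synapses_hidden_output  # 79,400


def rate_encode(pixel, num_steps, rng):
    """Rate coding (assumed): a pixel of intensity 0-255 spikes at each time step
    with probability pixel / 255, so brighter pixels produce more spikes."""
    p = pixel / 255.0
    return [1 if rng.random() < p else 0 for _ in range(num_steps)]


def predict(output_spike_counts):
    """Readout (assumed): the predicted digit is the output neuron that spiked most."""
    return max(range(N_OUTPUT), key=lambda digit: output_spike_counts[digit])


rng = random.Random(0)
print(N_INPUT, total_synapses)                  # 784 79400
print(rate_encode(0, 10, rng))                  # black pixel: never spikes
print(rate_encode(255, 10, rng))                # white pixel: spikes every step
print(predict([0, 1, 0, 0, 0, 0, 0, 7, 2, 0]))  # 7
```

Under the full-connectivity assumption, a hardware version of this network would need 79,400 synaptic devices, which gives a sense of why stackable, low-power building blocks matter.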

Their network classified the digits with an accuracy of 83.45 percent, reasonably close to the 90.65 percent accuracy of their ideal-case scenario.

For perspective, many modern machine learning algorithms exceed 99 percent accuracy on the MNIST dataset. However, it is crucial to remember that this is a physical implementation of such a network, and it may be affected by noise and other hardware imperfections.

Where will we see neuromorphic computing?

Despite still being in the early stages of development, neuromorphic computing already has many potential applications that could hugely affect various industries.

Because these systems can respond to changes in their environment, they could play a major role in autonomous systems such as vehicles and drug delivery systems.

Cars could sense their surroundings more efficiently, potentially making driverless travel safer and more energy-efficient. The same applies to IoT devices such as sensors, security cameras, and thermostats.

Similarly, drug delivery systems must consume little power or be self-powered, because large power sources cannot be placed inside the human body. This is where low-power neuromorphic systems could play a crucial role.

These systems, once integrated with organic materials, can also be used in prosthetics.

Neuromorphic computing could also be used in large-scale operations and artificial intelligence. These tasks demand fast and efficient operations, which neuromorphic systems excel at, particularly in pattern recognition and decision-making.

Dr. Kwak also mentioned in the press release that “it will help improve environmental issues such as carbon emissions by significantly reducing energy usage while exceeding the scaling limits of existing silicon CMOS-based devices.”

In summary, this technology holds immense potential, but there is still progress to be made. This study, published in Advanced Functional Materials, has shown that scientists are making strides in the right direction.

ABOUT THE EDITOR

Tejasri Gururaj is a versatile Science Writer & Communicator, leveraging her expertise from an MS in Physics to make science accessible to all. In her spare time, she enjoys spending quality time with her cats, indulging in TV shows, and rejuvenating through naps.

Wanda Parisien is a computing expert who navigates the vast landscape of hardware and software. With a focus on computer technology, software development, and industry trends, Wanda delivers informative content, tutorials, and analyses to keep readers updated on the latest in the world of computing.